Albert Jiang

@AlbertQJiang

Followers

3K

Following

2K

Media

149

Statuses

990

Officially submitted my thesis very recently. Extremely grateful to @Mateja_Jamnik @WendaLi8 for the three years of excellent and patient supervision. Just like how Cambridge terms work, each week in the PhD feels very long, but the entire thing feels like an incredibly short

28

35

348

Have been to one of the workshops on LLMs in Warsaw. Frankly, it puts almost all the UK ones to shame. Open and deep exchange of bold ideas makes #IDEAS one of the best institutes to collaborate with. Do not destroy a powerhouse of European innovation.

2

47

253

Many high-quality AI4Maths papers were submitted to ICLR and NeurIPS workshop this year! My first research project was in summer 2018 with @Yuhu_ai_ @jimmybajimmyba and we saw reviews like "this is of limited novelty to a niche research field". So damn encouraging.

5

13

56

Baldur: Whole-Proof Generation and Repair with Large Language Models. This is such amazing work. Congrats to Emily, Markus @MarkusNRabe, Talia @TaliaRinger, and Yuriy @YuriyBrun!

6

26

141

Join us to build with the best colleagues! Offices in France, UK, and US west coast.

We are announcing €600M in Series B funding for our first anniversary. We are grateful to our new and existing investors for their continued confidence and support for our global expansion. This will accelerate our roadmap as we continue to bring frontier AI into everyone’s.

4

4

122

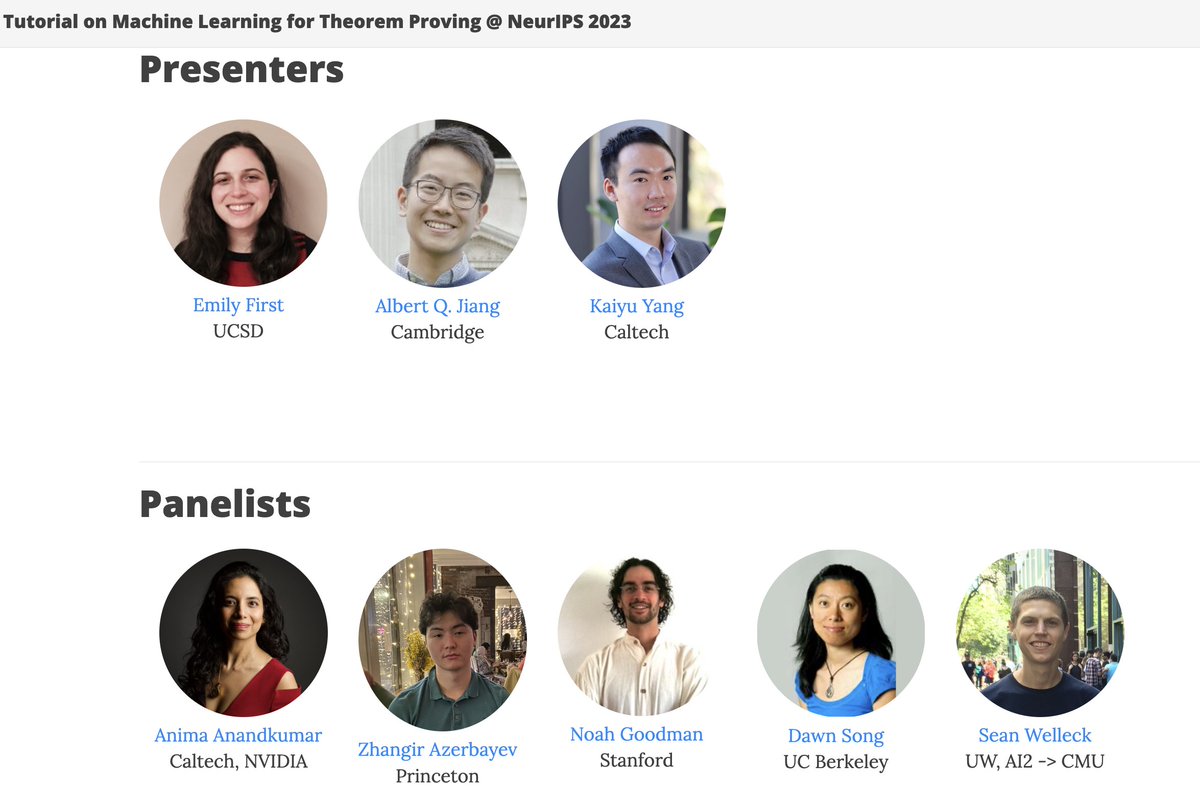

Going to NeurIPS? Interested in AI4Maths? Come to the Machine Learning for Theorem Proving tutorial on 11 Dec! Emily, @KaiyuYang4, and I will be presenting how machine learning can prove theorems (in Coq, Isabelle, and Lean!). Panel is stunning.

1

20

110

Super late to the party, but DSP was accepted to ICLR for an oral presentation (notable top 5%)! Let's chat when in Kigali! We updated the paper according to reviews and released the code for reproduction. Paper: Code:

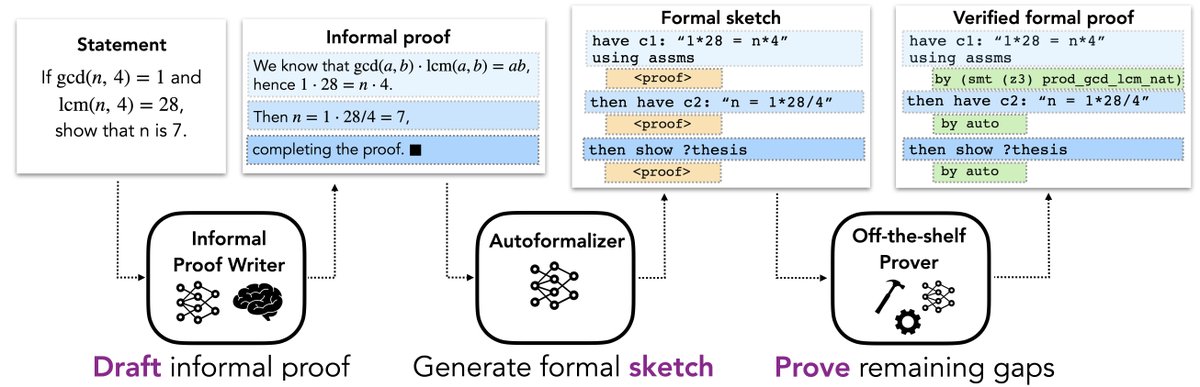

Large language models can write informal proofs, translate them into formal ones, and achieve SoTA performance in proving competition-level maths problems! LM-generated informal proofs are sometimes more useful than the human ground truth 🤯. Preprint: 🧵

6

16

89

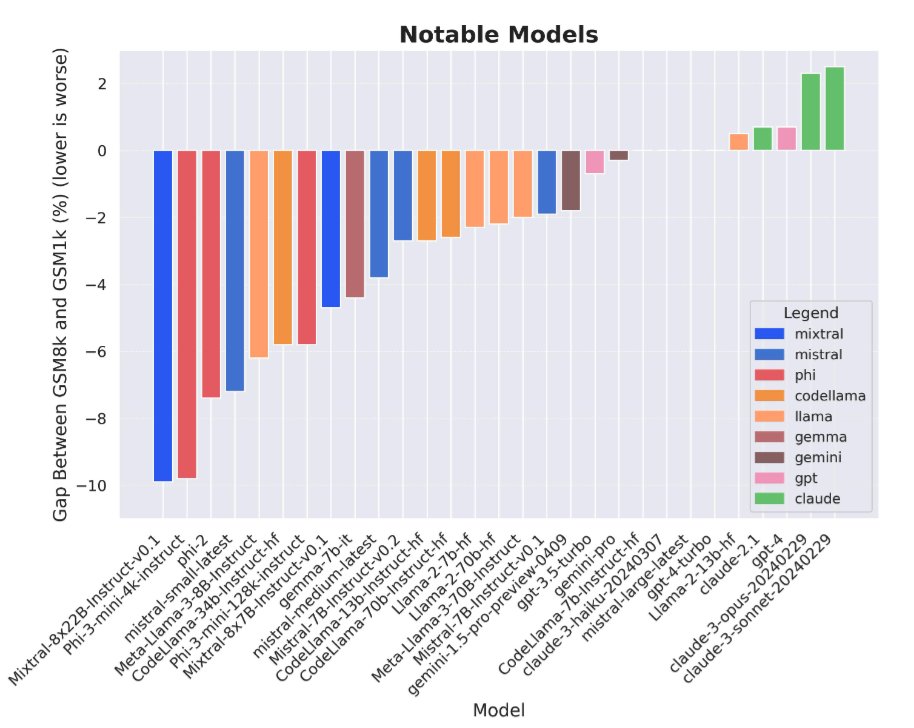

Nice paper! Some surprising highlights: 1. Mixtral 8x22B is ~GPT4-turbo level on GSM8K and GSM1K. Mistral large is better on both. 2. On GSM1K, Mixtral-8x22B-Instruct (84.3%) > claude-2 (83.6%) >> claude-3-haiku (79.1%) >> claude-3-sonnet (72.4%) 🤔 Also worth highlighting how

Data contamination is a huge problem for LLM evals right now. At Scale, we created a new test set for GSM8k *from scratch* to measure overfitting and found evidence that some models (most notably Mistral and Phi) do substantially worse on this new test set compared to GSM8k.

2

21

80

Thor was accepted to NeurIPS. It's my first paper during my PhD. It's towards a direction I really want to push (conjecturing). I feel good.

Language models are bad at retrieving useful premises from large databases for theorem proving, mainly because they're limited by a small context window. We use symbolic tools to overcome this difficulty, boosting proof rates from 39% to 57%. Thor: 1/

4

6

79

3/3 papers accepted at NeurIPS. Albert’s last batch of papers in PhD. A real Fibonacci soup of submissions because they have been rejected 0 times, 1 time, and 2 times. Congratulations and gratitude to @andylolu24 @ZiarkoAlicja Bartosz Piotrowski @WendaLi8 @PiotrRMilos @Mateja_Jamnik!

6

2

70

If you're a mathematician interested in automatic formalization, or a machine learning practitioner interested in formal math, come to this workshop in April! I'm very honoured to organise it with Jarod, Dan, Kim and @wellecks! Apply:

2

12

68

Exciting news: the article "Evaluating language models for mathematics through interactions" by @katie_m_collins and me is published in the Proceedings of the National Academy of Sciences! Check out this original thread by Katie:

Evaluating large language models is hard, particularly for mathematics. To better understand LLMs, it makes sense to harness *interactivity* - beyond static benchmarks. Excited to share a new working paper 1/

1

5

60

Want to tackle some of the challenges here? Apply for an AI for math grant: Web form proposal deadline 10 Jan!

🚀 Excited to share our position paper: "Formal Mathematical Reasoning: A New Frontier in AI"! 🔗 LLMs like o1 & o3 have tackled hard math problems by scaling test-time compute. What's next for AI4Math? We advocate for formal mathematical reasoning,

1

11

47

At #ICLR2023 in Kigali! Come to our oral session on Tuesday afternoon for the paper Draft, Sketch, and Prove: Guiding Formal Theorem Provers with Informal Proofs. DM for grabbing coffee/meal to chat about AI for maths, reasoning, large and small LMs!

0

7

33

Keep these posts to LinkedIn plz.

Nobel Prize is NOT about h-index or citations. It is about the emergence of big new fields. So many posts discuss Nobel awardees. And so many misunderstand the Nobel Prize. 📍 A bit of clarification from my side: 1⃣ Nobel Prize is NOT about how useful your work is. It’s about

1

0

38

What Christian had in mind when he suggested the name | What I heard

Magnushammer - the mythical weapon of the supreme transformer - beats sledgehammer by a large margin for Isabelle proof automation, improves Thor! Many thanks to @s_tworkowski @Yuhu_ai_, @PiotrRMilos et al for the great work:

2

9

36

Giving a talk on evaluating large language models for mathematics through interactions (work co-led with @katie_m_collins) on Thursday. In the same session is the one and only @ChrSzegedy!

0

3

34

Big thanks to the amazing team @wellecks @JinPZhou @jiachengliu1123 @WendaLi8 @tlacroix6 @Mateja_Jamnik and @Yuhu_ai_ @GuillaumeLample! We have a team photo during AITP this year which I saved until this very moment (with @ChrSzegedy sipping beer in the background).

2

3

32

AI for maths workshop at ICML with challenge tracks (incl. autoformalization) in Vienna! Now what should my talk focus on 🤔

Excited to announce the AI for Math Workshop at #ICML2024 @icmlconf! Join us for groundbreaking discussions on the intersection of AI and mathematics. 🤖🧮 📅 Workshop details: 📜 Submit your pioneering work: 🏆 Take on our

1

5

31

Panel starting!

📢 Can't wait to see you at the 3rd #MathAI Workshop in the LLM Era at #NeurIPS2023! ⏰ 8:55am - 5:00pm, Friday, Dec 15. 📍 Room 217-219. 🔗 📽️ Exciting Lineup: ⭐️ Six insightful talks by @KristinLauter, @BaraMoa, @noahdgoodman,

1

6

31

In two days, I translated ~150 theorems from Lean to Isabelle (all of the validation set from @KunhaoZ @jessemhan @spolu's except a huge bunch which I found too difficult and threw to @WendaLi8). I'm tired and happy. Some thoughts: 1/n

2

4

28

Super happy to contribute a very small part to Llemma. Let's move open model scaling perfs completely above closed ones!

We release Llemma: open LMs for math trained on up to 200B tokens of mathematical text. The performance of Llemma 34B approaches Google's Minerva 62B despite having half the parameters. Models/data/code: Paper: More ⬇️

0

3

29

Pre-trained 7B outperforming LLaMA2 13B on all metrics. Apache 2.0.

At @MistralAI we're releasing our very first model, the best 7B in town (outperforming Llama 13B on all metrics, and good at code), Apache 2.0. We believe in open models and we'll push them to the frontier. Very proud of the team!

2

8

28

OK, 30.3% is not SOTA on miniF2F test/Lean. In May 2022, we had 41% with HTPS, and 35% with only supervised training:

InternLM-Math: Open Math Large Language Models Toward Verifiable Reasoning. Obtains open-sourced SotA performance in various benchmarks including GSM8K, MATH, Hungary math exam, and MiniF2F. repo: abs:

2

1

29

Organising the MATH-AI workshop @NeurIPSConf on 15 Dec in New Orleans this year! We have a fantastic line-up of speakers and panelists (updated). Please consider sharing your work in AI4Math and Math4AI! Website: Paper submission ddl: 29 Sept

We're organizing the 3rd #MathAI workshop at @NeurIPSConf #NeurIPS. 🚀 Excited for our speakers on AI for mathematical reasoning, @guyvdb, @noahdgoodman, @wtgowers, @BaraMoa, @KristinLauter, @TaliaRinger, @paul_smolensky, Armando Solar-Lezama, @Yuhu_ai_, @ericxing, @denny_zhou.

0

9

27

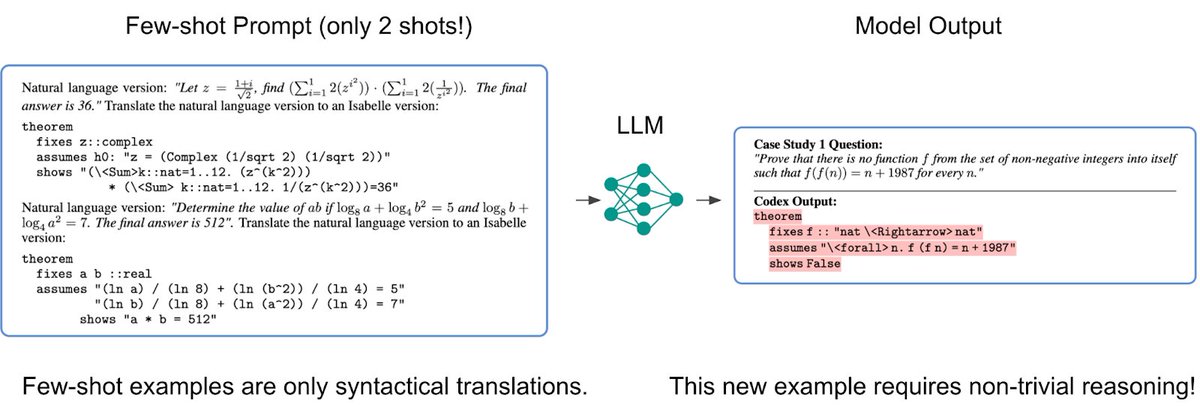

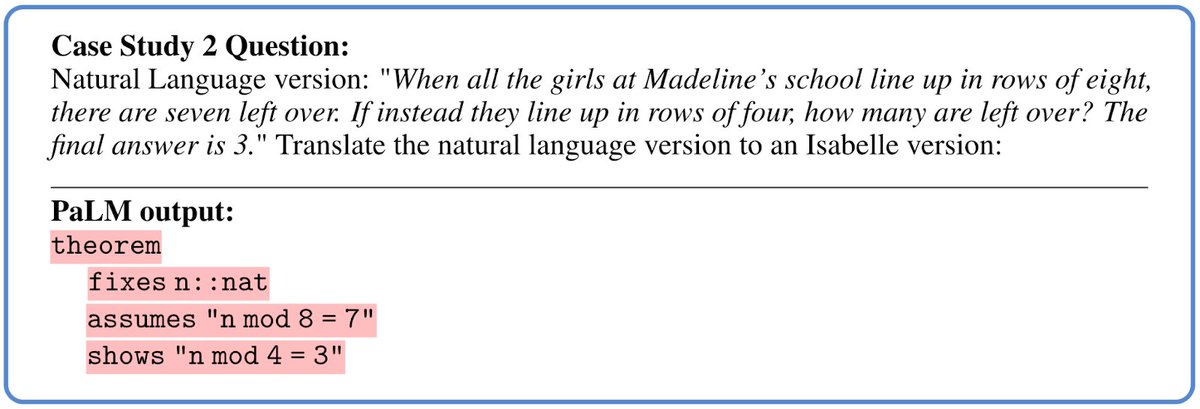

Autoformalization work accepted to NeurIPS. I was surprised when @TaliaRinger listed autoformalization as "one of the things most AI for proofs people are doing" despite there being only one or two published works on it with deep learning.

After showing a few examples, large language models can translate natural language mathematical statements into formal specifications. We autoformalize 4K theorems as new data to train our neural theorem prover, achieving SOTA on miniF2F! 1/ Paper:

2

5

26

My internship project #2 went into this 💻 that was a blast!

Using HyperTree Proof Search we created a new neural theorem solver that was able to solve 5x more International Math Olympiad problems than any previous AI system & best previous state-of-the-art systems on miniF2F & Metamath. More in our new post ⬇️

1

1

24

Legend has it Tony is driving to New Orleans because no airline allowed him to carry 10 poster rolls on the plane. Congratulations! Great to work together!

Hello #NeurIPS2022! I'm at New Orleans and will be here until Thursday morning (Dec 1). Let's brainstorm AI for math, LLMs, Reasoning 🤯🤯! We'll present 8 papers (1 oral and 7 posters) + 2 at workshops (MATHAI and DRL). Featuring recent breakthroughs in AI for math! See 👇

1

0

22

The Evaluating Language Models for Mathematics through Interactions preprint has been updated with annotated behaviour taxonomy, key findings, and more! 🧵 of exciting additions.

Evaluating large language models is hard, particularly for mathematics. To better understand LLMs, it makes sense to harness *interactivity* - beyond static benchmarks. Excited to share a new working paper 1/

1

4

22

I had an extremely enjoyable first year of PhD. What a privilege to be supervised by @Mateja_Jamnik and mentored by @Yuhu_ai_ and @WendaLi8 to work on a topic I enjoy (machine learning x mathematics). Really cannot hope for a better support team.

2

3

21

@wtgowers And you have it! The recent “scaling laws hitting a wall” is an empirical observation: under the classical pretrain-finetune-align regime of LLM training, after a certain threshold, multiplying the compute pumped into the model yields negligible benefits. This violates the.

2

1

20

MATH-AI workshop tomorrow!

📢 Can't wait to see you at the 3rd #MathAI Workshop in the LLM Era at #NeurIPS2023! ⏰ 8:55am - 5:00pm, Friday, Dec 15. 📍 Room 217-219. 🔗 📽️ Exciting Lineup: ⭐️ Six insightful talks by @KristinLauter, @BaraMoa, @noahdgoodman,

0

3

18

Theorems for free with autoformalization!

After showing a few examples, large language models can translate natural language mathematical statements into formal specifications. We autoformalize 4K theorems as new data to train our neural theorem prover, achieving SOTA on miniF2F! 1/ Paper:

0

3

16