Agentica Project

@Agentica_

Followers

3K

Following

125

Media

24

Statuses

72

Building generalist agents that scale @BerkeleySky

San Francisco, CA

Joined January 2025

🚀 Introducing DeepSWE 🤖: our fully open-sourced, SOTA software engineering agent trained purely with RL on top of Qwen3-32B. DeepSWE achieves 59% on SWEBench-Verified with test-time scaling (and 42.2% Pass@1), topping the SWEBench leaderboard for open-weight models. 💪DeepSWE

15

65

347

RT @AlpayAriyak: Excited to introduce DeepSWE-Preview, our latest model trained in collaboration with @Agentica_. Using only RL, we increa…

0

4

0

RT @WolframRvnwlf: Let's give a big round of applause for an amazing open-source release! They're not just sharing the model's weights; the…

0

2

0

RT @ChenguangWang: 🚀 Introducing rLLM: a flexible framework for post-training language agents via RL. It's also the engine behind DeepSWE,…

0

4

0

RT @sijun_tan: The first half of 2025 is all about reasoning models. The second half? It’s about agents. At Agentica, we’re thrilled to la…

0

9

0

RT @koushik77: We believe in experience-driven learning in the SKY lab. Hybrid verification plays an important role.

0

4

0

RT @StringChaos: 🚀 Introducing DeepSWE: Open-Source SWE Agent. We're excited to release DeepSWE, our fully open-source software engineerin…

0

12

0

RT @michaelzluo: 🚀 The era of overpriced, black-box coding assistants is OVER. Thrilled to lead the @Agentica_ team in open-sourcing and tr…

0

13

0

RT @togethercompute: Announcing DeepSWE 🤖: our fully open-sourced, SOTA software engineering agent trained purely with RL on top of Qwen3-3…

0

78

0

@Alibaba_Qwen (8/n) Acknowledgements. DeepSWE is the result of an incredible collaboration between @agentica and @togethercompute. Agentica’s core members hail from @SkyLab and @BAIR, bringing together cutting-edge research and real-world deployment. More details on rLLM are coming soon in our.

1

1

15

(7/n) Acknowledgements. DeepSWE stands on the shoulders of giants: it's trained from Qwen3-32B. Huge kudos to the @Alibaba_Qwen team for open-sourcing such a powerful model!

1

0

14

(6/n) Test-Time Scaling. We studied two approaches to boost agent performance at test time: 📈 Scaling Context Length: We expanded max context from 16K → 128K tokens. Performance improved, with ~2% gains beyond 32K, reaching 42.2% Pass@1. 🎯 Scaling Agent Rollouts: We

1

1

10

(3/n) DeepSWE is trained directly with pure reinforcement learning, without distillation. In just 200 steps of training, its Pass@1 SWE-Bench-Verified score rises from 23% → 42.2%. Remarkably, RL improves DeepSWE’s generalization over time.

1

1

14

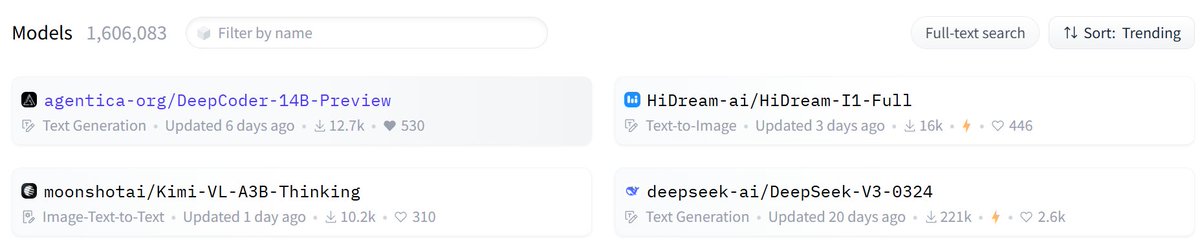

We're trending on @huggingface models today! 🔥 Huge thanks to our amazing community for your support. 🙏

2

6

47

RT @Yuchenj_UW: UC Berkeley open-sourced a 14B model that rivals OpenAI o3-mini and o1 on coding! They applied RL to Deepseek-R1-Distilled…

0

398

0

RT @ralucaadapopa: Our team has open sourced our reasoning model that reaches o1 and o3-mini level on coding and math: DeepCoder-14B-Previ…

0

2

0