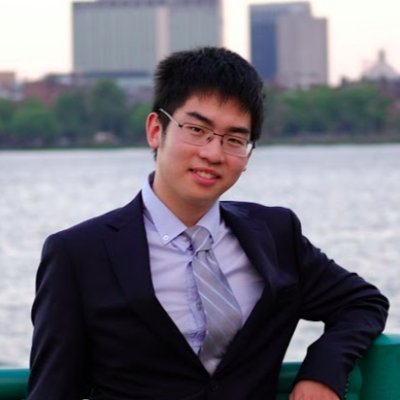

Naman Jain

@StringChaos

Followers: 3K · Following: 5K · Media: 50 · Statuses: 539

PhD @UCBerkeley ; Research @cursor_ai | Projects - LiveCodeBench, DeepSWE, R2E-Gym, GSO, Syzygy, LMArena Coding | Past: @MetaAI @AWS @MSFTResearch @iitbombay

Berkeley

Joined March 2018

🚀 Introducing DeepSWE: Open-Source SWE Agent We're excited to release DeepSWE, our fully open-source software engineering agent trained with pure reinforcement learning on Qwen3-32B. 📊 The results: 59% on SWE-Bench-Verified with test-time scaling (42.2% Pass@1) - new SOTA

🚀 Introducing DeepSWE 🤖: our fully open-sourced, SOTA software engineering agent trained purely with RL on top of Qwen3-32B. DeepSWE achieves 59% on SWEBench-Verified with test-time scaling (and 42.2% Pass@1), topping the SWEBench leaderboard for open-weight models. 💪DeepSWE

3

12

79

Semantic search improves our agent's accuracy across all frontier models, especially in large codebases where grep alone falls short. Learn more about our results and how we trained an embedding model for retrieving code.

65

98

1K

We added an LLM-judge-based hack detector to our code optimization evals and found that models make non-idiomatic code changes in up to 30% of the problems 🤯

Tests certify functional behavior; they don’t judge intent. GSO, our code optimization benchmark, now combines tests with a rubric-driven HackDetector to identify models that game the benchmark. We found that up to 30% of a model’s attempts are non-idiomatic reward hacks, which

0

2

7

ok composer-1 is pretty nuts, and the code it writes is quite nice. probably my new daily driver for many things. not quite as galaxy-brain as codex, but it's SO fast that you can use it sync instead of async, and very quickly iterate on fixes. follows instructions very well

27

20

540

Composer is a new model we built at Cursor. We used RL to train a big MoE model to be really good at real-world coding, and also very fast. https://t.co/DX9bbalx0B Excited for the potential of building specialized models to help in critical domains.

53

73

785

Some exciting news to share - I joined Cursor! We just shipped a model 🐆 It's really good - try it out! https://t.co/kc1gmT3SsM I left OpenAI after 3 years there and moved to Cursor a few weeks ago. After working on RL for my whole career, it was incredible to see RL come alive

cursor.com

Built to make you extraordinarily productive, Cursor is the best way to code with AI.

43

18

771

I have one PhD intern opening to do research as a part of a model training effort at the FAIR CodeGen team (latest: Code World Model). If interested, email me directly and apply at

metacareers.com

Meta's mission is to build the future of human connection and the technology that makes it possible.

7

27

234

✂️Introducing ProofOptimizer: a training and inference recipe for proof shortening! 😰AI-written formal proofs can be long and unreadable: Seed-Prover's proof of IMO '25 P1 is 16x longer in Lean vs. English. Our 7B shortens proofs generated by SoTA models by over 50%! 🧵⬇️

6

36

204

Hybrid Reinforcement (HERO): When Reward Is Sparse, It’s Better to Be Dense 🦸‍♂️ 💪 📝: https://t.co/VAXtSC4GGp - HERO bridges 0–1 verifiable rewards and dense reward models into one 'hybrid' RL method - Tackles the brittleness of binary signals and the noise of pure reward

4

53

324

If you are at COLM 2025 and interested in code gen, agents, reasoning, and/or infra, reach out to join an @a16z x @cursor_ai luncheon tomorrow (Friday) near the conference. Cc @chsrbrts @srush_nlp @StringChaos

1

4

14

When making AI benchmarks, people often think you need to make the instructions as precise as possible, otherwise it's unfair to the AI system. This causes us to overestimate AI capabilities, because the real world isn't precise.

8

7

84

At COLM presenting R2E-Gym at the poster session tomorrow afternoon! Reach out if you want to chat about coding agents, post-training, and evals!

Excited to release R2E-Gym - 🔥 8.1K executable environments using synthetic data - 🧠 Hybrid verifiers for enhanced inference-time scaling - 📈 51% success-rate on the SWE-Bench Verified - 🤗 Open Source Data + Models + Trajectories 1/

0

0

12

(🧵) Today, we release Meta Code World Model (CWM), a 32-billion-parameter dense LLM that enables novel research on improving code generation through agentic reasoning and planning with world models. https://t.co/BJSUCh2vtg

60

313

2K

Congratulations to Jesse, Jared @jdlichtman, and Christian @ChrSzegedy on this great result! (They told me and Terry about it weeks ago, but released it while I was giving a lecture series in Italy last week, followed by speaking at a conference this week at Harvard -- where I

Today we're announcing Gauss, our first autoformalization agent that just completed Terry Tao & Alex Kontorovich's Strong Prime Number Theorem project in 3 weeks—an effort that took human experts 18+ months of partial progress.

8

29

196

Excited to share some of the work we’ve been doing to improve Tab using our data on how people respond to suggestions!

We've trained a new Tab model that is now the default in Cursor. This model makes 21% fewer suggestions than the previous model while having a 28% higher accept rate for the suggestions it makes. Learn more about how we improved Tab with online RL.

9

12

295

Quick post-summer GSO update. Several new models are now live on the leaderboard!! 🧵👇

12

8

66

Interested in building and benchmarking deep research systems? Excited to introduce DeepScholar-Bench, a live benchmark for generative research synthesis, from our team at Stanford and Berkeley! 🏆Live Leaderboard https://t.co/gWuylXVlkJ 📚 Paper: https://t.co/BbtsoZHlSh 🛠️

1

43

175

MoE layers can be really slow. When training our coding models @cursor_ai, they ate up 27–53% of training time. So we completely rebuilt it at the kernel level and transitioned to MXFP8. The result: 3.5x faster MoE layer and 1.5x end-to-end training speedup. We believe our

29

105

880

After three intense months of hard work with the team, we made it! We hope this release can help drive the progress of Coding Agents. Looking forward to seeing Qwen3-Coder continue creating new possibilities across the digital world!

>>> Qwen3-Coder is here! ✅ We’re releasing Qwen3-Coder-480B-A35B-Instruct, our most powerful open agentic code model to date. This 480B-parameter Mixture-of-Experts model (35B active) natively supports 256K context and scales to 1M context with extrapolation. It achieves

66

85

993

We just released the evaluation of LLMs on the 2025 IMO on MathArena! Gemini scores best, but is still unlikely to achieve the bronze medal with its 31% score (13/42). 🧵(1/4)

13

40

220