Yong Jae Lee

@yong_jae_lee

Followers

965

Following

328

Media

8

Statuses

92

Associate Professor, Computer Sciences, UW-Madison. I am a computer vision and machine learning researcher.

Madison, WI

Joined April 2018

RT @sicheng_mo: #ICCV2025 Introducing X-Fusion: Introducing New Modality to Frozen Large Language Models. It is a novel framework that adap….

0

24

0

RT @MuCai7: LLaVA-Prumerge, the first work of Visual Token Reduction for MLLM, finally got accepted after being cited 146 times since last….

0

12

0

RT @wregss: Training text-to-image models?. Want your models to represent cultures across the globe but don't know how to systematically ev….

0

11

0

Thank you @_akhaliq for sharing our work!.

0

0

7

@AniSundar18 Spurious patterns associated w/ real images (eg compression artifacts) often mislead the detector. We show that dropping them by last layer re-training significantly improves robustness to misleading patterns. Super simple method that works really well! .

0

0

0

Excited to share our new work on learning a visual tool selection agent using RL! Our agent composes external tools to solve complex visual reasoning tasks. Led by my fantastic student @huang43602, w/ key contributions from @AniSundar18 & team.

🚨Our new paper: VisualToolAgent (VisTA) 🚨. Visual agents learn to use tools—no prompts or supervision! . ✅RL via GRPO.✅Decoupled agent/reasoner (e.g. GPT-4o) .✅Strong OoD generalization . 📊ChartQA, Geometry3K, BlindTest, MathVerse.🔗. 🧵👇.

1

0

6

Check out our new paper on fake image detection (ICML 2025), led by my great student @AniSundar18 !.

🚨 New paper at ICML 2025! 🚨.Stay-Positive: A Case for Ignoring Real Image Features in Fake Image Detection. Detectors often spuriously latch onto patterns from real images. 💡Simple Fix !. 📄 Project page: With @yong_jae_lee. Details in thread 🧵👇.

1

0

2

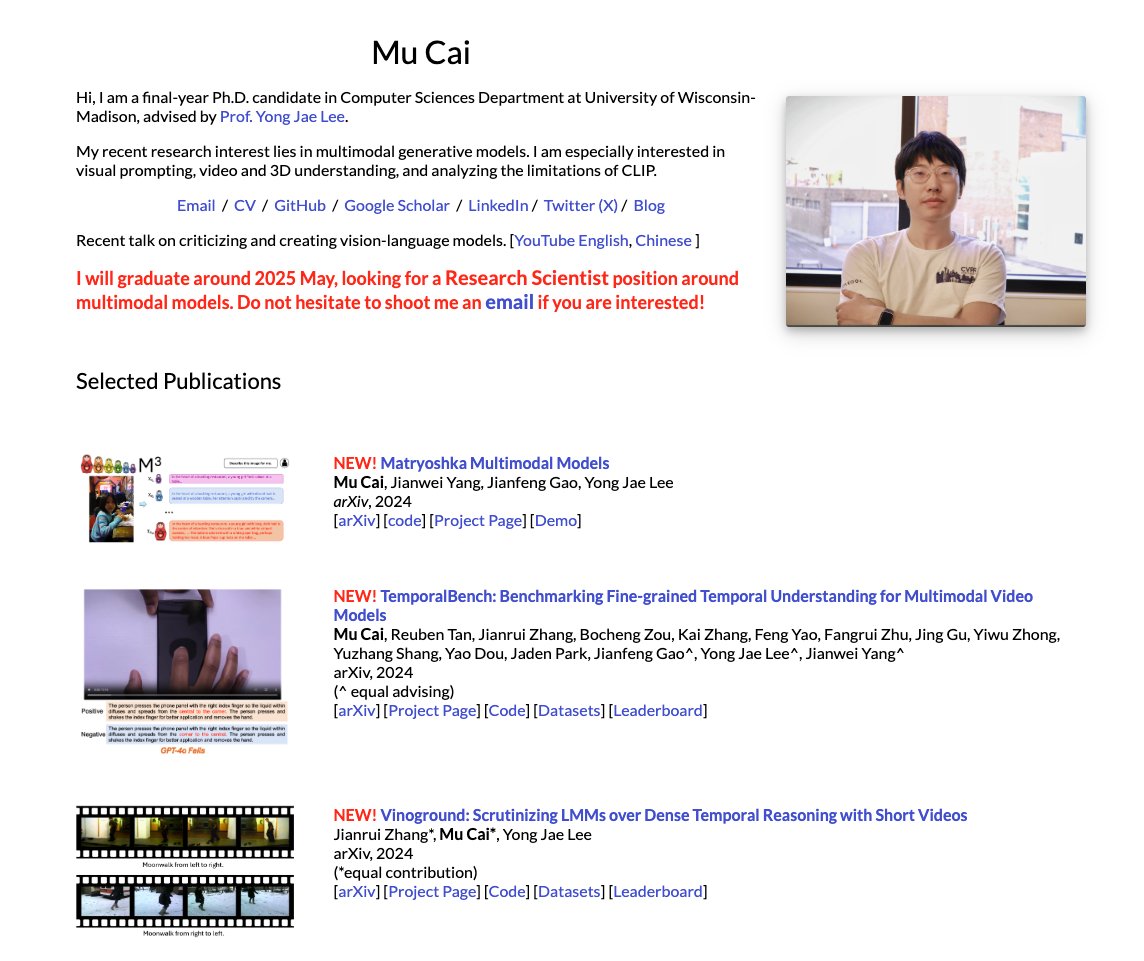

Congratulations Dr. Mu Cai @MuCai7! Mu is my 8th PhD student and first to start in my group at UW–Madison after my move a few years ago. He made a number of important contributions in multimodal models during his PhD, and recently joined Google DeepMind. I will miss you a lot Mu!

12

8

235

RT @jw2yang4ai: 🚀 Excited to announce our 4th Workshop on Computer Vision in the Wild (CVinW) at @CVPR 2025!.🔗 ⭐We….

0

26

0

RT @ErnestRyu: Public service announcement: Multimodal LLMs are really bad at understanding images with *precision*. .

0

11

0

Congratulations again @MuCai7!! So well deserved. I will miss having you in the lab.

I am thrilled to join @GoogleDeepMind as a Research Scientist and continue working on multimodal research!

1

0

46

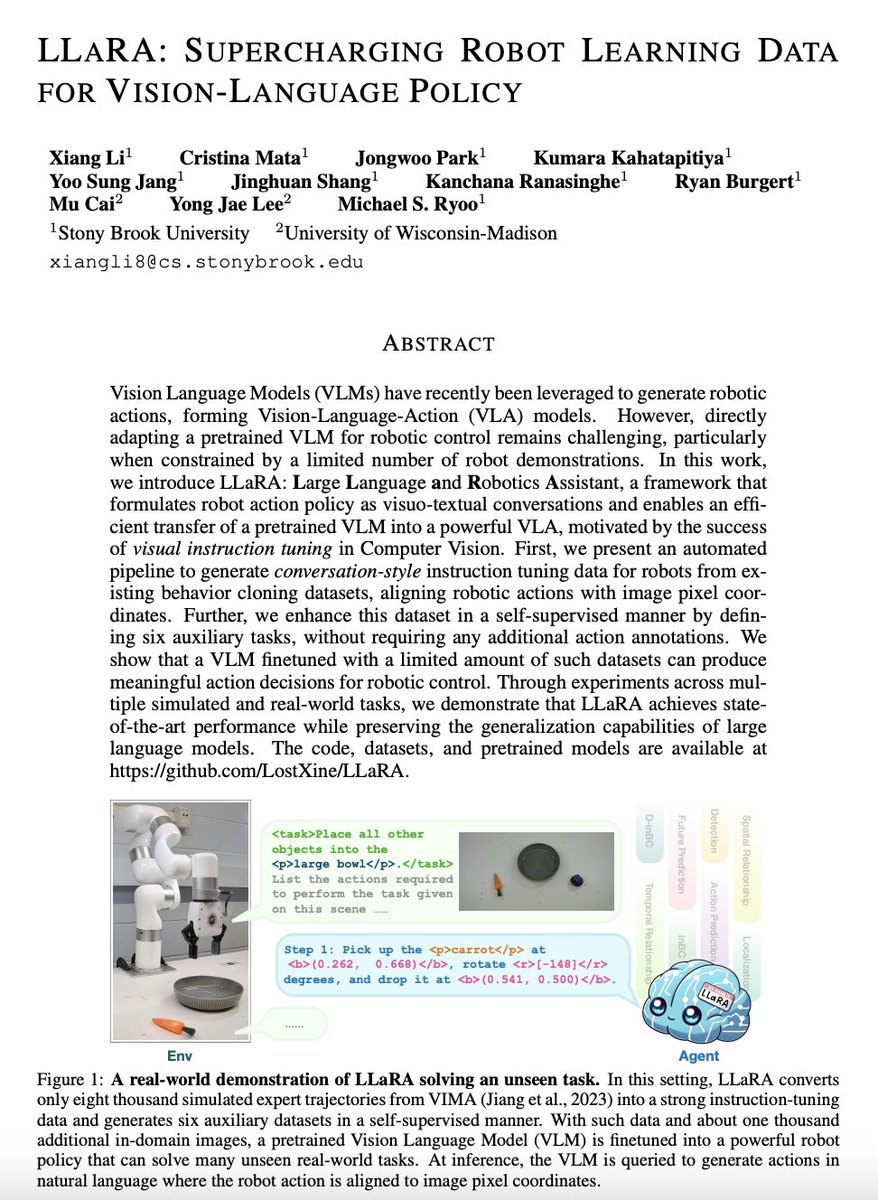

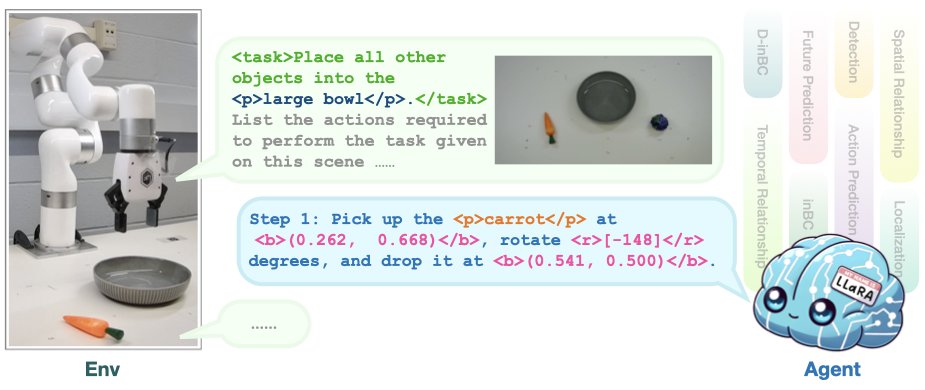

Check out our new ICLR 2025 paper, LLaRA, which transforms a pretrained vision-language model into a robot vision-language-action policy! Joint work with @XiangLi54505720, @ryoo_michael, et al from Stony Brook U, and @MuCai7.

github.com

[ICLR'25] LLaRA: Supercharging Robot Learning Data for Vision-Language Policy - LostXine/LLaRA

(1/5).Excited to present our #ICLR2025 paper, LLaRA, at NYC CV Day!.LLaRA efficiently transforms a pretrained Vision-Language Model (VLM) into a robot Vision-Language-Action (VLA) policy, even with a limited amount of training data. More details are in the thread. ⬇️

2

6

49

RT @xyz2maureen: 🔥Poster: Fri 13 Dec 4:30 pm - 7:30 pm PST (West).It is the first time for me try to sell a new concept that I believe but….

0

14

0

If you're hiring in multimodal models, Mu has my strongest recommendation! His papers in this space cover visual prompting (ViP-LLaVA), efficiency (Matryoshka Multimodal Models), enhancing compositional reasoning (CounterCurate), video understanding (VinoGround,TemporalBench),. .

🚨 I’ll be at #NeurIPS2024! 🚨On the industry job market this year and eager to connect in person!.🔍 My research explores multimodal learning, with a focus on object-level understanding and video understanding. 📜 3 papers at NeurIPS 2024:. Workshop on Video-Language Models.📅

1

5

51

RT @MuCai7: 🚨 I’ll be at #NeurIPS2024! 🚨On the industry job market this year and eager to connect in person!.🔍 My research explores multimo….

0

22

0

RT @MuCai7: I am not in #EMNLP2024 but @bochengzou is in Florida!. Go checkout vector graphics, a promising format that is completely diff….

0

1

0

RT @jw2yang4ai: 🔥Check out our new LMM benchmark TemporalBench! . Our world is temporal, dynamic and physical, which can be only captured i….

0

22

0