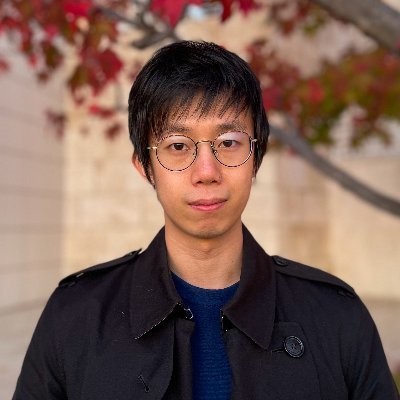

Zhuang Liu

@liuzhuang1234

Followers: 11K · Following: 3K · Media: 82 · Statuses: 477

Assistant Professor @PrincetonCS. deep learning, vision, models. previously @MetaAI, @UCBerkeley, @Tsinghua_Uni

Princeton, NJ

Joined April 2016

To summarize: Derf is a simple, practical drop-in layer for stronger normalization-free Transformers. code: https://t.co/BvQgCyGWyi paper: https://t.co/iIdcWVQoWd Led by @mingzhic2004, and joint work with @TaimingLu, @JiachenAI, and @_mingjiesun.

arxiv.org

Although normalization layers have long been viewed as indispensable components of deep learning architectures, the recent introduction of Dynamic Tanh (DyT) has demonstrated that alternatives are...

Is Derf just fitting better? Surprisingly, no. When we measure training loss in eval mode on the training set:
- Norm-based models have the lowest train loss
- Derf has a higher train loss
- Yet Derf has better test performance

This suggests Derf’s gains mainly come from

Derf matches or outperforms normalization layers, and consistently beats DyT, with the same training recipe, across domains:
1. ImageNet - higher top-1 in ViT-B/L
2. Diffusion Transformers - lower FID across the DiT family
3. Genomics (HyenaDNA, Caduceus) - higher DNA

Guided by these properties, we run an expansive function search over many candidates that satisfy them (e.g., transformed erf, tanh, arcsinh, log-type functions, etc.). Across ViT classification and DiT diffusion performance, erf with a learnable shift and scale consistently

Before designing Derf, we asked: what makes a point-wise function a good normalization replacement? What’s needed for stable convergence? We identify 4 key properties:
- Zero-centeredness
- Boundedness
- Center sensitivity (responsive near 0)
- Monotonicity

We found functions
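
The four properties can be probed numerically. The sketch below is our own illustration, not code from the paper: the grid, the far-away probe points, and every threshold (`tol`, the 1.5x saturation factor, the 0.1 slope cutoff) are assumptions chosen for readability. Apart from `tanh` and `erf`, the candidates are hypothetical counterexamples picked so that each one breaks exactly one property.

```python
import math

def check_properties(f, grid_max=10.0, steps=2001, tol=1e-6):
    """Heuristic numerical tests for the four properties (thresholds are our own)."""
    xs = [-grid_max + 2 * grid_max * i / (steps - 1) for i in range(steps)]
    ys = [f(x) for x in xs]
    grid_peak = max(abs(y) for y in ys)
    # Probe far outside the grid; a bounded f should not grow much beyond grid_peak.
    far_peak = max(abs(f(s * m)) for s in (-1.0, 1.0) for m in (1e2, 1e4, 1e6))
    h = 1e-4
    return {
        "zero_centered": abs(f(0.0)) < tol,                          # f(0) = 0
        "bounded": far_peak <= 1.5 * grid_peak,                      # output saturates
        "center_sensitive": (f(h) - f(-h)) / (2 * h) > 0.1,          # clear slope at 0
        "monotonic": all(b >= a - tol for a, b in zip(ys, ys[1:])),  # non-decreasing
    }

CANDIDATES = {
    "tanh": math.tanh,
    "erf": math.erf,
    "sigmoid": lambda x: 0.5 * (1.0 + math.tanh(0.5 * x)),  # overflow-safe logistic
    "identity": lambda x: x,
    "cubic_saturating": lambda x: x**3 / (1.0 + abs(x) ** 3),
    "sin": math.sin,
}

RESULTS = {name: check_properties(f) for name, f in CANDIDATES.items()}
for name, props in RESULTS.items():
    failed = [p for p, ok in props.items() if not ok]
    print(f"{name:17s} fails: {failed or 'none'}")
```

With these thresholds, `tanh` and `erf` pass all four checks, while `sigmoid` fails zero-centeredness, `identity` fails boundedness, `cubic_saturating` fails center sensitivity (its slope at 0 is essentially zero), and `sin` fails monotonicity.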

Like DyT, Derf is a statistics-free, point-wise layer that doesn’t rely on activation statistics. It’s just a shifted and scaled Gauss error function with a few learnable parameters, dropped in wherever you’d normally use LayerNorm or RMSNorm.
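
A minimal pure-Python sketch of what "statistics-free, point-wise" means in practice. We assume the form `gamma * erf(alpha * x + shift) + beta`, with `alpha` and `shift` as learnable scalars and `gamma`/`beta` as per-channel vectors; the class name `DerfSketch`, the initial values, and the list-based layout are hypothetical and not taken from the released code. For contrast, `layer_norm` recomputes a mean and variance over the whole feature vector.

```python
import math

class DerfSketch:
    """Point-wise Derf-style layer, ASSUMED form: gamma * erf(alpha * x + shift) + beta."""

    def __init__(self, dim, alpha=0.5, shift=0.0):
        self.alpha = alpha          # learnable scalar scale (init value is a guess)
        self.shift = shift          # learnable scalar shift (init value is a guess)
        self.gamma = [1.0] * dim    # per-channel affine scale, as in LayerNorm
        self.beta = [0.0] * dim     # per-channel affine bias, as in LayerNorm

    def __call__(self, x):
        # Each output depends only on its own input element: no mean or variance.
        return [g * math.erf(self.alpha * v + self.shift) + b
                for v, g, b in zip(x, self.gamma, self.beta)]


def layer_norm(x, eps=1e-5):
    """LayerNorm without affine params, for contrast: uses statistics of the whole vector."""
    mu = sum(x) / len(x)
    var = sum((v - mu) ** 2 for v in x) / len(x)
    return [(v - mu) / math.sqrt(var + eps) for v in x]


derf = DerfSketch(dim=4)
x = [1.0, -2.0, 0.5, 3.0]
x_outlier = [1.0, -2.0, 0.5, 30.0]  # only the last channel changes

print(derf(x)[:3] == derf(x_outlier)[:3])              # True
print(layer_norm(x)[:3] == layer_norm(x_outlier)[:3])  # False
```

Changing one channel moves every LayerNorm output, because the mean and variance shift, while the Derf-style outputs for the other channels are untouched. Training `alpha`, `shift`, `gamma`, and `beta` requires an autodiff framework, which this sketch leaves out.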

Earlier this year, in our "Transformers without Normalization" paper, we showed that a Dynamic tanh (DyT) function can replace norm layers in Transformers. Derf pushes this idea further.
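
For reference, DyT as described in "Transformers without Normalization" computes `gamma * tanh(alpha * x) + beta` element-wise, with `alpha` a learnable scalar and `gamma`, `beta` per-channel. Below is a minimal pure-Python sketch of that forward pass only; `DyTSketch` is a hypothetical name, `alpha_init=0.5` is our assumed default, and gradients and training are omitted.

```python
import math

class DyTSketch:
    """DyT(x) = gamma * tanh(alpha * x) + beta, with alpha a learnable scalar."""

    def __init__(self, dim, alpha_init=0.5):
        self.alpha = alpha_init     # learnable scalar; 0.5 is an assumed default
        self.gamma = [1.0] * dim    # per-channel scale
        self.beta = [0.0] * dim     # per-channel bias

    def __call__(self, x):
        return [g * math.tanh(self.alpha * v) + b
                for v, g, b in zip(x, self.gamma, self.beta)]


dyt = DyTSketch(dim=3)
out = dyt([0.01, 2.0, 1000.0])
# Small inputs pass through almost linearly (about alpha * x); large ones saturate near 1.
print(out)
```

Replacing `math.tanh` with `math.erf` and adding a learnable shift inside the argument gives the shifted and scaled erf form that Derf builds on.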

Stronger Normalization-Free Transformers – new paper. We introduce Derf (Dynamic erf), a simple point-wise layer that lets norm-free Transformers not only work, but actually outperform their normalized counterparts.

Excited to work with new PhD students (Fall 2026) on multimodal models, AI for automated scientific research, and foundation model architectures at Princeton. If this resonates with you, please apply to the CS PhD program and mention my name.

Most people didn’t know this, but we had been using TPUs at *Facebook* as far back as 2020. Kaiming led the initial development of the TF and JAX codebase, and research projects like MAE, MoCo v3, ConvNeXt v2 and DiT were developed *entirely* on TPUs. Because we were the only

I keep seeing stuff about TPU, has anything materially new happened? There’s no evidence Google has ever trained a Gemini on non-TPU hardware, going years back to pre-GPT models like BERT. TPUs predate Nvidia’s own tensor cores. Anthropic (and Character, and SSI, and

I will join UChicago CS @UChicagoCS as an Assistant Professor in late 2026, and I’m recruiting PhD students in this cycle (2025 - 2026). My research focuses on AI & Robotics - including dexterous manipulation, humanoids, tactile sensing, learning from human videos, robot

After a year of team work, we're thrilled to introduce Depth Anything 3 (DA3)! 🚀 Aiming for human-like spatial perception, DA3 extends monocular depth estimation to any-view scenarios, including single images, multi-view images, and video. In pursuit of minimal modeling, DA3

I'm teaching a new "Intro to Modern AI" course at CMU this Spring: https://t.co/ptnrNmVPyf. It's an early-undergrad course on how to build a chatbot from scratch (well, from PyTorch). The course name has bothered some people – "AI" usually means something much broader in academic

Life update: I recently defended my PhD at CMU and started as a postdoctoral fellow at Princeton! Grateful to my advisors and all who supported me, and excited for this next chapter :)

Excited to share our lab’s first open-source release: LLM-Distillation-JAX. It supports practical knowledge distillation configurations (distillation strength, temperature, top-k/top-p) and is built on MaxText, designed for reproducible JAX/Flax training on both TPUs and GPUs.
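
The configuration knobs listed here map onto a common logit-distillation objective. The sketch below is a generic, Hinton-style formulation in pure Python and is not LLM-Distillation-JAX's API or implementation: reading "distillation strength" as the mixing weight `strength`, applying top-k/top-p to the teacher's tempered distribution before renormalizing, and the function names `truncate` and `kd_loss` are all our assumptions.

```python
import math

def softmax(logits, temperature=1.0):
    """Numerically stable softmax of logits / temperature."""
    m = max(logits)
    exps = [math.exp((z - m) / temperature) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def truncate(probs, top_k=None, top_p=None):
    """Keep the teacher's top-k and/or smallest top-p nucleus, then renormalize."""
    keep = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    if top_k is not None:
        keep = keep[:top_k]
    if top_p is not None:
        nucleus, cum = [], 0.0
        for i in keep:
            nucleus.append(i)
            cum += probs[i]
            if cum >= top_p:
                break
        keep = nucleus
    mass = sum(probs[i] for i in keep)
    kept = set(keep)
    return [p / mass if i in kept else 0.0 for i, p in enumerate(probs)]

def kd_loss(student_logits, teacher_logits, label,
            strength=0.5, temperature=2.0, top_k=None, top_p=None):
    """(1 - strength) * hard-label CE + strength * T^2 * KL(teacher_T || student_T)."""
    ce = -math.log(softmax(student_logits)[label])
    p_teacher = truncate(softmax(teacher_logits, temperature), top_k, top_p)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * (math.log(pt) - math.log(ps))
             for pt, ps in zip(p_teacher, p_student) if pt > 0.0)
    # The T^2 factor keeps the soft-target gradient scale roughly
    # independent of the temperature, as in Hinton-style distillation.
    return (1.0 - strength) * ce + strength * temperature ** 2 * kl


teacher = [2.0, 1.0, 0.1, -1.0]
student = [1.5, 0.5, 0.3, -0.5]
print(kd_loss(student, teacher, label=0, strength=0.7, temperature=2.0, top_k=2))
```

Truncating the teacher zeroes out its tail, so the KL term only pulls the student toward the teacher's most likely tokens; with `strength=0` the loss reduces to ordinary cross-entropy on the label.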

Out of curiosity, I analyzed academic/industry affiliations of first/last authors at ML confs. Some findings:
1. Pubs are increasing massively, academia leads the charge
2. The proportion of industry is falling, esp. first authors. Now 8x first authors are from academia.

I'm at ICCV in Hawaii this week with @TongPetersb, @JiachenAI, @_amirbar, and @liuzhuang1234 to present two papers (WebSSL + MetaMorph) from work done at Meta Fundamental AI Research! Please stop by our workshop invited talk + two posters to chat with us :D Scaling

arxiv.org

Visual Self-Supervised Learning (SSL) currently underperforms Contrastive Language-Image Pretraining (CLIP) in multimodal settings such as Visual Question Answering (VQA). This multimodal gap is...

Can visual SSL match CLIP on VQA? Yes! We show with controlled experiments that visual SSL can be competitive even on OCR/Chart VQA, as demonstrated by our new Web-SSL model family (1B-7B params) which is trained purely on web images – without any language supervision.

How can an AI model learn the underlying dynamics of a visual scene? We're introducing Trajectory Fields, a new way to represent video in 4D! It models the path of each pixel as a continuous 3D trajectory, which is parameterized by a B-spline function of time. This unlocks

Excited to share our latest work from the ByteDance Seed Depth Anything team — Trace Anything: Representing Any Video in 4D via Trajectory Fields 💻 Project Page: https://t.co/Q390WcWwG4 📄 Paper: https://t.co/NfxT260QWy 📦 Code: https://t.co/r2VbOHyRwL 🤖 Model:
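
To make "the path of each pixel as a continuous 3D trajectory, parameterized by a B-spline function of time" concrete, here is a small pure-Python sketch. The tweets do not give the spline degree, knot layout, or number of control points, so this assumes a textbook uniform cubic B-spline with time normalized to [0, 1]; `cubic_basis` and `trajectory_point` are hypothetical helper names, not the Trace Anything API.

```python
from typing import List, Tuple

Point3 = Tuple[float, float, float]

def cubic_basis(u: float) -> Tuple[float, float, float, float]:
    """Uniform cubic B-spline basis weights for local parameter u in [0, 1]."""
    return (
        (1 - u) ** 3 / 6,
        (3 * u**3 - 6 * u**2 + 4) / 6,
        (-3 * u**3 + 3 * u**2 + 3 * u + 1) / 6,
        u**3 / 6,
    )

def trajectory_point(ctrl: List[Point3], t: float) -> Point3:
    """Evaluate one pixel's 3D trajectory at normalized time t in [0, 1]."""
    if len(ctrl) < 4:
        raise ValueError("a cubic B-spline needs at least 4 control points")
    n_seg = len(ctrl) - 3                  # number of cubic segments
    s = min(max(t, 0.0), 1.0) * n_seg      # clamp t, then map to segment space
    seg = min(int(s), n_seg - 1)           # t == 1 falls into the last segment
    u = s - seg                            # local parameter within the segment
    w = cubic_basis(u)
    return tuple(sum(w[i] * ctrl[seg + i][d] for i in range(4)) for d in range(3))


static_pixel = [(1.0, 2.0, 3.0)] * 5
moving_pixel = [(float(k), 0.0, 0.0) for k in range(6)]
for t in (0.0, 0.5, 1.0):
    print(t, trajectory_point(static_pixel, t), trajectory_point(moving_pixel, t))
```

A pixel whose control points are all identical stays fixed, and equally spaced control points on a line give uniform linear motion (here x = 1 + 3t). Because the curve is continuous in t, a position can be queried at any time, not just at frame timestamps.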
