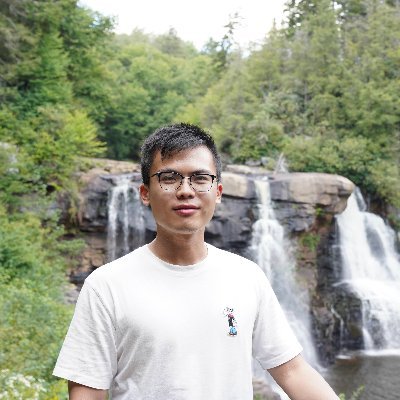

Xichen Pan

@xichen_pan

Followers: 633 | Following: 95 | Media: 7 | Statuses: 46

PhD Student @NYU_Courant, Researcher @metaai; Multimodal Generation | Prev: @MSFTResearch, @AlibabaGroup, @sjtu1896; More at https://t.co/yyS8q316AV

New York, USA

Joined August 2022

We find that training unified multimodal understanding and generation models is so easy that you do not need to tune MLLMs at all. An MLLM's knowledge/reasoning/in-context learning can be transferred from multimodal understanding (text output) to generation (pixel output) even when it is FROZEN!

9

67

417

three years ago, DiT replaced the legacy unet with a transformer-based denoising backbone. we knew the bulky VAEs would be the next to go -- we just waited until we could do it right. today, we introduce Representation Autoencoders (RAE). >> Retire VAEs. Use RAEs. 👇(1/n)

51

290

2K

🎉 Excited to share RecA: Reconstruction Alignment Improves Unified Multimodal Models 🔥 Post-train w/ RecA: 8k images & 4 hours (8 GPUs) → SOTA UMMs: GenEval 0.73→0.90 | DPGBench 80.93→88.15 | ImgEdit 3.38→3.75 Code: https://t.co/yFEvJ0Algw 1/n

6

29

80

@joserf28323 @CVPR @ICCVConference @nyuniversity Thanks for bringing this to my attention. I honestly wasn’t aware of the situation until the recent posts started going viral. I would never encourage my students to do anything like this—if I were serving as an Area Chair, any paper with this kind of prompt would be

10

28

215

metaquery is now open-source — with both the data and code available.

2

7

56

The code and instruction-tuning data for MetaQuery are now open-sourced! Code: https://t.co/VpHrt5POSH Data: https://t.co/EvpCEPDGFN Two months ago, we released MetaQuery, a minimal training recipe for SOTA unified understanding and generation models. We showed that tuning few

huggingface.co

1

23

134

[8/8] For more details, please check below: Paper: https://t.co/lkv3DsKahu Website: https://t.co/8rPh56xe3d It was a great pleasure to work with @sainingxie, @j1h0u, @ImSNShukla, @felixudr, @iam_aashusingh, @zhuokaiz, @shlokkkk, Jialiang, Kunpeng, @zhiyangx11, @JiuhaiC

xichenpan.com

Introducing MetaQuery, a minimal recipe for building state-of-the-art unified multimodal understanding (text output) and generation (pixel output) models

3

4

29

[7/8] Even with a frozen MLLM, our model is able to recover visual details beyond semantics, and provide good image editing results.

1

1

17

[6/8] Tuned on this data, MetaQuery achieves strong subject-driven generation (first row) and even unlocks several interesting new capabilities, such as visual association and logo design (second row).

1

1

15

[5/8] We also adopt an interesting approach to collecting instruction-tuning data (inspired by MagicLens). Instead of creating image pairs with known transformations such as ControlNet, we use naturally occurring image pairs and ask MLLMs to describe the diverse transformations between them.

1

1

13

[4/8] MetaQuery achieves SOTA performance on knowledge and reasoning benchmarks (like the cases shown in the first tweet). It is also the first model to successfully transfer the advanced capabilities of MLLMs to image generation and exceed the performance of SOTA T2I models.

1

1

15

[3/8] Bridging the powerful Qwen MLLM and the Sana diffusion model, we achieve SOTA-level multimodal understanding and generation results with only 25M publicly available image-caption pairs.

1

1

15

[2/8] This can be easily achieved with MetaQuery, under a very classic learnable query architecture (also used by GILL, DreamLLM, SEED-X, etc.). MetaQuery has only one denoising objective and is trained only on image-caption pairs to augment frozen MLLMs with image generation.

2

1

20

[1/8] Previous unified models jointly model p(text, pixel) in one single transformer, while we delegate understanding and generation to frozen MLLMs and diffusion models, respectively. That is to say, complex training recipes and careful data balancing are no longer needed.

1

1

16

Heading to #NeurIPS2024 to present Cambrian-1 w/ @TongPetersb! Catch our oral presentation Friday @ 10am (Oral 5C) and our poster afterwards until 2pm (#3700 in East Hall A-C) 🪼🎉

2

12

57

🚨 New VLM paper! Florence-VL: Enhancing Vision-Language Models with Generative Vision Encoder and Depth-Breadth Fusion 1️⃣ Are CLIP-style vision transformers the best vision encoder for VLMs? We explore new possibilities with Florence-2, a generative vision foundation model,

1

6

31

SV3D takes an image as input and outputs camera-controlled novel views that are highly consistent across the views. We also propose techniques to convert these novel views into quality 3D meshes. View synthesis models are publicly released. Project page:

Today, we are releasing Stable Video 3D, a generative model based on Stable Video Diffusion. This new model advances the field of 3D technology, delivering greatly improved quality and multi-view. The model is available now for commercial and non-commercial use with a Stability

4

22

139

TLDR: Meet ✨Lumiere✨ our new text-to-video model from @GoogleAI! Lumiere is designed to create entire clips in just one go! Seamlessly opening up possibilities for many applications: Image-to-video 🖼️ Stylized generation 🖌️ Video editing 🪩 and beyond. See 🧵👇

70

203

920

Introducing Scalable Interpolant Transformer! SiT integrates a flexible interpolant framework into DiT, enabling a nuanced exploration of dynamical transport in image generation. With an FID of 2.06 on ImageNet 256, SiT pushes Interpolant-based models to new heights! (1/n)

2

14

100