Aydyn Tairov

@tairov

Followers: 974

Following: 462

Media: 134

Statuses: 1K

Production Engineer / ex-Meta

London

Joined March 2009

BTW, Jensen’s last bet was legendary (DGX-1 delivered to the OpenAI office).

Bold bet from Nvidia. DGX Spark and its successors have a really high chance of becoming the core platform for future AI appliances: satellites, robots, cars. All things that Elon is actually building RN.

From rockets to AI. Nine years after the original NVIDIA DGX-1 handoff, Jensen Huang delivered a brand-new DGX Spark to @ElonMusk at Starbase. 🚀✨ The DGX Spark is a petaflop powerhouse built for creators, researchers, and developers — a desktop-sized supercomputer with five

OpenZL outperforms zstd, xz, gzip, and Blosc on multiple real-world datasets with a 10x (!!!) speed improvement. Another huge win for representing computations as a graph (DAG).

Meta / OpenZL: A Novel Data Compression Framework

It's not a new algorithm. It’s a new
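OpenZL's own API isn't shown in the post. As a hedged illustration of the general idea (a compressor built as a graph of format-aware transforms feeding a generic backend), the Python sketch below chains a hypothetical delta-encoding node into zlib and compares the result with plain zlib on synthetic, evenly spaced timestamps. The function names and the data are assumptions for illustration, not OpenZL's design.

```python
import struct
import zlib


def delta_encode(values):
    """Transform node: replace each integer with its difference from the previous one."""
    prev = 0
    out = []
    for v in values:
        out.append(v - prev)
        prev = v
    return out


def delta_decode(deltas):
    """Inverse of delta_encode: running sum of the deltas."""
    total = 0
    out = []
    for d in deltas:
        total += d
        out.append(total)
    return out


def pack(values):
    # Serialize as little-endian signed 64-bit integers.
    return struct.pack(f"<{len(values)}q", *values)


def unpack(blob):
    return list(struct.unpack(f"<{len(blob) // 8}q", blob))


def compress_plain(values):
    # Baseline: generic compressor applied to the raw serialized bytes.
    return zlib.compress(pack(values), 9)


def compress_graph(values):
    # Two-node pipeline: delta transform -> generic zlib backend.
    return zlib.compress(pack(delta_encode(values)), 9)


def decompress_graph(blob):
    # Walk the pipeline backwards: zlib -> unpack -> inverse delta.
    return delta_decode(unpack(zlib.decompress(blob)))


if __name__ == "__main__":
    timestamps = [1_700_000_000 + 7 * i for i in range(10_000)]
    print(len(compress_plain(timestamps)), len(compress_graph(timestamps)))
```

The delta node turns a slowly changing column into a stream of near-identical values that the generic backend squeezes far better; that structure-aware preprocessing is the kind of step a transform graph lets you compose.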

It turns out we've all been using MCP wrong. Most agents today use MCP by exposing the "tools" directly to the LLM. We tried something different: https://t.co/IHAVr6nn8F

#BirthdayWeek

blog.cloudflare.com

It turns out we've all been using MCP wrong. Most agents today use MCP by exposing the "tools" directly to the LLM. We tried something different: Convert the MCP tools into a TypeScript API, and then...
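A minimal sketch of the "tools as a TypeScript API" idea, written in Python: it turns MCP-style tool descriptions (`name`, `description`, and a JSON Schema `inputSchema`) into a TypeScript interface declaration that an LLM could write code against. It handles only flat object schemas with primitive and array properties, and the `Tools` interface name and camelCase method names are assumptions, not Cloudflare's implementation.

```python
# Map a small subset of JSON Schema primitive types to TypeScript types.
TS_TYPES = {"string": "string", "number": "number", "integer": "number", "boolean": "boolean"}


def ts_type(schema):
    """Translate one JSON Schema property into a TypeScript type (subset only)."""
    if schema.get("type") == "array":
        return f"{ts_type(schema.get('items', {}))}[]"
    return TS_TYPES.get(schema.get("type"), "unknown")


def to_camel(name):
    """get_weather -> getWeather (hypothetical naming convention)."""
    head, *rest = name.split("_")
    return head + "".join(part.capitalize() for part in rest)


def tool_to_ts(tool):
    """Render one tool as a documented async method signature."""
    schema = tool.get("inputSchema", {})
    required = set(schema.get("required", []))
    fields = []
    for prop, prop_schema in schema.get("properties", {}).items():
        optional = "" if prop in required else "?"
        fields.append(f"{prop}{optional}: {ts_type(prop_schema)}")
    args = "{ " + "; ".join(fields) + " }" if fields else "{}"
    doc = tool.get("description", "")
    return f"/** {doc} */\n  {to_camel(tool['name'])}(input: {args}): Promise<unknown>;"


def tools_to_ts_api(tools, api_name="Tools"):
    """Combine all tools into a single TypeScript interface declaration."""
    body = "\n  ".join(tool_to_ts(t) for t in tools)
    return f"interface {api_name} {{\n  {body}\n}}"


if __name__ == "__main__":
    tools = [{
        "name": "get_weather",
        "description": "Fetch a forecast",
        "inputSchema": {
            "type": "object",
            "properties": {"city": {"type": "string"}, "days": {"type": "integer"}},
            "required": ["city"],
        },
    }]
    print(tools_to_ts_api(tools))
```

For the sample tool this prints an interface with one method, `getWeather(input: { city: string; days?: number }): Promise<unknown>;`. The model then writes ordinary code against that interface instead of emitting one tool call at a time.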

This week on Democratizing AI Compute, @clattner_llvm shares the origin story of the MLIR compiler framework: how it started, what it changed, and the power struggles along the way.

modular.com

LinkedIn gonna be crazy when they find out ChatGPT can do images next week

Building on last month's release, we're excited to unveil our next round of improvements! 💪 MAX 25.2 delivers industry-leading performance on @nvidia GPUs, built from the ground up without CUDA, to power faster, more responsive, and more customizable GenAI deployments at scale.

💪🏻 This is SO COOL! I built an app that counts dumbbell reps with an AI model in the browser, using TensorFlow.js and a pose detection model.
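The counting logic behind such an app can be sketched independently of the model. Assuming the pose detector returns 2D (x, y) keypoints for the shoulder, elbow, and wrist, the Python sketch below computes the elbow angle and counts one rep each time the arm goes from curled back to extended. The 50° and 150° thresholds are hypothetical and would need tuning; the gap between them acts as hysteresis so jittery keypoints don't double-count.

```python
import math


def joint_angle(a, b, c):
    """Angle ABC in degrees with b as the vertex (e.g. shoulder-elbow-wrist)."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        raise ValueError("coincident keypoints: angle is undefined")
    cos = (v1[0] * v2[0] + v1[1] * v2[1]) / norm
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    return math.degrees(math.acos(max(-1.0, min(1.0, cos))))


class RepCounter:
    """Two-threshold state machine over a stream of elbow angles."""

    def __init__(self, curl_below=50.0, extend_above=150.0):
        self.curl_below = curl_below      # hypothetical "fully curled" threshold
        self.extend_above = extend_above  # hypothetical "fully extended" threshold
        self.curled = False
        self.reps = 0

    def update(self, angle):
        if not self.curled and angle < self.curl_below:
            self.curled = True            # top of the curl reached
        elif self.curled and angle > self.extend_above:
            self.curled = False           # arm lowered again: one full rep
            self.reps += 1
        return self.reps
```

For example, feeding the angle sequence 170, 40, 160, 30, 155 through `update` yields 2 reps; intermediate angles between the thresholds never change the state.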

#NotebookLM chewed through my #Mojo 🔥 vs. 6-language benchmark of llama2 implementations in just 5 minutes! Completely blown away by the analysis in this deep dive into programming languages. It's hands down the most insightful and concise discussion I've ever listened to!

GitHub joins the ranks of AI model deployment platforms. Let's see how it goes. Is there any chance that HF will have to worry?

I've been a huge fan of https://t.co/NUdK2vUByK for a while — it felt like the best way to write secure and reliable apps! Thought it was unbeatable... until today 😮 Just saw this on HN 👉 https://t.co/ViLJioA7v4 🤯 #NoCode #HN #Tech #Development #Security #Reliability

usenothing.com

A timer that counts nothing.

TIL: Google's first name was BackRub. From the beginning, it analyzed a website’s backlinks (incoming links from other sites) to determine its rank.
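BackRub's backlink analysis grew into PageRank. A textbook power-iteration version of PageRank (a sketch, not Google's production code) fits in a few lines of Python: each page splits its rank evenly among the pages it links to, and a damping factor (0.85 here, the value suggested in the original PageRank paper) mixes in a uniform random jump. The `links` dictionary format is an assumption for illustration.

```python
def pagerank(links, damping=0.85, iterations=50):
    """links maps each page to the list of pages it links to (its outgoing links)."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    if not pages:
        return {}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Every page starts with the random-jump share.
        new = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page passes its rank to the pages it links to, i.e. their backlinks.
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new[t] += share
            else:
                # Dangling page (no outgoing links): spread its rank evenly over all pages.
                for t in pages:
                    new[t] += damping * rank[page] / n
        rank = new
    return rank


if __name__ == "__main__":
    web = {"a": ["b", "c"], "b": ["c"], "c": ["a"]}
    print(sorted(pagerank(web).items(), key=lambda kv: -kv[1]))
```

In the toy web above, page `c` collects backlinks from both `a` and `b`, so it ends up with the highest rank, which is exactly the intuition behind ranking by backlinks.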

It's great to see such esteemed voices in the AI community featuring llama2.🔥 as a reference solution in the Mojo language that demonstrates many of its capabilities. It's also the most popular fork/re-implementation of llama2.c and holds the title for the fastest CPU inference of

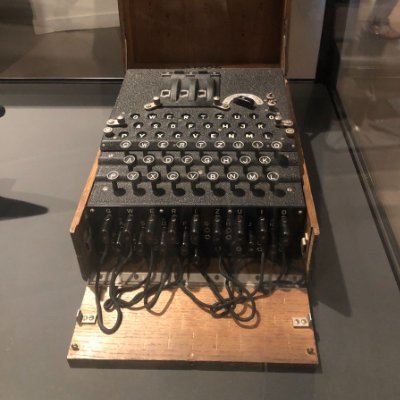

AI is math. GPU is metal. Sitting between math and metal is a programming language. Ideally, it should feel like Python but scale like CUDA. I find two newcomers in this middle layer quite exciting:

1. Bend: compiles modern high-level language features to native multi-threading

Insights from the AWS Summit London 2024

This week I visited the AWS Summit in London and checked out so many cool workshops put on by the AWS team. They covered all sorts of topics, from proving out new ways to search and find info (retrieval augmentation) to the latest in

Karpathy rewrote GPT-2 training in about 1,000 lines of plain C code and also explained how it works in a tweet:

# explaining llm.c in layman terms

Training Large Language Models (LLMs), like ChatGPT, involves a large amount of code and complexity. For example, a typical LLM training project might use the PyTorch deep learning library. PyTorch is quite complex because it implements a very
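The core idea of llm.c is that training needs no framework: you write the forward pass, derive the backward pass by hand, and update the weights yourself. As a toy Python illustration of that workflow (not llm.c's code, which does this for GPT-2's layers in C), here is a single linear unit fit with mean-squared error and hand-written gradients. The learning rate, step count, and data are assumptions chosen so the toy converges.

```python
def forward(w, b, xs):
    # Forward pass: pred_i = w * x_i + b
    return [w * x + b for x in xs]


def mse_loss(preds, ys):
    return sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(ys)


def backward(xs, preds, ys):
    # d(loss)/d(pred_i) = 2 * (pred_i - y_i) / n, then the chain rule
    # through pred_i = w * x_i + b gives d/dw = sum(dpred_i * x_i), d/db = sum(dpred_i).
    n = len(ys)
    dpred = [2.0 * (p - y) / n for p, y in zip(preds, ys)]
    dw = sum(d * x for d, x in zip(dpred, xs))
    db = sum(dpred)
    return dw, db


def train(xs, ys, lr=0.05, steps=1000):
    w, b = 0.0, 0.0
    for _ in range(steps):
        preds = forward(w, b, xs)
        dw, db = backward(xs, preds, ys)
        # Plain gradient descent update, no optimizer library.
        w -= lr * dw
        b -= lr * db
    return w, b


if __name__ == "__main__":
    xs = [0.0, 1.0, 2.0, 3.0, 4.0]
    ys = [3.0 * x + 1.0 for x in xs]
    w, b = train(xs, ys)
    print(round(w, 4), round(b, 4), mse_loss(forward(w, b, xs), ys))
```

Fitting data generated from y = 3x + 1 recovers w ≈ 3 and b ≈ 1. llm.c applies the same forward/backward/update loop to every layer of a transformer, which is where most of its roughly 1,000 lines go.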
