Weinan Sun

@sunw37

Followers: 790 | Following: 2K | Media: 28 | Statuses: 889

Neuroscience, Artificial Intelligence, and Beyond. Assistant professor, Neurobiology and Behavior @CornellNBB

Joined February 2016

1/12 How do animals build an internal map of the world? In our new paper, we tracked thousands of neurons in mouse CA1 over days/weeks as they learned a VR navigation task. @nspruston @HHMIJanelia, w/ co-1st author @JohanWinn Video summary: https://t.co/7iU0R4OVbf Paper:

The US should do something equally big in brain circuit mapping https://t.co/qZerDAjbJf

Incredibly proud to announce that today @E11BIO is releasing our first preprint together with accompanying open data and methods🚀 Here we show how our PRISM technology addresses the biggest bottlenecks in connectomics: tracing and sample fragility.

@E11BIO is excited to unveil PRISM technology for mapping brain wiring with simple light microscopes. Today, brain mapping in humans and other mammals is bottlenecked by accurate neuron tracing. PRISM uses molecular ID codes and AI to help neurons trace themselves. We discovered

Veo is a more general reasoner than you might think. Check out this super cool paper on "Video models are zero-shot learners and reasoners" from my colleagues at @GoogleDeepMind.

Why does AI sometimes fail to generalize, and what might help? In a new paper, we highlight the latent learning gap — which unifies findings from language model weaknesses to agent navigation — and suggest that episodic memory complements parametric learning to bridge it. Thread:

Our thoughts on symbols in mental representation, given today's advanced neural nets, w/ @cocosci_lab @RTomMcCoy @Brown_NLP @TaylorWWebb Major open Q: Can neural nets learn symbol-related abilities *without* training on massive, unrealistic data generated from symbolic systems?

🤖🧠 NEW PAPER ON COGSCI & AI 🧠🤖 Recent neural networks capture properties long thought to require symbols: compositionality, productivity, rapid learning So what role should symbols play in theories of the mind? For our answer...read on! Paper: https://t.co/VsCLpsiFuU 1/n

The world-renowned Cornell Lab of Ornithology is seeking to hire a new director.

Excited to share new work @icmlconf by Loek van Rossem exploring the development of computational algorithms in recurrent neural networks. Hear it live tomorrow, Oral 1D, Tues 14 Jul West Exhibition Hall C: https://t.co/zsnSlJ0rrc Paper: https://t.co/aZs7VZuFNg (1/11)

openreview.net

Even when massively overparameterized, deep neural networks show a remarkable ability to generalize. Research on this phenomenon has focused on generalization within distribution, via smooth...

"A transformer trained on 10M solar systems nails planetary orbits. But it botches gravitational laws".

Can an AI model predict perfectly and still have a terrible world model? What would that even mean? Our new ICML paper formalizes these questions One result tells the story: A transformer trained on 10M solar systems nails planetary orbits. But it botches gravitational laws 🧵

New paper: World models + Program synthesis by @topwasu 1. World modeling on-the-fly by synthesizing programs w/ 4000+ lines of code 2. Learns new environments from minutes of experience 3. Positive score on Montezuma's Revenge 4. Compositional generalization to new environments

Transformers employ different strategies through training to minimize loss, but how do these tradeoff and why? Excited to share our newest work, where we show remarkably rich competitive and cooperative interactions (termed "coopetition") as a transformer learns. Read on 🔎⏬

How does in-context learning emerge in attention models during gradient descent training? Sharing our new Spotlight paper @icmlconf: Training Dynamics of In-Context Learning in Linear Attention https://t.co/rr8T8ww5Kt Led by Yedi Zhang with @Aaditya6284 and Peter Latham

Cool work from @HHMIJanelia: "cognitive graphs of latent structure." Looks like even more evidence for CSCG-like representation and schemas (https://t.co/DdijjzJlKn, https://t.co/68jAi540Oy).

biorxiv.org

Mental maps of environmental structure enable the flexibility that defines intelligent behavior. We describe a striking internal representation in rat prefrontal cortex that exhibits hallmarks of a...

This preprint is now published in @Nature. With current and former DeepMinders @yuvaltassa, Josh Merel, Matt Botvinick, and my @HHMIJanelia colleagues @vaxenburg, Igor Siwanowicz, @KristinMBranson, @MichaelBReiser, Gwyneth Card and more

🪰By infusing a virtual fruit fly with #AI, Janelia & @GoogleDeepMind scientists created a computerized insect that can walk & fly just like the real thing➡️ https://t.co/LqvOHKJr4F 🤖Read more about this work, first published in a #preprint in 2024➡️ https://t.co/QXsltdPxMb

(Plz repost) I’ve been receiving some good news lately and will be hiring at all levels to expand the lab. Please get in contact if you are interested in reinforcement learning, neural plasticity, circuit dynamics, and/or hearing rehabilitation. pierre.apostolides @ umich .edu

From our team at @GoogleDeepMind: we ask, as an LLM continues to learn, how do new facts pollute existing knowledge? (and can we control it) We investigate such hallucinations in our new paper, to be presented as Spotlight at @iclr_conf next week.

New preprint! Intelligent creatures can solve truly novel problems (not just variations of previous problems), zero-shot! Why? They can "think" before acting, i.e. mentally simulate possible behaviors and evaluate likely outcomes How can we build agents with this ability?

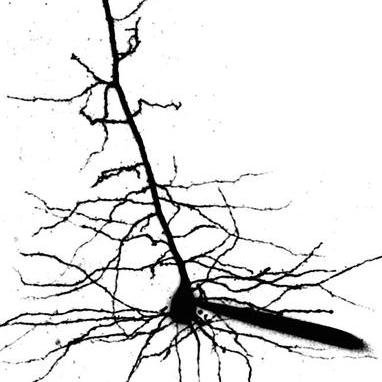

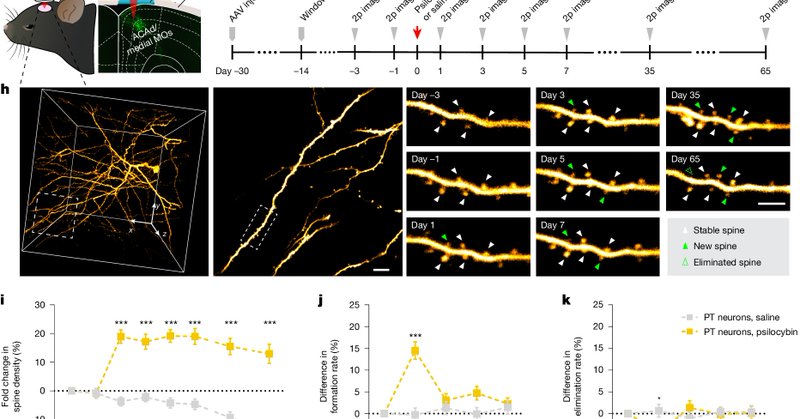

Our latest study identifies a specific cell type and receptor essential for psilocybin’s long-lasting neural and behavioral effects 🍄🔬🧠🐁 Led by Ling-Xiao Shao and @ItsClaraLiao Funded by @NIH @NIMHgov 📄Read in @Nature - https://t.co/teA2IhCpXi 1/12

nature.com

Nature - A pyramidal cell type and the 5-HT2A receptor in the medial frontal cortex have essential roles in psilocybin’s long-term drug action.