Sumanth Hegde

@sumanthrh

Followers

943

Following

4K

Media

67

Statuses

403

Post-training @anyscalecompute. Prev - @UCSanDiego, @C3_AI, @iitmadras. Machine Learning and Systems. Intensity is all you need.

San Francisco

Joined February 2016

Some of our interesting observations from working on multi-turn text2SQL: - Data-efficient RL works pretty well: We did very typical GRPO settings; Just make sure to use "hard-enough" samples and no KL. KL can stabilize learning early on but will always bring down rewards

1/N Introducing SkyRL-SQL, a simple, data-efficient RL pipeline for Text-to-SQL that trains LLMs to interactively probe, refine, and verify SQL queries with a real database. 🚀 Early Result: trained on just ~600 samples, SkyRL-SQL-7B outperforms GPT-4o, o4-mini, and SFT model

0

5

18

Wide-EP and prefill/decode disaggregation APIs for vLLM are now available in Ray 2.52 🚀🚀 Validated at 2.4k tokens/H200 on Anyscale Runtime, these patterns maximize sparse MoE model inference efficiency, but often require non-trivial orchestration logic. Here’s how they

1

15

27

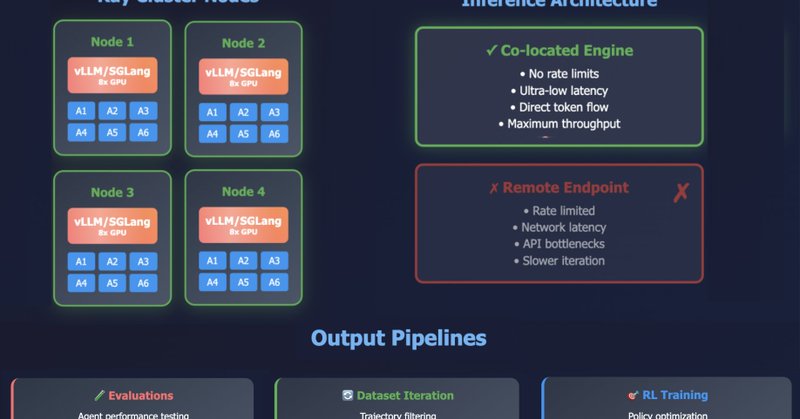

1/n 🚀 Introducing SkyRL-Agent, a framework for efficient RL agent training. ⚡ 1.55× faster async rollout dispatch 🛠 Lightweight tool + task integration 🔄 Backend-agnostic (SkyRL-train / VeRL / Tinker) 🏆 Used to train SA-SWE-32B, improving Qwen3-32B from 24.4% → 39.4%

5

60

274

🧑🏫On-Policy Distillation is available as an example on SkyRL! The implementation required no library code changes, and we were able to reproduce AIME math reasoning experiments from the @thinkymachines blogpost. Check out our detailed guide to see how! https://t.co/TqDS649oFQ

Our latest post explores on-policy distillation, a training approach that unites the error-correcting relevance of RL with the reward density of SFT. When training it for math reasoning and as an internal chat assistant, we find that on-policy distillation can outperform other

4

5

27

🔥 New Blog: “Disaggregated Inference: 18 Months Later” 18 months in LLM inference feels like a new Moore’s Law cycle – but this time not just 2x per year: 💸 Serving cost ↓10–100x 🚀 Throughput ↑10x ⚡ Latency ↓5x A big reason? Disaggregated Inference. From DistServe, our

hao-ai-lab.github.io

Eighteen months ago, our lab introduced DistServe with a simple bet: split LLM inference into prefill and decode, and scale them independently on separate compute pools. Today, almost every product...

7

48

175

☁️SkyRL now runs seamlessly with SkyPilot! Let @skypilot_org handle GPU provisioning and cluster setup, so you can focus on RL training with SkyRL. 🎯 Launch distributed RL jobs effortlessly ⚙️ Auto-provision GPUs across clouds 🤖 Train your LLM agents at scale Get started

0

10

25

🚀 SkyRL has day-zero support for OpenEnv!! This initial integration with OpenEnv highlights how easily new environments plug into SkyRL. Train your own LLM agents across containerized environments with simple, Gym-style APIs 🔥 👉 Check it out:

Excited to share OpenEnv: frontier-grade RL environments for the open-source community 🔥! https://t.co/KVeBMsxohL 🧩 Modular interfaces: a clean Gymnasium-style API (reset(), step(), state()) that plugs into any RL framework 🐳 Built for scale: run environments in containers

0

10

22

⚙️ Engineering for scale and speed. Next-gen RL libraries are redefining how agents learn and interact. 🎤 Open Agent Summit Speakers: • @sumanthrh (Anyscale) – “SkyRL: A Modular RL Library for LLM Agents” • @tyler_griggs_ (UC Berkeley) – “Lessons in Agentic RL Modeling and

0

8

57

🚀 Excited to release our new paper: “Barbarians at the Gate: How AI is Upending Systems Research” We show how AI-Driven Research for Systems (ADRS) can rediscover or outperform human-designed algorithms across cloud scheduling, MoE expert load balancing, LLM-SQL optimization,

8

35

153

SkyRL now supports Megatron! Training massive MoE models demands more than just ZeRO-3/FSDP sharding. The Megatron backend for SkyRL unlocks high throughput training with: ✅ 5D parallelism (tensor + pipeline + context + expert + data) ✅ Efficient training for 30B+ MoEs

2

8

21

Training our advisors was too hard, so we tried to train black-box models like GPT-5 instead. Check out our work: Advisor Models, a training framework that adapts frontier models behind an API to your specific environment, users, or tasks using a smaller, advisor model (1/n)!

16

43

244

when bro suddenly starts submitting PRs with detailed descriptions, correct grammar and casing, and testing steps

164

677

17K

RT @NovaSkyAI: Scaling agentic simulations is hard, so in collaboration with @anyscalecompute we wrote up our experience using Ray for agen…

anyscale.com

Powered by Ray, Anyscale empowers AI builders to run and scale all ML and AI workloads on any cloud and on-prem.

0

1

0

Do you find it challenging to run RL / agent simulations at a large scale (e.g. dealing with docker and remote execution)? Check out our blog post https://t.co/iNPivIzbc2 where we show how to do it with Ray and mini-swe-agent (kudos to @KLieret)

anyscale.com

Powered by Ray, Anyscale empowers AI builders to run and scale all ML and AI workloads on any cloud and on-prem.

0

7

17

🚀🚀🚀🚀

SkyRL x Environments Hub is live!! Train on any of 100+ environments natively on SkyRL today ➡️ https://t.co/Aj287ZKOsw This was super fun to work on, the @PrimeIntellect team crushed it, go OSS!

0

0

4

An amazing collaboration with the Biomni team! We introduce Biomni-R0, a reasoning-enabled multi-task multi-tool biomedical research agent, trained through end-to-end RL. The results are impressive -- 2x stronger than the base model and >10% better than GPT 5 and Claude 4 Sonnet!

🚀 Thrilled to share a preview of Biomni-R0 — we trained the first RL agent end-to-end for biomedical research. ➡️ nearly 2× stronger than its open-source base ➡️ >10% better than frontier closed-source models ➡️ Scalable path to hill climb to expert-level performance 🔗

4

16

61

2. Online quantization: Policy model runs in BF16, while rollouts run in FP8/ Int8. FlashRL patches vLLM’s weight loading APIs to ensure compatibility. We’ve ported over these patches for v0.9.2 and further optimized weight syncing! Try it out:

0

0

1

We’ve implemented both ingredients in FlashRL: 1. Truncated Importance Sampling: Ensures that the difference in rollout and policy logprobs doesn’t hurt performance This is a simple token-level correction factor for your policy loss. Can help stabilize training even without

1

0

2

SkyRL now has native support for FlashRL! We now support Int8 and FP8 rollouts, enabling blazing fast inference - upto 1.7x for the DAPO recipe - without compromising performance!

⚡𝐅𝐏𝟖 makes RL faster — but at the cost of performance. We present 𝐅𝐥𝐚𝐬𝐡𝐑𝐋, the first 𝐨𝐩𝐞𝐧–𝐬𝐨𝐮𝐫𝐜𝐞 & 𝐰𝐨𝐫𝐤𝐢𝐧𝐠 𝐑𝐋 𝐫𝐞𝐜𝐢𝐩𝐞 that applies 𝐈𝐍𝐓𝟖/𝐅𝐏𝟖 for rollout 𝐰𝐢𝐭𝐡𝐨𝐮𝐭 𝐥𝐨𝐬𝐢𝐧𝐠 𝐩𝐞𝐫𝐟𝐨𝐫𝐦𝐚𝐧𝐜𝐞 compared to 𝐁𝐅𝟏𝟔! 📝 Blog:

3

21

118

I’m confused by ppl saying GPT-5 is bad. At least with thinking mode, I find it way more focused, more reasonable, and less rambling. Sometimes I even have to ask it to go into more detail! IMO best model from OAI so far.

102

26

894