Stephan Rabanser

@steverab

Followers

519

Following

465

Media

1K

Statuses

10K

Incoming Postdoctoral Researcher @Princeton. Reliable, safe, trustworthy machine learning. Previously: @UofT @VectorInst @TU_Muenchen @Google @awscloud

Toronto, Ontario

Joined April 2010

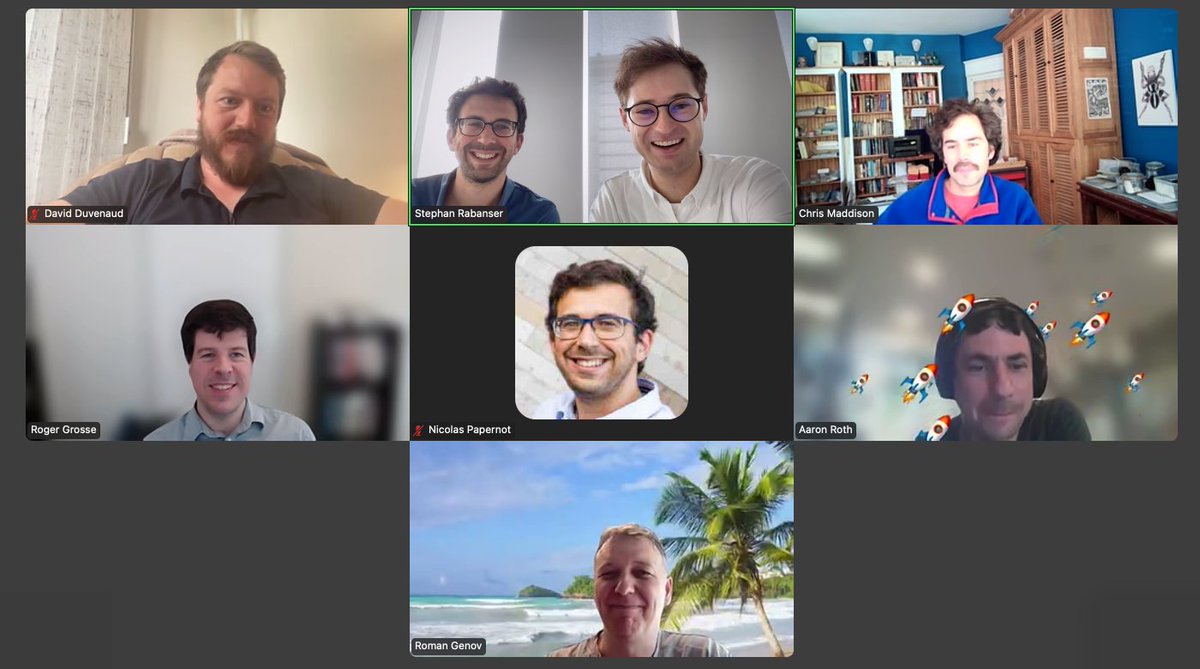

🎓 PhDone! After 5 intense years, I’m thrilled to share that I’ve just passed my final oral examination and had my thesis accepted without corrections! 🥳

8

0

105

RT @MLStreetTalk: We might not need cryptography anymore for some applications. Because ML models are drastically changing notions of trus…

0

9

0

I also want to thank all of my (ex) lab-mates, paper collaborators, and the broader research community at @UofT and @VectorInst for their support and inspiration! Excited for my next chapter at @Princeton @PrincetonCITP with @random_walker and @msalganik!

0

0

1

I’m incredibly grateful to my advisor @NicolasPapernot (fun fact: I am his first PhD graduate!), my supervisory committee (@rahulgk, @DavidDuvenaud, @RogerGrosse, @zacharylipton), and my examination committee (@Aaroth, @cjmaddison, Roman Genov).

1

0

6

Thanks to all my amazing collaborators at Google for hosting me for this internship in Zurich and for making this work possible: Nathalie Rauschmayr, Achin (Ace) Kulshrestha, Petra Poklukar, @wittawatj, @seanAugenstein, @ccwang1992, and @fedassa!

1

1

1

RT @adam_dziedzic: 🚨 Join us at ICML 2025 for the Workshop on Unintended Memorization in Foundation Models (MemFM)! 🚨 📅 Saturday, July 19…

0

7

0

RT @VectorInst: Happy #AIAppreciationDay! 🎉 What better way to celebrate than showcasing even more Vector researchers advancing AI at #ICML…

0

2

0

RT @yucenlily: In our new ICML paper, we show that popular families of OOD detection procedures, such as feature and logit based methods, a…

arxiv.org

To detect distribution shifts and improve model safety, many out-of-distribution (OOD) detection methods rely on the predictive uncertainty or features of supervised models trained on...

0

49

0

📄 Gatekeeper: Improving Model Cascades Through Confidence Tuning. Paper ➡️ Workshop ➡️ Tiny Titans: The next wave of On-Device Learning for Foundational Models (TTODLer-FM). Poster ➡️ West Meeting Room 215-216 on Sat 19 Jul 3:00 p.m. – 3:45 p.m.

arxiv.org

Large-scale machine learning models deliver strong performance across a wide range of tasks but come with significant computational and resource constraints. To mitigate these challenges, local...

0

0

1

📄 Selective Prediction Via Training Dynamics. Paper ➡️ Workshop ➡️ 3rd Workshop on High-dimensional Learning Dynamics (HiLD). Poster ➡️ West Meeting Room 118-120 on Sat 19 Jul 10:15 a.m. – 11:15 a.m. & 4:45 p.m. – 5:30 p.m.

arxiv.org

Selective Prediction is the task of rejecting inputs a model would predict incorrectly on. This involves a trade-off between input space coverage (how many data points are accepted) and model...

1

0

1

📄 Suitability Filter: A Statistical Framework for Classifier Evaluation in Real-World Deployment Settings (✨ oral paper ✨). Paper ➡️ Poster ➡️ E-504 on Thu 17 Jul 4:30 p.m. – 7 p.m. Oral Presentation ➡️ West Ballroom C on Thu 17 Jul 4:15 p.m. – 4:30 p.m.

arxiv.org

Deploying machine learning models in safety-critical domains poses a key challenge: ensuring reliable model performance on downstream user data without access to ground truth labels for direct...

1

0

0

📄 Confidential Guardian: Cryptographically Prohibiting the Abuse of Model Abstention. TL;DR ➡️ We show that a model owner can artificially introduce uncertainty and provide a detection mechanism. Paper ➡️ Poster ➡️ E-1002 on Wed 16 Jul 11 a.m. – 1:30 p.m.

arxiv.org

Cautious predictions -- where a machine learning model abstains when uncertain -- are crucial for limiting harmful errors in safety-critical applications. In this work, we identify a novel threat:...

1

0

0

📣 I will be at #ICML2025 in Vancouver next week to present two main conference papers (including one oral paper ✨) and two workshop papers! Say hi if you are around and want to chat about ML uncertainty & reliability! 😊 🧵 Papers in order of presentation below:

1

0

8

RT @AliShahinShams1: Can safety become a smokescreen for harm? #icml2025 ML abstain when uncertain, a safeguard to prevent catastrophic err…

0

1

0

RT @polkirichenko: Excited to release AbstentionBench -- our paper and benchmark on evaluating LLMs’ *abstention*: the skill of knowing whe…

0

81

0