Shangbin Feng

@shangbinfeng

Followers

4K

Following

11K

Media

126

Statuses

966

PhD student @uwcse @uwnlp. Model collaboration, social NLP, networks and structures. #水文学家

Joined June 2021

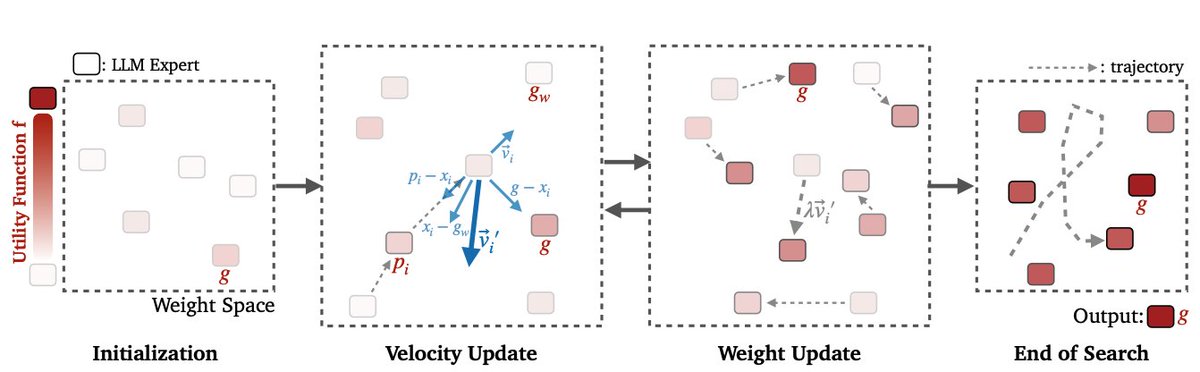

👀 How to find a better adapted model? ✨ Let the models find it for you! 👉🏻 Introducing Model Swarms: multiple LLM experts collaboratively search for new adapted models in the weight space and discover their new capabilities. 📄 Paper:

3

43

219

RT @ysu_nlp: Excited to receive the NSF CAREER Award! Grateful for all the support and encouragement I've received in the 6 years of fac….

0

8

0

RT @wyu_nd: 𝑳𝑳𝑴𝒔 can really 𝑺𝒆𝒍𝒇-𝑬𝒗𝒐𝒍𝒗𝒆, 𝒘𝒊𝒕𝒉𝒐𝒖𝒕 𝑯𝒖𝒎𝒂𝒏 𝑫𝒂𝒕𝒂! -- One LLM, two roles: Challenger creates tasks, Solver answers them. -- No d….

0

241

0

RT @gregd_nlp: 📢I'm joining NYU (Courant CS + Center for Data Science) starting this fall! I’m excited to connect with new NYU colleagues….

0

44

0

RT @chengmyra1: The more human-like LLMs become, the more we risk misunderstanding them. In our new paper, @lujainmibrahim and I explore ho….

0

20

0

RT @abeirami: If the model can't solve a problem in 100 trials with an optimized prompt, post-training* can't fix this. *RL, test-time sca….

0

2

0

RT @aclmeeting: The Winner of the 1st ACL Computational Linguistics Doctoral Dissertation Award is 🏆 Sewon Min with Rethinking Data Use….

0

22

0

RT @WeijiaShi2: How to write good reviews & rebuttals? We've invited 🌟 reviewers to share their expertise in person at our ACL mentorship s….

0

4

0

RT @StellaLisy: WHY do you prefer something over another? Reward models treat preference as a black-box 😶🌫️ but human brains 🧠 decompose deci….

0

72

0

RT @jcz12856876: 1/ 🚨New Paper 🚨 LLMs are trained to refuse harmful instructions, but internally, do they see harmfulness and refusal as th….

0

16

0

RT @tsvetshop: Novel cognitive science grounded approach to preference modeling: synthetic counterfactual training + attention-based attrib….

0

3

0

RT @HaileyJoren: PhD in Computer Science, University of California San Diego 🎓. My research focused on uncertainty and safety in AI systems….

0

21

0

RT @aclmeeting: 📣"NLP x Graphs: Where Structure Meets Language" will bring together NLP researchers working on or interested in graph-based….

2025.aclweb.org

ACL 2025 Birds of a Feather Sessions

0

2

0

RT @SmithaMilli: Today we're releasing Community Alignment - the largest open-source dataset of human preferences for LLMs, containing ~200….

0

70

0

RT @AkariAsai: I'll be hiring a couple of Ph.D. students at CMU (via LTI or MLD) in the upcoming cycle! If you are interested in joining m….

docs.google.com

Interested in Joining My Group? Thank you for your interest! I may not be able to respond to individual inquiry emails, so please read the following information before reaching out! If you're...

0

15

0

RT @AkariAsai: Some updates 🚨 I finished my Ph.D at @uwcse in June 2025! After a year at AI2 as a Research Scientist, I am joining CMU @LTI….

0

62

0

RT @ShirleyYXWu: CollabLLM won #ICML2025 ✨Outstanding Paper Award along with 6 other works! 🫂 Absolutely honored a….

0

26

0

RT @WeijiaShi2: Going to #ICML2025 next week! Excited to chat about decentralized LM training, unified models, reasoning, and more. Please….

0

4

0

RT @LogConference: We’re thrilled to share that the first in-person LoG conference is officially happening December 10–12, 2025 at Arizona….

logconference.org

Learning on Graphs Conference

0

26

0

RT @Cumquaaa: 🚀 Training an image generation model and picking sides between autoregressive (AR) and diffusion? Why not both? Check out MAD….

0

10

0

Check out this new Weijia work immediately.

Can data owners & LM developers collaborate to build a strong shared model while each retaining data control? Introducing FlexOlmo💪, a mixture-of-experts LM enabling:
• Flexible training on your local data without sharing it
• Flexible inference to opt in/out your data

0

0

13