Philipp Wu

@philippswu

Followers

2K

Following

2K

Media

50

Statuses

414

PhD @Berkeley_AI advised by @pabbeel. Previously @MetaAI @covariantai.

Berkeley, CA

Joined November 2018

🎉Excited to share a fun little hardware project we’ve been working on. GELLO is an intuitive, low-cost teleoperation device for robot arms that costs less than $300. We've seen the importance of data quality in imitation learning. Our goal is to make this more accessible 1/n

26

107

690

Excited to share what we've been brewing at PI! We’re working on making robots more helpful by making them faster and more reliable through real-world practice, even on delicate behaviors like carrying this very full latte cup

3

18

174

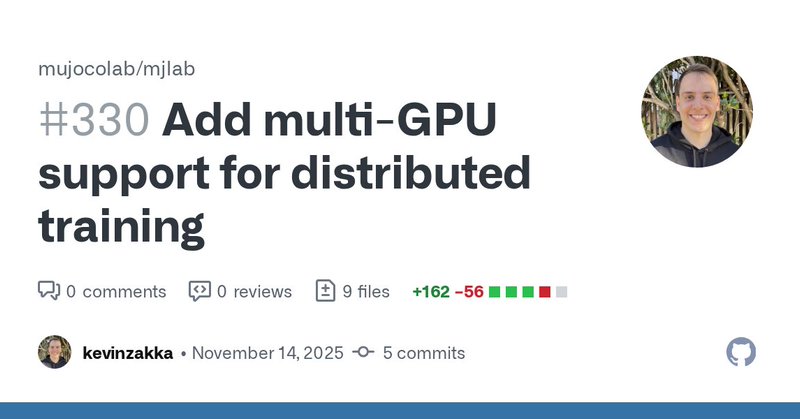

Happy Friday! Multi-GPU training is now supported in mjlab:

github.com

Implements distributed RL training across multiple GPUs using torchrun. Each GPU runs independent rollouts with full environment isolation, and rsl_rl handles gradient synchronization automatically...

5

5

172
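The multi-GPU description above (independent rollouts per GPU, then synchronized gradients) can be illustrated with a toy, CPU-only sketch. This is not mjlab or rsl_rl code: `local_gradient`, `all_reduce_mean`, and `synchronized_step` are hypothetical names, the squared-error loss is a stand-in for an RL objective, and averaging is only one common way gradients are synchronized across workers.

```python
from typing import List


def local_gradient(rollout_returns: List[float], weight: float) -> float:
    """Gradient of mean((weight - r)^2) over one worker's own rollouts (toy loss)."""
    n = len(rollout_returns)
    return 2.0 * sum(weight - r for r in rollout_returns) / n


def all_reduce_mean(values: List[float]) -> float:
    """Stand-in for a collective all-reduce: every worker ends up with the mean."""
    return sum(values) / len(values)


def synchronized_step(
    weight: float, per_worker_rollouts: List[List[float]], lr: float = 0.1
) -> List[float]:
    """One update: local gradients, averaged, then applied identically everywhere."""
    # Each simulated worker sees only its own rollouts (isolated environments).
    grads = [local_gradient(rollouts, weight) for rollouts in per_worker_rollouts]
    # After synchronization, all workers hold the same averaged gradient.
    avg_grad = all_reduce_mean(grads)
    # Identical gradient + identical starting weight keeps the replicas in lockstep.
    return [weight - lr * avg_grad for _ in per_worker_rollouts]


if __name__ == "__main__":
    new_weights = synchronized_step(0.0, [[1.0, 3.0], [5.0, 7.0]])
    print(new_weights)
```

The point of the sketch is that each worker collects different data, yet every replica applies the same update, so the model copies never drift apart.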

I spend so much time in MuJoCo I made a Halloween edition 🎃👻

10

8

198

Didn’t go max speed for safety and space reasons but pretty happy with the result!

Coming to mjlab today! This is vanilla RL, no motion imitation/AMP. Natural gaits emerge from minimal rewards: velocity tracking, upright torso, speed-adaptive joint regularization, and contact quality (foot clearance, slip, soft landings). No reference trajectories or gait

4

18

188
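As a rough illustration of how the reward terms listed above might be combined, here is a minimal sketch. Everything in it is an assumption made for illustration: the `StepInfo` fields, the functional forms, the weights, and the 5 cm clearance threshold are hypothetical and are not taken from mjlab.

```python
import math
from dataclasses import dataclass


@dataclass
class StepInfo:
    commanded_speed: float   # target forward speed, m/s
    actual_speed: float      # measured forward speed, m/s
    torso_tilt: float        # angle from vertical, rad
    joint_deviation: float   # summed squared deviation from a default pose
    foot_slip: float         # speed of feet while in contact, m/s
    swing_clearance: float   # swing-foot height, m
    landing_impact: float    # downward foot speed at touchdown, m/s


def locomotion_reward(s: StepInfo) -> float:
    """Sum of hypothetical reward terms; higher is better."""
    # Velocity tracking: 1.0 when the commanded speed is matched exactly.
    tracking = math.exp(-((s.commanded_speed - s.actual_speed) ** 2) / 0.25)
    # Upright torso: 1.0 when the torso is vertical.
    upright = math.exp(-(s.torso_tilt ** 2) / 0.1)
    # Speed-adaptive regularization: tolerate larger joint motion at higher speeds.
    reg_weight = 0.1 / (1.0 + abs(s.commanded_speed))
    regularization = -reg_weight * s.joint_deviation
    # Contact quality: penalize low foot clearance, slipping, and hard landings.
    clearance_penalty = max(0.0, 0.05 - s.swing_clearance)
    contact = -clearance_penalty - 0.1 * s.foot_slip - 0.1 * s.landing_impact
    return tracking + 0.5 * upright + regularization + contact


if __name__ == "__main__":
    clean = StepInfo(1.0, 1.0, 0.0, 0.0, 0.0, 0.10, 0.0)
    sloppy = StepInfo(1.0, 0.5, 0.3, 2.0, 0.4, 0.01, 1.0)
    print(locomotion_reward(clean), locomotion_reward(sloppy))
```

Note that no term refers to a reference trajectory or gait pattern; the reward only scores the outcome of each step.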

Super happy and honored to be a 2025 Google PhD Fellow! Thank you @Googleorg for believing in my research. I'm looking forward to making humanoid robots more capable and trustworthy partners 🤗

🎉 We're excited to announce the 2025 Google PhD Fellows! @GoogleOrg is providing over $10 million to support 255 PhD students across 35 countries, fostering the next generation of research talent to strengthen the global scientific landscape. Read more: https://t.co/0Pvuv6hsgP

35

4

196

Simulation drives robotics progress, but how do we close the reality gap? Introducing GaussGym: an open-source framework for learning locomotion from pixels with ultra-fast parallelized photorealistic rendering across >4,000 iPhone, GrandTour, ARKit, and Veo scenes! Thread 🧵

11

64

333

Great to see people already using video rewinding (proposed in https://t.co/oE9qpYUeDV) to improve their reward models! This paper adds stage prediction to better reward long-horizon tasks. Excited to see how much better reward models can get!

🚀 Introducing SARM: Stage-Aware Reward Modeling for Long-Horizon Robot Manipulation Robots struggle with tasks like folding a crumpled T-shirt—long, contact-rich, and hard to label. We propose a scalable reward modeling framework to fix that. 1/n

1

13

131

We've seen reward models make a massive impact in improving language models with RLHF. Excited to see how reward models can play a similar role in robotics! Follow David's thread for the paper! https://t.co/TMNw7Cpfzr

Huge thanks to my amazing co-authors: @justinyu_ucb (https://t.co/tsSKadrATJ) @MacSchwager @pabbeel @YideShentu @philippswu 📖Read the full paper: https://t.co/HlRxtCCWut 📷 Project website: https://t.co/2li1qk4AsG #robotics #AI #imitationlearning #SARM 5/n

0

0

4

Very excited about this overall research direction. Having such a precise model that can measure task progress enables countless possibilities for improved policy performance. As the saying goes, "You can't improve what you don't measure."

1

0

5

One of my favorite results: despite the reward model and policy being trained on the same data, the policy misidentifies the crumpled shirt as folded and pushes the unfinished fold to the corner, while the reward model correctly identifies the failure. https://t.co/R1BVQyZy17

🔍 Robust to OOD + noisy policy rollouts. SARM isn’t just for clean human demos. It accurately estimates progress even on noisy, OOD policy rollouts. 👇 A demo where SARM detects recession and is not cheated by a “fake finish”. 3/n

1

0

4

🎆New paper! We explore how to learn precise reward models that can accurately measure task progress for robot manipulation. See @QianzhongChen's thread for more details.

2

6

78

Very excited to start sharing some of the work we have been doing at Amazon FAR. In this work we present OmniRetarget, which can generate high-quality interaction-preserving data from human motions for learning complex humanoid skills. High-quality re-targeting really helps

Humanoid motion tracking performance is greatly determined by retargeting quality! Introducing 𝗢𝗺𝗻𝗶𝗥𝗲𝘁𝗮𝗿𝗴𝗲𝘁🎯, generating high-quality interaction-preserving data from human motions for learning complex humanoid skills with 𝗺𝗶𝗻𝗶𝗺𝗮𝗹 RL: - 5 rewards, - 4 DR

8

39

292

SOTA data generation from OmniRetarget + SOTA formulation from BeyondMimic = mind-blowing performance

0

5

40

New project from @kevin_zakka I've been using + helping with! Ridiculously easy setup, typed codebase, headless vis => happy 😊

I'm super excited to announce mjlab today! mjlab = Isaac Lab's APIs + best-in-class MuJoCo physics + massively parallel GPU acceleration Built directly on MuJoCo Warp with the abstractions you love.

1

3

37

Excited to introduce Dreamer 4, an agent that learns to solve complex control tasks entirely inside of its scalable world model! 🌎🤖 Dreamer 4 pushes the frontier of world model accuracy, speed, and learning complex tasks from offline datasets. co-led with @wilson1yan

82

358

3K

I'm super excited to announce mjlab today! mjlab = Isaac Lab's APIs + best-in-class MuJoCo physics + massively parallel GPU acceleration Built directly on MuJoCo Warp with the abstractions you love.

32

140

858

“…turns out the ‘render’ subset of simulation is well-matched to the ‘quasi-static’ subset of manipulation.”

Great talk by @uynitsuj presenting the Real2Render2Real paper at CoRL in Seoul! A great collaboration between @UCBerkeley and @ToyotaResearch. The code for this paper is now available, check it out here: https://t.co/ayTC0nPtf4

@ToyotaResearch @AUTOLab_Cal @Ken_Goldberg

0

8

26

Excited! Kevin always delivers🚀🚀🚀

0

0

27