Niklas Stoehr

@niklas_stoehr

Followers: 1K

Following: 4K

Media: 45

Statuses: 185

Research Scientist @GoogleDeepMind and PhD from @ETH ⭕️ Gemini Post-Training ⭕️

Zurich, Switzerland

Joined October 2017

RT @valentina__py: Interested in shaping the progress of responsible AI and meeting leading researchers in the field? SoLaR@COLM 2025 is lo…

0

6

0

RT @alexandrutifrea: Very excited about this tutorial at #AAAI2025 on inducing privacy, fairness or robustness to distribution shifts when…

0

2

0

RT @MilaNLProc: 📖 For our weekly @MilaNLProc lab seminar, it was a pleasure to have @joabaum in person, introducing his work on fact-checki…

0

2

0

RT @kevdududu: Very glad and grateful to share this fun work, especially because of the opportunity to work with this immensely talented te…

0

4

0

RT @jkminder: Can we understand and control how language models balance context and prior knowledge? Our latest paper shows it’s all about…

0

22

0

RT @a_stadt: I'm excited to announce my new lab: UCSD's Learning Meaning and Natural Language Lab. a.k.a. LeM🍋N Lab! And 📢WE ARE RECR…

0

77

0

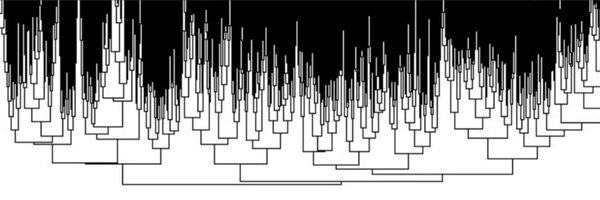

On synthetic tasks, activation scaling performs on par with steering vectors (effect, faith) but is fundamentally more parsimonious (minim). We seek to synthesize steering and interpretability, building upon recent work questioning their relationship (@peterbhase, @wzihao12,…).

1

0

2

Drawing analogies to the fascinating circuits literature (@AdithyaNLP, @ArthurConmy, …), we say a successful intervention should flip the two answer tokens (effectiveness), leave other tokens unaffected (faithfulness) (@michaelwhanna), all while being sparse (minimality).

1

0

4

Scaling individual specialized components has been successfully explored by @francescortu, @ZhijingJin, @mrinmayasachan and @jack_merullo_ among others. We train all scalars on a multi-objective using gradient-based optimization similar to @evanqed & @belindazli's COLM paper.

1

3

6
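To make the three criteria and the scalar training concrete, here is a toy sketch in plain Python. It is not the paper's implementation: the additive "components", the 4-token vocabulary, the hinge margin, the squared-change faithfulness penalty, the L1 weight `lam`, and every name in the code are assumptions chosen for illustration. One scalar per component starts at 1 (no intervention) and is updated by gradient descent on the sum of an effectiveness term, a faithfulness term, and a minimality term.

```python
# Toy sketch of learning activation scalars with a multi-objective loss.
# All names and numbers are hypothetical; this is not the paper's code.

# Each row is one component's additive contribution to the logits
# of a 4-token vocabulary (a stand-in for a real model's components).
CONTRIB = [
    [2.0, 0.0, 0.0, 0.0],  # component 0 promotes answer token A (index 0)
    [0.0, 1.0, 0.0, 0.0],  # component 1 promotes answer token B (index 1)
    [0.0, 0.0, 1.0, 0.5],  # component 2 only touches non-answer tokens
]
TOK_A, TOK_B = 0, 1   # the two answer tokens whose order should flip
OTHER = [2, 3]        # tokens that should stay unaffected


def logits(alpha):
    """Logits when component k's contribution is scaled by alpha[k]."""
    return [sum(a * row[t] for a, row in zip(alpha, CONTRIB)) for t in range(4)]


def sign(x):
    return (x > 0) - (x < 0)


def grad(alpha, base, margin=1.0, lam=0.1):
    """(Sub)gradient of effectiveness + faithfulness + minimality terms."""
    out = logits(alpha)
    g = [0.0] * len(alpha)
    # Effectiveness: hinge loss max(0, margin - (logit_B - logit_A)).
    hinge_active = margin - (out[TOK_B] - out[TOK_A]) > 0
    for k, row in enumerate(CONTRIB):
        if hinge_active:
            g[k] += row[TOK_A] - row[TOK_B]
        # Faithfulness: squared change of the non-answer logits.
        for t in OTHER:
            g[k] += 2.0 * (out[t] - base[t]) * row[t]
        # Minimality: L1 penalty pulling each scalar back toward 1.
        g[k] += lam * sign(alpha[k] - 1.0)
    return g


def train(steps=200, lr=0.05):
    alpha = [1.0] * len(CONTRIB)   # alpha = 1 means "no intervention"
    base = logits(alpha)           # unmodified logits, used for faithfulness
    for _ in range(steps):
        g = grad(alpha, base)
        alpha = [a - lr * gk for a, gk in zip(alpha, g)]
    return alpha


if __name__ == "__main__":
    learned = train()
    print("scalars:", [round(a, 3) for a in learned])
    print("logits before:", logits([1.0, 1.0, 1.0]))
    print("logits after: ", [round(x, 3) for x in logits(learned)])
```

In this toy setup the components do not overlap, so the scalar of the component that only touches non-answer tokens stays at 1 while the other two move to flip the answer. With real model components the three terms would trade off against each other.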

Activation scaling may be understood as scaling steering directions already encoded in the model, inspired by Information Flows (@javifer_96, @lena_voita), extracting latent steering vecs by @nsubramani23, concept promotion by @megamor2 or PatchScopes by @ghandeharioun et al.

1

0

4

Our new mechanistic interpretability work "Activation Scaling for Steering and Interpreting Language Models" was accepted into Findings of EMNLP 2024! 🔴🔵 📄 @kevdududu, @vesteinns, @cervisiarius, Ryan Cotterell and @AaronSchein. thread 👇

3

18

99

RT @cervisiarius: AI alignment steers AI toward human goals & values. In a recent perspective piece, we draw attention to a fundamental cha…

0

15

0

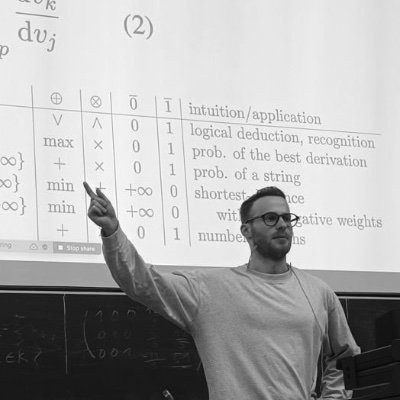

RT @cervisiarius: It was a joy to give my inaugural lecture at @EPFL_en @ICepfl last week. I tried to give an easy-to-digest intro into wha…

0

12

0

RT @manoelribeiro: I'm thrilled to announce that I'll join @PrincetonCS/@PrincetonCITP as an assistant professor in Spring 2025 — can't wai…

0

19

0

RT @kevdududu: How much does an LM depend on information provided in-context vs its prior knowledge? Check out how @vesteinns, @niklas_sto…

0

15

0