Niklas Funk (@n_w_funk)

Followers: 195 | Following: 365 | Media: 9 | Statuses: 51

Joined October 2018

I am thrilled to share that I successfully defended my PhD thesis! 🎓 The past few years have been an amazing and intense journey, and I could not be happier with how everything came together. However, this milestone would have been impossible without the incredible support from

Just graduated PhD student #45 ... Niklas Wilhelm Funk did an outstanding job defending a doctoral dissertation on Learning Robotic Manipulation through Vision, Touch, and Spatially Grounded Representations! Major insights on many different aspects of manipulation...

Join us tomorrow June 12th at 8:00am for the fifth #CVPR2025 Workshop on Event-based Vision! Where: 4th level, Grand Ballroom C2, Website: https://t.co/7Gp8nPUyhu Schedule:

Just arrived at #ICRA2025 to present 2 works on tactile manipulation: 1) Evetac: An Event-based Optical Tactile Sensor for Robotic Manipulation On Tue 11:15 - Room 304 ( https://t.co/LY1DY73jyt) 2) On the Importance of Tactile Sensing for Imitation Learning: A Case Study on

sites.google.com

Evetac: An Event-based Optical Tactile Sensor for Robotic Manipulation Niklas Funk, Erik Helmut, Georgia Chalvatzaki, Roberto Calandra, Jan Peters Accepted at the IEEE Transactions on Robotics (T-RO)...

I am excited to share that our work "Evetac: An Event-based Optical Tactile Sensor for Robotic Manipulation" got accepted for publication in the IEEE Transactions on Robotics (T-RO). @ias_tudarmstadt @lasr_lab @TactileInternet @SECAI_School @RCalandra @GeorgiaChal @Jan_R_Peters

Super excited to share our work on force estimation for the @gelsight mini optical tactile sensor! 🚀 We introduce FEATS—Finite Element Analysis (FEA) for Tactile Sensing—a U-net that predicts 3D force distributions from tactile images leveraging FEA. Why does it matter? 👇

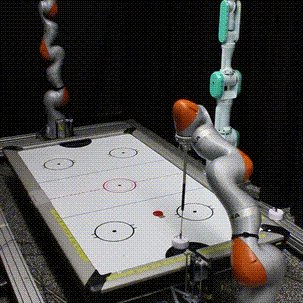

I was very happy to present the retrospective on our 2023 Robot Air Hockey Challenge at @NeurIPSConf 2024. Thank you very much to @Huawei for sponsoring our competition, and stay tuned for future editions!

Join us at @corl_conf in the afternoon for an inspiring lineup of talks and posters on robotic assembly! Check out how manufacturing and construction are the perfect arenas for roboticists to tackle complex manipulation challenges and drive real-world impact.

I am excited to share our recent work on "ActionFlow: Equivariant, Accurate, and Efficient Policies with Spatially Symmetric Flow Matching". The work presents a novel policy class combining Flow Matching with SE(3) Invariant Transformers for fast, equivariant, and expressive

Our competition retrospective on the Robot Air Hockey Challenge 2023 has been accepted to the Datasets and Benchmarks track at @NeurIPSConf 2024! See you at the conference, but in the meantime, you can take a look at the videos here: https://t.co/oEvd8RxFEX

air-hockey-challenge.robot-learning.net

News [2025-08-15] The Qualifying Stage has begun! [2025-09-15] The deadline for the Qualifying Stage has been extended by one week! The new deadline is September 21st, 2025 AoE.

Researchers from @ias_tudarmstadt and @lasr_lab present a novel, open-source event-based optical #tactilesensor called Evetac. By applying this sensor, the researchers are able to create fast and robust closed-loop grasp controllers for manipulation. https://t.co/R6ejt8mPME

YouTube is a LARGE dataset of demonstration videos to train Generalist robot agents, but lacks action data. How can we learn DEXTEROUS skills from them? In #CoRL2024, we explore the problem of learning a Generalist Piano Playing agent from YouTube videos. https://t.co/nRRy3hdqkL

We believe that fast, bandwidth-efficient event-based tactile sensors like Evetac will be essential for bringing human-like manipulation capabilities to robotics. Check out https://t.co/LY1DY73Ro1 for the link to the paper, design files, and software interface.

Evetac's output and our touch processing algorithms also provide meaningful features for learning data-driven slip detection and prediction models. The models form the basis for robust and adaptive closed-loop grasp controllers capable of handling a wide range of objects.

In benchmarking experiments, we demonstrate that Evetac can sense vibrations up to 498 Hz, reconstruct shear forces, and significantly reduce data rates compared to RGB optical tactile sensors like GelSight Mini.

The work introduces a novel, open-source event-based optical tactile sensor called Evetac. The sensor is essentially an event-based version of standard RGB optical tactile sensors. However, the camera replacement allows for obtaining and processing touch measurements at 1000 Hz.

Today at #NeurIPS23 I’ll give a talk about efficient real-world RL for locomotion, manipulation, and navigation at the Robot Air Hockey Challenge (Rm 353, 10:45 am CT), summarizing how far efficient deep RL has come over the past 5 years:

We're excited to present several works at #NeurIPS2023 this week, ranging from approximate inference to tactile sensing! Check out the thread below for more info 👇

We are super excited to share the #air_hockey_challenge competition between @alr_lab_kit and @Idiap_ch on real robots! Join our #NeurIPS2023 workshop on Dec. 15 to learn more: https://t.co/NAYLqy2Ikt Full game: https://t.co/RPlZu15rDl

Looking forward to presenting our work on "Placing by Touching" at IROS tomorrow. In short, we exploit tactile sensing for precise and stable object placing. It is also nominated for the Best Paper Award on Mobile Manipulation. Happening at 9:12 in room 252AB (Tactile Sensing I).

Placing by Touching: An empirical study on the importance of tactile sensing for precise object placing Luca Lach, @n_w_funk, Robert Haschke, Severin Lemaignan, Helge Joachim Ritter, @Jan_R_Peters & @GeorgiaChal Website: https://t.co/mlwhLaiuML [4/5]
