Michael Kirchhof

@mkirchhof_

2K Followers · 1K Following · 54 Media · 353 Statuses

Research Scientist at @Apple for uncertainty quantification.

Paris

Joined June 2022

Can LLMs access and describe their own internal distributions? With my colleagues at Apple, I invite you to take a leap forward and make LLM uncertainty quantification what it can be. 📄 https://t.co/uhoCJfPdZK 💻 https://t.co/pQY1DfaKtS 🧵1/9

Our research team is hiring PhD interns 🍏 Spend your next summer in Paris and explore the next frontiers of LLMs for uncertainty quantification, calibration, RL and post-training, and Bayesian experimental design. Details & Application ➡️

jobs.apple.com: Internship - Machine Learning Research on Uncertainty

We rethink how and why LLMs are calibrated: Not just on token-level, but on answer-level 👇

LLMs are notorious for "hallucinating": producing confident-sounding answers that are entirely wrong. But with the right definitions, we can extract a semantic notion of "confidence" from LLMs, and this confidence turns out to be calibrated out-of-the-box in many settings (!)

📢 We’re looking for a researcher in cogsci, neuroscience, linguistics, or related disciplines to work with us at Apple Machine Learning Research! We're hiring for a one-year interdisciplinary AIML Resident to work on understanding reasoning and decision making in LLMs. 🧵

🚀New Paper https://t.co/KB2hZljDHu We conduct a systematic data-centric study for speech-language pretraining, to improve end-to-end spoken-QA! 🎙️🤖 Using our data-centric insights, we pretrain a 3.8B SpeechLM (called SpeLangy) outperforming 3x larger models! 🧵👇

ICLR reviewers: Be the change you want to see in the world. Don't reproduce violence :)

If you are excited about Multimodal and Agentic Reasoning with Foundation Models, Apple ML Research has openings for Researchers, Engineers, and Interns in this area. Consider applying through the links below or feel free to send a message for more information. - Machine

jobs.apple.com: AIML - Machine Learning Researcher, MLR

🚀 Come work with me in the Machine Learning Research team at Apple! I’m looking for FT research scientists with a strong track record of impactful publications on generative modeling (NeurIPS, ICML, ICLR, CVPR, ICCV, etc.) to join my team and work on fundamental generative modeling

jobs.apple.com: AIML - Machine Learning Researcher, MLR

The Foundation Model Team @🍎Apple AI/ML is looking for a Research Intern (flexible start date) to work on Multimodal LLMs and Vision-Language. Interested? DM me to learn more!

📣We have PhD research internship positions available at Apple MLR. DM me your brief research background, resume, and availability (earliest start date and latest end date) if interested in the topics below.

Introducing Pretraining with Hierarchical Memories: Separating Knowledge & Reasoning for On-Device LLM Deployment 💡We propose dividing LLM parameters into 1) anchor (always used, capturing commonsense) and 2) memory bank (selected per query, capturing world knowledge). [1/X]🧵

We use latent diffusion to model CoT as continuous semantics without committing to actual tokens. The thinking process is implicit and can be iteratively refined thanks to the diffusion, which allows for test time scaling.

🧵1/ Latent diffusion shines in image generation for its abstraction, iterative-refinement, and parallel exploration. Yet, applying it to text reasoning is hard — language is discrete. 💡 Our work LaDiR (Latent Diffusion Reasoner) makes it possible — using VAE + block-wise

LLMs are currently this one big parameter block that stores all sorts of facts. In our new preprint, we add context-specific memory parameters to the model, and pretrain the model along with a big bank of memories. 📑 https://t.co/xTNn2rNTK5 Thread 👇

arxiv.org

The impressive performance gains of modern language models currently rely on scaling parameters: larger models store more world knowledge and reason better. Yet compressing all world knowledge...

We’re excited to share our new paper: Continuously-Augmented Discrete Diffusion (CADD) — a simple yet effective way to bridge discrete and continuous diffusion models on discrete data, such as language modeling. [1/n] Paper: https://t.co/fQ8qxx4Pge

Super excited to share what @stephenz_y and I’ve been up to during our internship at 🍎: Using optimal transport makes flows straighter and generation faster in flow matching, but small-batch OT is biased and large-batch OT is slow. What to do? Use semidiscrete OT! 🧵

The story repeats itself. We find that xLSTM performs better than Transformers on moderate context lengths of e.g. 8k (see picture). Moreover, xLSTM handles longer contexts even better: its benefit over Transformers grows with context length, both for training and inference.

But it does not seem impossible. Releasing this benchmark (+ code) to let you take a shot at this new avenue for uncertainty communication. This is a missing building block for agentic reasoning in uncertain environments, user trust, and conformal calibration. Let’s solve it :)

Second, we attempted to hill-climb on the benchmark. We already knew that reasoning and CoT can’t do it; now we’ve tried explicit SFT/DPO. Result: LLMs can get the format right, but what they output is not what they are actually uncertain about, information-theoretically.

Since its initial release, we haven’t stopped cooking: First, we continued validating whether the scores that the SelfReflect benchmark assigns are robust signals of quality. Across more LLMs and datasets, it works. I have more confidence in the benchmark than ever.

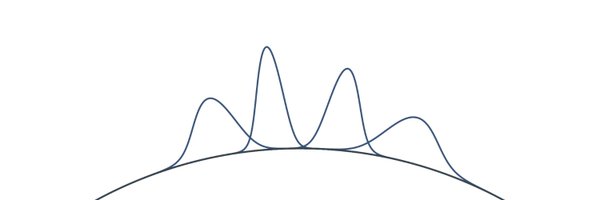

Many treat uncertainty = a number. At Apple, we're rethinking this: LLMs should output strings that reveal all information of their internal distributions. We find that reasoning, SFT, and CoT can't do it yet. To get there, we introduce the SelfReflect benchmark. https://t.co/uhoCJfPdZK

🤔 Ever thought a small teacher could train a student 6× larger that sets new SOTA in training efficiency and frozen evaluation performance for video representation learning? 🤔 Do we really need complex EMA-based self-distillation to prevent collapse, bringing unstable loss

🚨 Machine Learning Research Internship opportunity in Apple MLR! We are looking for a PhD research intern with a strong interest in world modeling, planning or learning video representations for planning and/or reasoning. If interested, apply by sending an email to me at