Max Kleiman-Weiner

@maxhkw

Followers

5K

Following

11K

Media

119

Statuses

897

professor @UW computational cognitive scientist working on social minds and machines. cofounder @CSM_ai. priors: PhD @MIT founder @diffeo (acquired)

Seattle, WA

Joined April 2011

🗺️ We started writing "AI Influence: Mechanisms, Amplifiers, and Consequences" at the start of 2025, when things weren't so out of hand. Later events - things like AI psychosis, coding agents - confirmed many of our worries. Check out our survey paper, and let's figure out a

1

5

10

Forget modeling every belief and goal! What if we represented people as following simple scripts instead (i.e "cross the crosswalk")? Our new paper shows AI which models others’ minds as Python code 💻 can quickly and accurately predict human behavior! https://t.co/1t2fsW7jyL🧵

4

33

100

Great work led by @kjha02 and collaborators: @aydan_huang265, @EricYe29011995, @natashajaques! See his explainer thread: https://t.co/5KpFSfyeXW arXiv:

Forget modeling every belief and goal! What if we represented people as following simple scripts instead (i.e "cross the crosswalk")? Our new paper shows AI which models others’ minds as Python code 💻 can quickly and accurately predict human behavior! https://t.co/1t2fsW7jyL🧵

0

0

2

New paper challenges how we think about Theory of Mind. What if we model others as executing simple behavioral scripts rather than reasoning about complex mental states? ROTE (Representing Others' Trajectories as Executables) treats behavior prediction as program synthesis.

4

1

10

Great work led by @uilydna and collaborators: @GhateKshitish, @MonaDiab77, @dan_fried, @Dr_Atoosa arXiv:

arxiv.org

Past work seeks to align large language model (LLM)-based assistants with a target set of values, but such assistants are frequently forced to make tradeoffs between values when deployed. In...

0

1

1

When values collide, what do LLMs choose? In our new paper, "Generative Value Conflicts Reveal LLM Priorities," we generate value conflicts and find that models prioritize "protective" values in multiple-choice, but shift toward "personal" values when interacting.

🚨New Paper: LLM developers aim to align models with values like helpfulness or harmlessness. But when these conflict, which values do models choose to support? We introduce ConflictScope, a fully-automated evaluation pipeline that reveals how models rank values under conflict.

3

0

7

Interesting work. Measuring intelligence without reference to specific goals is important (and relevant to alignment), and empowerment is one method. I have previously discussed theoretical intelligence measures by convergent instrumental achievements: https://t.co/tJYmOw4QD9

lesswrong.com

It is analytically useful to define intelligence in the context of AGI. One intuitive notion is epistemology: an agent's intelligence is how good its…

#1. New paper alert!🚀 How do we evaluate LM agents today? Mostly benchmarks. But: (1) good benchmarks are costly + labor-intensive, and (2) they target narrow end goals, missing unintended capabilities. In our recent paper, we propose a goal-agnostic alternative for evaluating

0

1

15

#1. New paper alert!🚀 How do we evaluate LM agents today? Mostly benchmarks. But: (1) good benchmarks are costly + labor-intensive, and (2) they target narrow end goals, missing unintended capabilities. In our recent paper, we propose a goal-agnostic alternative for evaluating

1

4

26

Excited by our new work estimating the empowerment of LLM-based agents in text and code. Empowerment is the causal influence an agent has over its environment and measures an agent's capabilities without requiring knowledge of its goals or intentions. Led by @jinyeop_song! 🧵👇

#1. New paper alert!🚀 How do we evaluate LM agents today? Mostly benchmarks. But: (1) good benchmarks are costly + labor-intensive, and (2) they target narrow end goals, missing unintended capabilities. In our recent paper, we propose a goal-agnostic alternative for evaluating

1

0

6

Mentors include: @divyasiddarth (@collect_intel), @conitzer (@UniofOxford, @CarnegieMellon), @bakkermichiel (@MIT, @GoogleDeepMind), @sahar_abdelnabi (@Microsoft, @MPI_IS, @ELLISInst_Tue), @jzl86 (@GoogleDeepMind), @ZhijingJin (@UofT, Max Planck), @lrhammond (@coop_ai,

1

2

6

Could humans and AI become a new evolutionary individual?

pnas.org

Could humans and AI become a new evolutionary individual?

0

0

4

Excited to work with the Cooperative AI Foundation on multi-agent safety, mitigating gradual disempowerment, and AI for human cooperation! Consider applying for this 3 month research fellowship ⬇️

🌍 Join a cohort of ambitious researchers in Cape Town for a cooperative AI research fellowship Spend 3 months researching the biggest problems in cooperative AI, with world-class mentorship from Google DeepMind, Oxford, and MIT researchers. See comments for details!

0

0

9

CSM Cube delivers industry-leading model quality, topology, parts, and AI re-topology, providing a significant advantage for workflows from quick prototypes to full production.

38

88

918

#Workshop Generative AI & Theory of Mind in Communicating Agents #InvitedTalk 🗣️Joyce Y. Chai @UMich 🗣️Tomer Ullman @TomerUllman @Harvard 🗣️Vered Swartz @UBC & @VectorInst 🗣️Max Kleiman-Weiner @maxhkw @UW 🗣️Pei Zhou @peizNLP @Microsoft

#IJCAI2025

https://t.co/YLGuc3sKYK

1

2

4

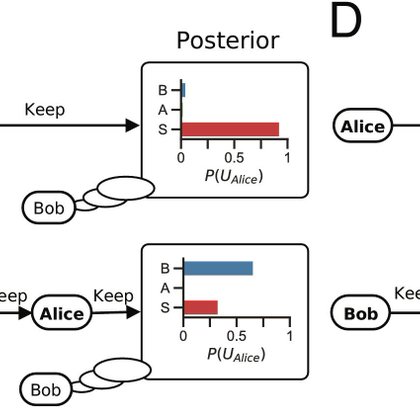

Quantifying the cooperative advantage shows why humans, the most sophisticated cooperators, also have the most sophisticated machinery for understanding the minds of others and offers principles for building more cooperative AI systems. Full paper:

pnas.org

Theories of the evolution of cooperation through reciprocity explain how unrelated self-interested individuals can accomplish more together than th...

1

0

6