marcel ⊙

@marceldotsci

Followers

468

Following

1K

Media

57

Statuses

1K

Trying to build better machine-learning surrogate models for DFT. Postdoc @lab_cosmo 👨🏻‍🚀.

Lausanne, Switzerland

Joined November 2019

Our (@spozdn & @MicheleCeriotti) recent work on testing the impacts of using approximate, rather than exact, rotational invariance in a machine-learning interatomic potential of water has been published by @MLSTjournal! We find that, for bulk water, approximate invariance is ok.

Great new work by @marceldotsci @spozdn @MicheleCeriotti @lab_COSMO @nccr_marvel @EPFL_en - 'Probing the effects of broken symmetries in #machinelearning' - #compchem #materials #statphys #molecules #compphys #simulation #atomistic


happy to report that our paper is accepted for an oral presentation at ICML. amazing work by Filippo, who will present it in vancouver! final version, with some extra content, here:

openreview.net

The use of machine learning to estimate the energy of a group of atoms, and the forces that drive them to more stable configurations, have revolutionized the fields of computational chemistry and...

new work! we follow up on the topic of testing which physical priors matter in practice. this time, it seems that predicting non-conservative forces, which gives a 2x-3x speedup, leads to serious problems in simulation. we run some tests and discuss mitigations!


this is rather sick.

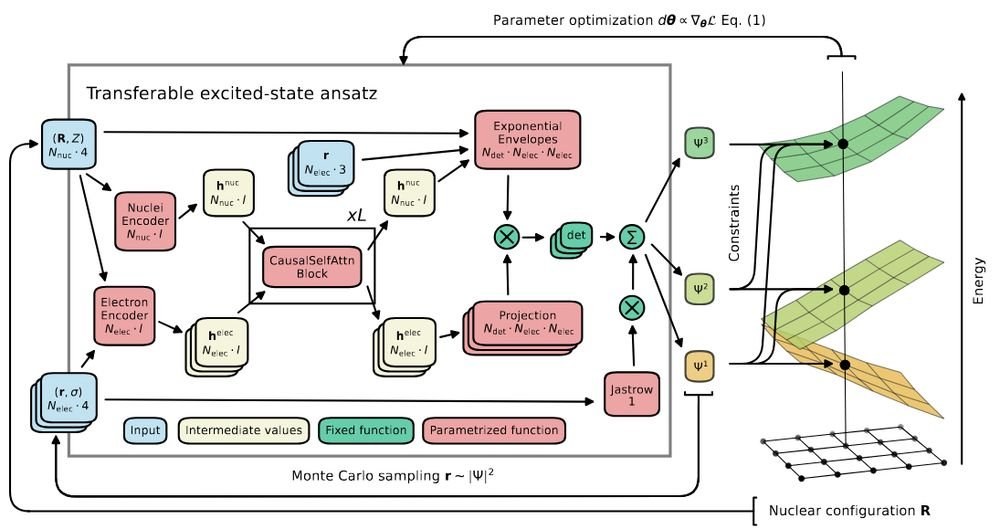

I am truly excited to share our latest work with @MScherbela, @GrohsPhilipp, and @guennemann on "Accurate Ab-initio Neural-network Solutions to Large-Scale Electronic Structure Problems"!


👀

Excited to announce Orb-v3, a new family of universal Neural Network Potentials from me and my team at @OrbMaterials, led by Ben Rhodes and @sanderhaute! These new potentials span the Pareto frontier of models for computational chemistry.


RT @lab_COSMO: 🤫 you can get a better universal #machinelearning potential by training on fewer than 100k structures. too good to be true?…


can stefan be stopped? it’s unclear. congrats!

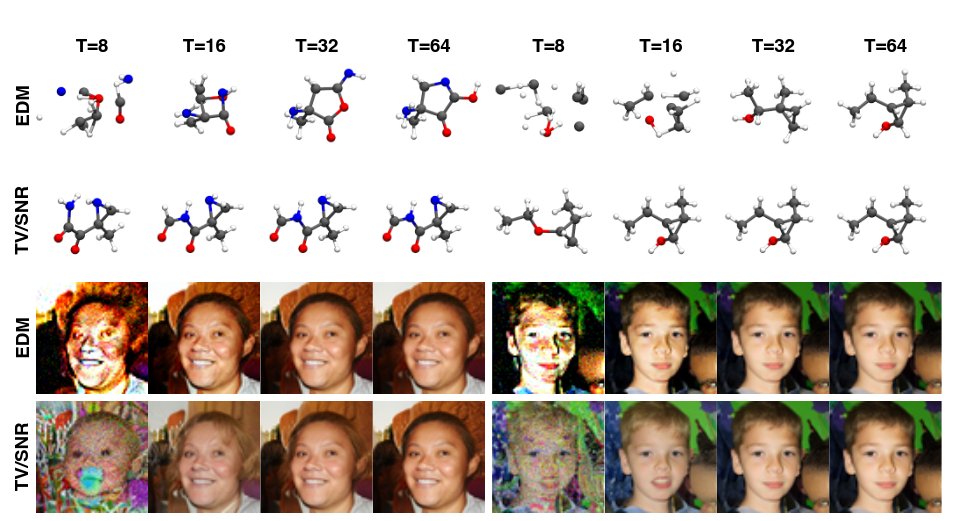

We have a new paper on diffusion! 📄 Faster diffusion models with total variance/signal-to-noise ratio disentanglement! ⚡️ Our new work shows how to generate stable molecules in sometimes as few as 8 steps and match EDM’s image quality with a uniform time grid. 🧵


👀

We just shared our latest work with @CecClementi and @FrankNoeBerlin! RANGE addresses the "short-sightedness" of MPNNs via virtual aggregations, boosting the accuracy of MLFFs at long-range with linear time-scaling. Next-gen force-fields are here! 🧠🚀


we're having an interesting seminar next tuesday on equivariance in deep ensembles, for the EPFL squad:

actu.epfl.ch

Join us for a seminar by Jan Gerken on Tuesday, February 4th, at 10:30 AM in MED 2 1124 (Coviz2). Jan is an Assistant Professor in the Division of Algebra and Geometry at Chalmers University of...


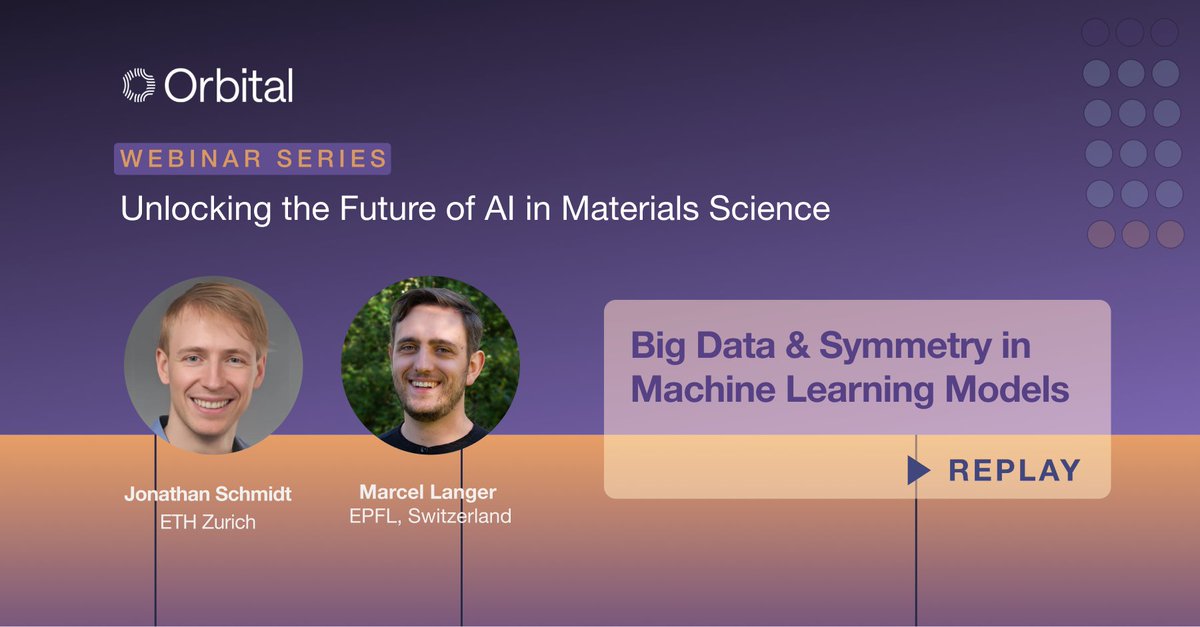

the recording of the talk i gave a while back about the "invariance is easy to learn" paper with @spozdn and @MicheleCeriotti is out!

We hosted a webinar series all about unlocking the future of #AI in materials science, and we’ve added the recordings to YouTube for anyone who wasn’t able to attend. Check out episode 1 here, hosted by @marceldotsci and Jonathan Schmidt:
