Shuiwang Ji

@ShuiwangJi

Followers: 3K · Following: 6K · Media: 14 · Statuses: 2K

Machine Learning, AI for Science, Professor and Truchard Family Chair, Texas A&M University

College Station, TX

Joined June 2012

RT @ShuruiGui: Any interest in extracting invariant functions or physical laws from complex environments? Check out our new ICML paper “Discov…

arxiv.org

We consider learning underlying laws of dynamical systems governed by ordinary differential equations (ODE). A key challenge is how to discover intrinsic dynamics across multiple environments...

5. More details can be found in the preprints: PubChemQCR, TDN, and MLIP Foundation Model.

arxiv.org

Accurate molecular property predictions require 3D geometries, which are typically obtained using expensive methods such as density functional theory (DFT). Here, we attempt to obtain molecular...

RT @KeqiangY: Excited to share our latest preprint on LLM Agents, beyond tool use, for Materials Discovery. 🎉 ✨Unleash the discovery power…

RT @tdietterich: @tallinzen @F_Vaggi Teaches American kids the skills they need to cure diseases or start companies. She embodies the Ameri…

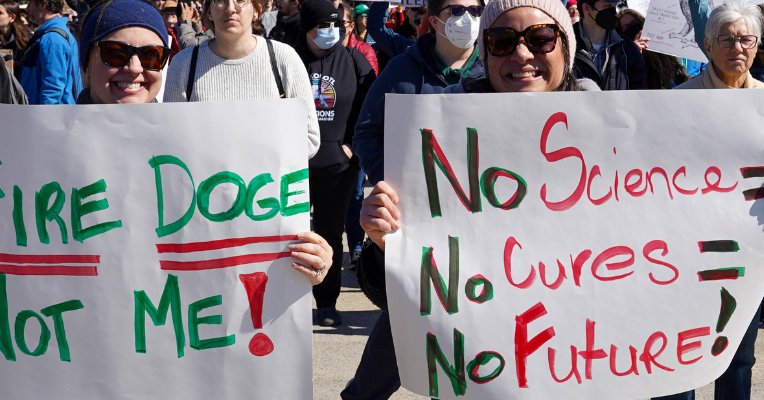

Science is under siege. Trump’s latest Executive Order calls for politically appointed science commissars to evaluate research. Join me in adding your name to our open letter condemning Trump’s escalated assault on science in America. Sign here:

actionnetwork.org

Science is under siege. Join us in adding your name to our open letter condemning Trump’s escalated assault on science in America.

RT @_vztu: 🚀 Generative AI is the Next Quantum Leap for Autonomous Driving: Here’s the Hitchhiker's Guide You’ve Been Waiting For! 🚀 We're…

RT @GalantiTomer: I really enjoyed the RAISE Workshop at @TAMU today and sharing my work! Huge thanks to Prof. @Shu…

We have 5 papers accepted to ICML. I am especially happy for Monte, an undergraduate student who is the primary author of the second paper; his first was an ICLR spotlight. @icmlconf @MBohde21912