Muhammad Ahmed Chaudhry

@MAhmed_Ch

Followers: 40 · Following: 221 · Media: 0 · Statuses: 26

ML Scientist @PayPal, Computer Vision researcher @StanfordAILab, @pomonacollege’19

Palo Alto, CA

Joined June 2023

We use LLMs for everyday tasks—research, writing, coding, decision-making. They remember our conversations, adapt to our needs and preferences. Naturally, we trust them more with repeated use. But this growing trust might be masking a hidden risk: what if their beliefs are

On #WorldQuantumDay, we highlight our research on the Vendi Score, which leverages the von Neumann entropy of quantum mechanics to tackle challenges in AI/ML and the natural sciences 🧵🧵🧵👇 1/n

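For readers new to the Vendi Score, here is a sketch of its standard definition (our summary, not part of the thread). Take n items x_1 through x_n and a positive semidefinite similarity kernel k with k(x, x) = 1. The score is the exponential of the von Neumann entropy of the normalized kernel matrix, which equals the exponential of the Shannon entropy of that matrix's eigenvalues. The two limiting cases show why it reads as an "effective number of distinct items".

```latex
K_{ij} = k(x_i, x_j), \qquad K \in \mathbb{R}^{n \times n}, \qquad k(x, x) = 1

\mathrm{VS}(x_1, \dots, x_n)
  = \exp\!\Big(-\sum_{i=1}^{n} \lambda_i \log \lambda_i\Big)
  = \exp\!\Big(-\operatorname{tr}\big(\tfrac{K}{n} \log \tfrac{K}{n}\big)\Big),
  \quad \lambda_i = \text{eigenvalues of } K/n,\; 0 \log 0 := 0

\text{all items identical: } K = \mathbf{1}\mathbf{1}^{\top}
  \Rightarrow \lambda = (1, 0, \dots, 0) \Rightarrow \mathrm{VS} = 1

\text{all items mutually dissimilar: } K = I_n
  \Rightarrow \lambda_i = 1/n \Rightarrow \mathrm{VS} = \exp(\log n) = n
```

Because K/n is positive semidefinite with trace 1, its eigenvalues form a probability distribution, so the entropy above is well defined.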

So with all the advances in robotics and AI, when will Roombas stop “falling” into “ditches”? Asking for a friend 😅

Here’s an easy trick for improving the performance of gradient-boosted decision trees like XGBoost, allowing them to read text column headers and benefit from massive pretraining: replace the first tree with an LLM or TabPFN! 🧵 1/9

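A minimal sketch of the "replace the first tree" idea, assuming squared-error loss. The pretrained model's predictions become stage 0 of boosting, and later trees fit only the remaining residuals. This is our reading of the trick, not the thread's code. It uses pure-Python depth-1 stumps instead of XGBoost, and `prior_predict`, `fit_stump`, and `boost_from_prior` are hypothetical names. `prior_predict` stands in for an LLM or TabPFN. In XGBoost itself, a comparable starting point is usually supplied through the `base_margin` argument of `xgboost.DMatrix`.

```python
from typing import Callable, List, Sequence

Row = Sequence[float]
Model = Callable[[Row], float]


def fit_stump(X: List[Row], residuals: List[float]) -> Model:
    """Fit a depth-1 regression tree (stump) to residuals by minimizing squared error."""
    best = None  # (sse, feature, threshold, left_mean, right_mean)
    for j in range(len(X[0])):
        for t in sorted({row[j] for row in X}):
            left = [r for row, r in zip(X, residuals) if row[j] <= t]
            right = [r for row, r in zip(X, residuals) if row[j] > t]
            if not left or not right:
                continue  # this threshold does not split the data
            lm, rm = sum(left) / len(left), sum(right) / len(right)
            sse = sum((r - lm) ** 2 for r in left) + sum((r - rm) ** 2 for r in right)
            if best is None or sse < best[0]:
                best = (sse, j, t, lm, rm)
    if best is None:
        # No valid split (all rows identical): fall back to a constant update.
        mean = sum(residuals) / len(residuals)
        return lambda row: mean
    _, j, t, lm, rm = best
    return lambda row: lm if row[j] <= t else rm


def boost_from_prior(
    X: List[Row],
    y: List[float],
    prior_predict: Model,  # hypothetical stand-in for an LLM or TabPFN
    n_rounds: int = 20,
    learning_rate: float = 0.3,
) -> Model:
    """Squared-loss gradient boosting whose stage 0 is a pretrained model's prediction."""
    # Stage 0: the prior model's outputs replace the usual constant / first tree.
    pred = [prior_predict(row) for row in X]
    stumps: List[Model] = []
    for _ in range(n_rounds):
        # For squared loss, the negative gradient is simply the residual.
        residuals = [yi - pi for yi, pi in zip(y, pred)]
        stump = fit_stump(X, residuals)
        stumps.append(stump)
        pred = [p + learning_rate * stump(row) for p, row in zip(pred, X)]

    def predict(row: Row) -> float:
        return prior_predict(row) + learning_rate * sum(s(row) for s in stumps)

    return predict
```

Consider a toy target made of a linear term plus a step, where the stand-in prior captures only the linear part. The stumps learn the step, so training error falls well below the prior's. Starting from the prior rather than from a constant is what lets boosting inherit whatever the pretrained model already knows.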

Our updated Responsible Foundation Model Development Cheatsheet (250+ tools & resources) is now officially accepted to @TmlrOrg 2025! It covers:
- data sourcing
- documentation
- environmental impact
- risk eval
- model release & licensing

Today, we are publishing the first-ever International AI Safety Report, backed by 30 countries and the OECD, UN, and EU. It summarises the state of the science on AI capabilities and risks, and how to mitigate those risks. 🧵 Link to full Report: https://t.co/k9ggxL7i66 1/16

We have to take the LLMs to school. When you open any textbook, you'll see three major types of information: 1. Background information / exposition. The meat of the textbook that explains concepts. As you attend over it, your brain is training on that data. This is equivalent

For something to be open source, we need to see:
1. Data it was trained & evaluated on
2. Code
3. Model architecture
4. Model weights

DeepSeek only gives 3 and 4. And I'll see the day that anyone gives us #1 without being forced to do so, because all of them are stealing data.

🚀Excited to share our latest paper, *ResearchTown*, a framework for research community simulation using LLMs and graphs 🌟We hope this marks a new step toward automated research 🧠Paper: https://t.co/xz18Kpc6q5 💻Code: https://t.co/y1Dn9A0LQZ 🤗Dataset:

🚀 Excited about automatic research? What if we can combine graphs and LLMs to simulate our interconnected human research community? ✨ Check our latest paper ResearchTown: Simulator of Human Research Community ( https://t.co/x6rj3oinDU)

#AI #LLM #AutoResearch #MultiAgent #Graph

Everything you love about generative models — now powered by real physics! Announcing the Genesis project — after a 24-month large-scale research collaboration involving over 20 research labs — a generative physics engine able to generate 4D dynamical worlds powered by a physics

New research reveals a worrying trend: AI's data practices risk concentrating power overwhelmingly in the hands of dominant technology companies. I spoke w/@ShayneRedford @sarahookr @sarahbmyers @GiadaPistilli about what this says about the state of AI

technologyreview.com

New findings show how the sources of data are concentrating power in the hands of the most powerful tech companies.

Announcing the NeurIPS 2024 Test of Time Paper Awards: https://t.co/Wyhx63A2Ek

Just finished my first-ever paper review (NeurIPS workshop). Revelatory experience! It will certainly help me become a better researcher myself. I tried to review the work the way I would want my own to be reviewed: critically yet constructively! #NeurIPS #MachineLearning

0

0

0

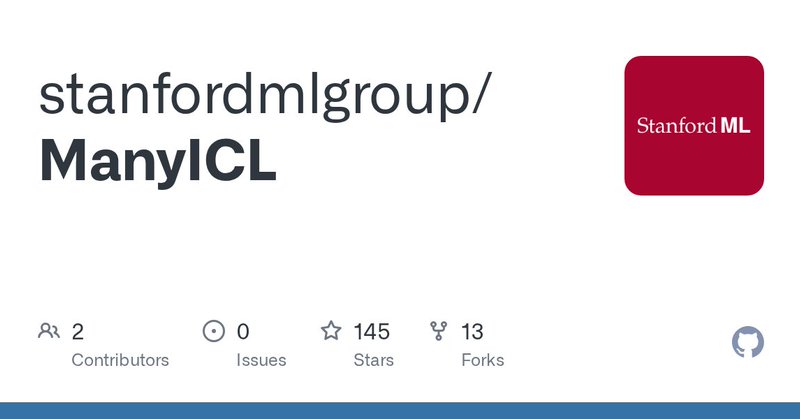

Thank you for sharing our work @_akhaliq!! Further details about our work and results here: https://t.co/JTfQiOR2dN Would love to hear everyone’s thoughts! And we’ve tried hard to create an easy-to-use code repository here. Try it out yourself: https://t.co/nCswyxyrv9 🚀

github.com: stanfordmlgroup/ManyICL

Many-Shot In-Context Learning in Multimodal Foundation Models Large language models are well-known to be effective at few-shot in-context learning (ICL). Recent advancements in multimodal foundation models have enabled unprecedentedly long context windows, presenting an

A pleasure and honor to work with such an incredible group @jyx_su, @jeremy_irvin16, @ji_hun_wang on this paper, supervised by @jonc101x and @AndrewYNg! Would love to hear thoughts and feedback from everyone 🤗

Work led by @jyx_su together with @ji_hun_wang @mahmed_ch supervised by @jonc101x and @AndrewYNg in @StanfordAILab. Read more here:

Want to unlock the full potential of GPT-4o & Gemini 1.5 Pro? Give them **many** demonstrations and batch your queries! Our new work w/ @AndrewYNg shows these models can benefit substantially from lots of demo examples and asking many questions at once! https://t.co/SeAqBRFyy7

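A minimal sketch of the prompting recipe described above: pack many demonstrations into one prompt, then batch several queries into the same request. It assumes a text-only setting for brevity, whereas the paper's demonstrations are multimodal (e.g. image inputs). The prompt wording, the `Q<k>:` answer format, and both function names are hypothetical and not taken from the ManyICL repository. Calling an actual model is left out.

```python
from typing import List, Optional, Sequence, Tuple


def build_many_shot_batched_prompt(
    demos: Sequence[Tuple[str, str]],
    queries: Sequence[str],
    instruction: str,
) -> str:
    """Pack many (input, label) demonstrations and several queries into one prompt."""
    lines = [instruction, ""]
    for i, (x, label) in enumerate(demos, start=1):
        lines.append(f"Example {i}: {x}")
        lines.append(f"Answer {i}: {label}")
    lines.append("")
    # Batch all queries into the same request instead of sending one call per query.
    lines.append(
        f"Answer each of the following {len(queries)} questions on its own line, "
        "in the form 'Q<k>: <answer>'."
    )
    for k, q in enumerate(queries, start=1):
        lines.append(f"Q{k}: {q}")
    return "\n".join(lines)


def parse_batched_answers(response: str, n_queries: int) -> List[Optional[str]]:
    """Map 'Q<k>: <answer>' lines back to query positions; missing answers stay None."""
    answers: List[Optional[str]] = [None] * n_queries
    for line in response.splitlines():
        head, sep, ans = line.strip().partition(":")
        if sep and head.startswith("Q") and head[1:].isdigit():
            k = int(head[1:])
            if 1 <= k <= n_queries:
                answers[k - 1] = ans.strip()
    return answers
```

Batching spreads the cost of the long demonstration context across several queries. The parser tolerates missing or out-of-order answers by leaving those slots as `None`.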