Lucas Torroba-Hennigen

@ltorroba1

Followers

487

Following

903

Media

13

Statuses

148

PhD student at MIT working in NLP.

Cambridge, USA

Joined February 2020

🚨New Paper!🚨 We trained reasoning LLMs to reason about what they don't know. o1-style reasoning training improves accuracy but produces overconfident models that hallucinate more. Meet RLCR: a simple RL method that trains LLMs to reason and reflect on their uncertainty --

13

266

897

We also explore a distributed training setting, where we train with DiLoCo and gradient transformations simultaneously. While there is a penalty for using gradient transformations, having each worker train using a different one helps reduce the gap. This gap is reduced further if

0

0

0

Of course, a key hyperparameter when training with these approaches is the choice of gradient transformation/frozen adapter. We conduct a study with different kinds of transformations and find that randomized transformations often perform well. This is interesting since, in

1

0

0

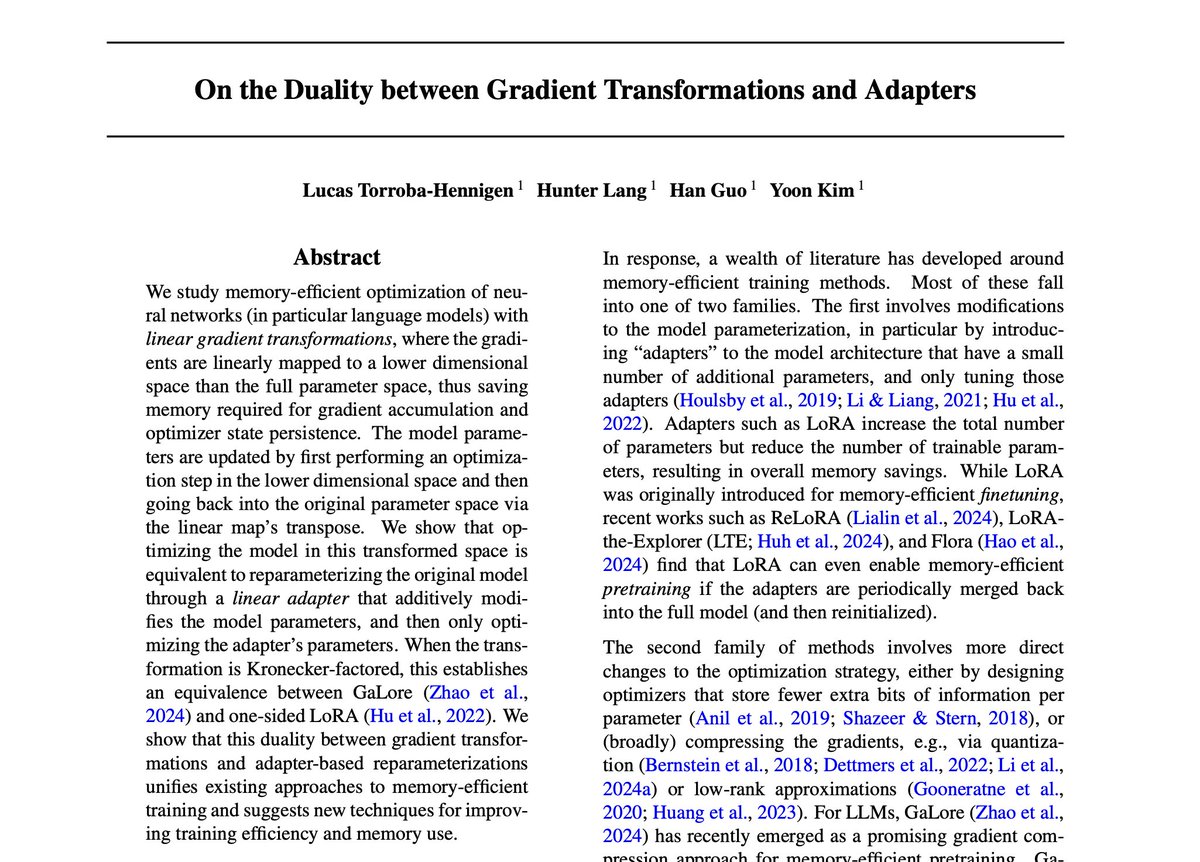

With a Kronecker factorization of the linear map, this correspondence recovers the following identity, which shows that recent PEFT approaches such as MoRA can be seen as performing a “two-sided” GaLore to each matrix. When L (or R) is set to identity, we recover the

1

0

0

We generalize this by working in vectorized parameter space. In particular, we show that there is a duality between training a network with linear gradient transformations (i.e., on every step apply a linear map to the gradient vector, do an optimizer step, and then use its

1

0

0

Prior work (e.g., Flora; https://t.co/oW01mp443k) shows that optimizing a neural network in projected space by applying a linear transformation to the gradient matrix (as in GaLore) is equivalent to training a “one-sided” LoRA where only one adapter matrix is trained.

arxiv.org

Despite large neural networks demonstrating remarkable abilities to complete different tasks, they require excessive memory usage to store the optimization states for training. To alleviate this,...

1

0

0

Previous work has established that training a linear layer with GaLore is the same as training it with a half-frozen LoRA adapter. But how far can we push this equivalence? Read our paper, or come to our poster session at #ICML2025 on Wednesday at 4:30pm, to find out! 📄:

1

3

13

Hello everyone! We are quite a bit late to the twitter party, but welcome to the MIT NLP Group account! follow along for the latest research from our labs as we dive deep into language, learning, and logic 🤖📚🧠

27

52

544

Turn a single pre-trained model’s layers into MoE “experts” and reuse them? Finetuning a “router” slightly cuts loss—cool proof of concept. Can we combine dynamic compute paths/reuse + coconut-like latent reasoning? https://t.co/Y4JHoqJz4u

1

5

22

I have multiple vacancies for PhD and Masters students at @Mila_Quebec @McGill_NLP in NLP/ML focusing on representation learning, reasoning, multimodality and alignment. Deadline for applications is Dec 1st. More details:

mila.quebec

Mila's student researchers can choose from a variety of research programs offered by our partner universities and schools.

1

64

161

I'm excited to announce my new lab: UCSD's Learning Meaning and Natural Language Lab. a.k.a. LeM🍋N Lab! And 📢WE ARE RECRUITING📢 PhD students to join us in sunny San Diego in either Linguistics OR Data Science. Apply by Dec 4: https://t.co/gCYN8eMk4A More about the lab👇

12

76

450

Over the past year I have been working on using multiple specialized models in a collective fashion to solve novel tasks. We investigated Mixture of Experts (MoE) style routing for merging. However, we find that feature based merging is likely not scalable paradigm. Read on!

2

27

99

Scaling inference compute by repeated sampling boosts coverage (% problems solved), but could this be due to lucky guesses, rather than correct reasoning? We show that sometimes, guessing beats repeated sampling 🎲 @_galyo @omerlevy_ @roeeaharoni

https://t.co/UCxi5TadVz

1

12

36

SymGen is featured on MIT News https://t.co/oyb9y79aIv -- please take a look at the great piece by Adam and the editor team!

news.mit.edu

A new system helps human fact-checkers validate the responses generated by a large language model. By speeding validation time by 20 percent, the system could improve manual verification and help...

Super excited to share our new paper! SymGen focuses on an important Human-Al Collaboration problem: It enables easy verification of LLM generations using symbolic references. #HumanAI #NLProc #LLM #MachineLearning #HCI

0

2

13

@ltorroba1 and I will present SymGen at @COLM_conf on Wednesday afternoon! Looking forward to seeing y’all there! I'd love to chat about llm generation attribution & verification and human agent collaboration for scientific discovery! plz dm/email me :)

1

1

13

Bailin is going to be a great mentor, so consider working with him.

I'm looking for a summer'25 intern at Apple AI/ML, New York. Focus: long-context modeling for LLM pretraining Apply link: https://t.co/yl7WTGna9a Please also email me your resume after application.

0

1

6

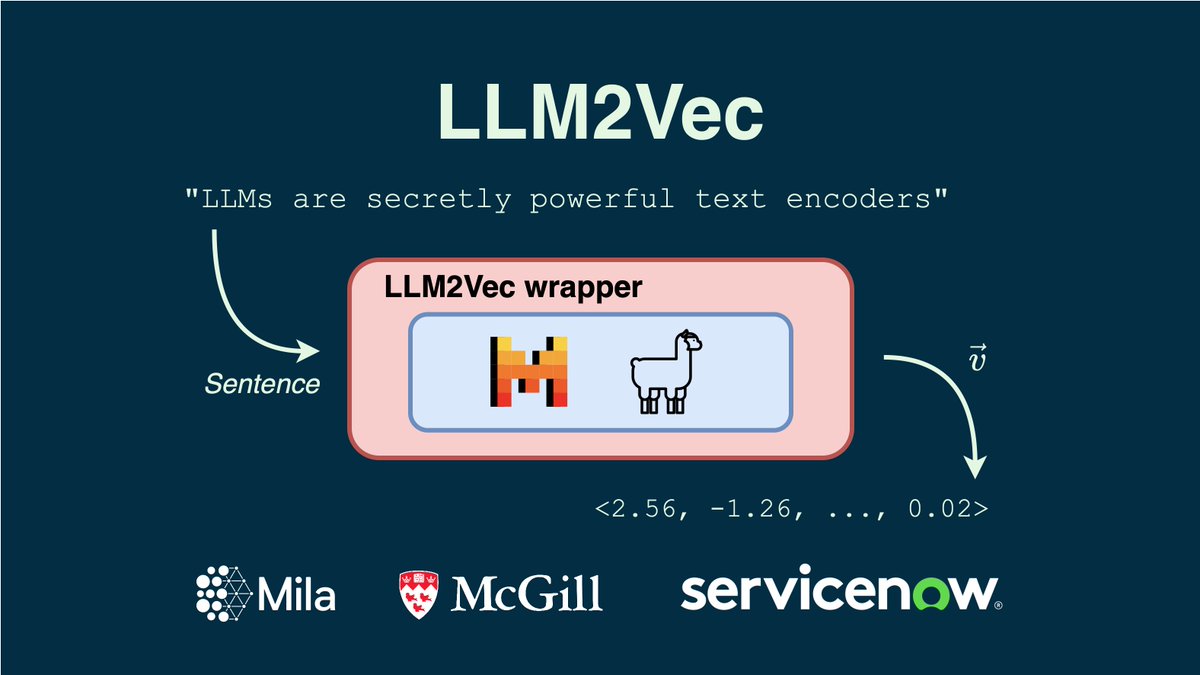

We introduce LLM2Vec, a simple approach to transform any decoder-only LLM into a text encoder. We achieve SOTA performance on MTEB in the unsupervised and supervised category (among the models trained only on publicly available data). 🧵1/N Paper: https://t.co/1ARXK1SWwR

13

168

879

I am still looking for PhD students starting in September 2024! The deadline to apply for the CDT in NLP is the 11th of March. If you wish to do research in modular and efficient LLMs, here are some highlights of my lab's research from the past year ⬇️🧵

Interested in training with future leaders in NLP to engage with the cutting edge of the technical, social, design, and legal aspects of these systems? Then apply for our new Centre for Doctoral Training in Designing Responsible NLP! Deadline 11 March 2024

10

51

149

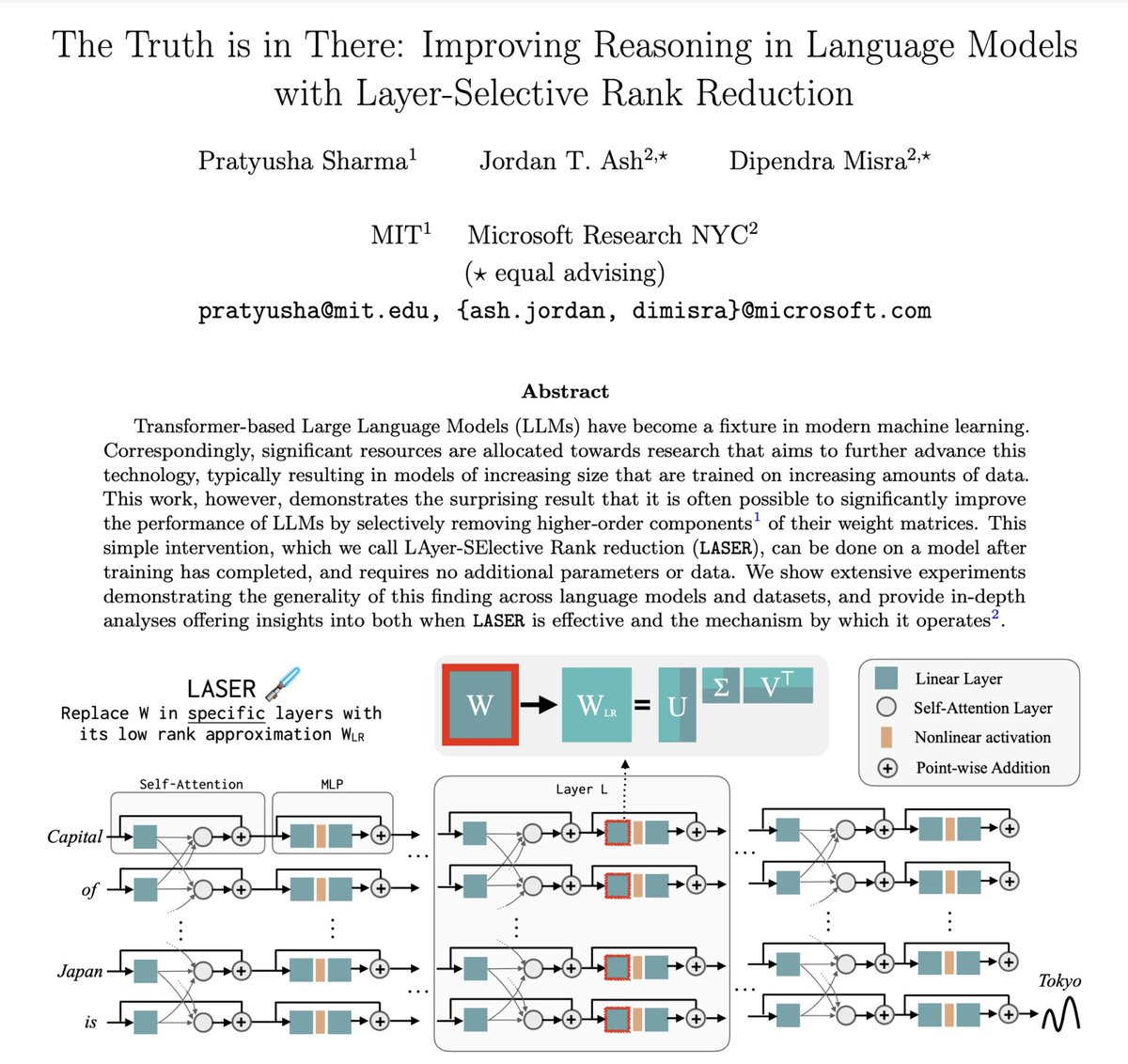

What if I told you that you can simultaneously enhance an LLM's task performance and reduce its size with no additional training? We find selective low-rank reduction of matrices in a transformer can improve its performance on language understanding tasks, at times by 30% pts!🧵

39

278

2K

Data-dependent decay and state dimension expansion are the key for Mamba/GLA matching Transformers!🚀 Also excited to present my NeurIPS spotlight paper [ https://t.co/DeIkqTjqAb] this Wednesday, which also shows the crucial role of data-dependent decay. Come and chat about RNNs!

arxiv.org

Transformers have surpassed RNNs in popularity due to their superior abilities in parallel training and long-term dependency modeling. Recently, there has been a renewed interest in using linear...

Impressed by the performance of Mamba and believe in RNN? We provide a simple alternative solution! Excited to share Gated Linear Attention (GLA-Transformer). (1/n) https://t.co/BGovB5hRM3

1

13

57