Jyo Pari

@jyo_pari

Followers

3K

Following

810

Media

37

Statuses

149

Working on continual learning | PhD @MIT

Boston

Joined December 2021

What if an LLM could update its own weights? Meet SEAL🦭: a framework where LLMs generate their own training data (self-edits) to update their weights in response to new inputs. Self-editing is learned via RL, using the updated model’s downstream performance as reward.

135

529

3K

Next Tuesday, @shannonzshen will present hybrid chain-of-thought, a method that mixes latent and discrete tokens during decoding 🔥 🗓️ Nov 25, 3pm ET @scaleml

1

7

50

Why do deep learning optimizers make progress even in the edge-of-stability regime? 🤔 @alex_damian_ will present theory that can describe the dynamics of optimization in this regime! 🗓️ Nov 17, 3pm ET @scaleml

0

10

72

Everyone’s talking about Kimi K2 Thinking and its impressive performance. No full report yet, but judging from Kimi K2\1.5 reports, it likely uses Policy Mirror Descent - an RL trick that’s quietly becoming standard in frontier labs. Let’s break down what it is:

12

47

478

in our new post, we walk through great prior work from @agarwl_ & the @Alibaba_Qwen team exploring on-policy distillation using an open source recipe: you can run our experiments on Tinker today! https://t.co/7pVk87qTDH i'm especially excited by the use of on-policy

Our latest post explores on-policy distillation, a training approach that unites the error-correcting relevance of RL with the reward density of SFT. When training it for math reasoning and as an internal chat assistant, we find that on-policy distillation can outperform other

13

24

323

Very interest! We could use RLMs for complex reasoning problems where models are solving sub-problems in parallel unlocking a new dimension of scaling!

What if scaling the context windows of frontier LLMs is much easier than it sounds? We’re excited to share our work on Recursive Language Models (RLMs). A new inference strategy where LLMs can decompose and recursively interact with input prompts of seemingly unbounded length,

1

4

30

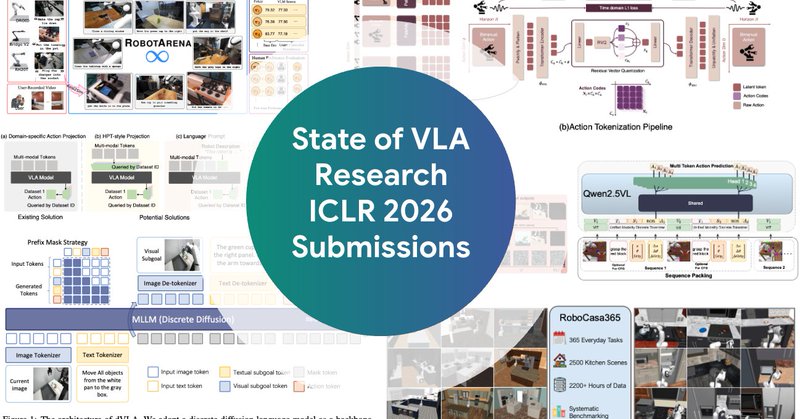

VLAs have become the fastest-growing subfield in robot learning. So where are we now? After reviewing ICLR 2026 submissions and conversations at CoRL, I wrote an overview of the current state of VLA research with some personal takes: https://t.co/OMMdB1MHtS

mbreuss.github.io

Comprehensive analysis of Vision-Language-Action models at ICLR 2026 - discrete diffusion, reasoning VLAs, and benchmark insights.

11

102

534

After weeks of learning about systems at @scaleml, we’re shifting gears to video foundation models. Thrilled to have @cloneofsimo sharing how to train them from scratch next Tuesday — no better person to learn from 🔥

5

11

128

Next Tuesday, @scaleml hosts @kavnwang & Kristine Lu for a tutorial based on https://t.co/XKCnl7lUpy 🚀 They'll cover distributed training/inference of large models, plus the math & tradeoffs of latency, throughput, and model size in GPU comms!

2

14

128

A great read 👏

I wrote this blog post that tries to go further toward design principles for neural nets and optimizers The post presents a visual intro to optimization on normed manifolds and a Muon variant for the manifold of matrices with unit condition number https://t.co/EhhKN2Jylx

0

0

4

This great work co-led by @IdanShenfeld and @jyo_pari shows that online RL leads to less forgetting because it inherently leads to a solution with a small reverse KL divergence! I'll try to discuss the significance of the result: 🧵

For agents to improve over time, they can’t afford to forget what they’ve already mastered. We found that supervised fine-tuning forgets more than RL when training on a new task! Want to find out why? 👇

2

3

33

Very interesting work! When I first learned about it earlier this summer, I became curious about the possible explanations for (catastrophic) forgetting during continual learning in SFT versus RL. It seems they've figured something out now :)

For agents to improve over time, they can’t afford to forget what they’ve already mastered. We found that supervised fine-tuning forgets more than RL when training on a new task! Want to find out why? 👇

0

5

29

@IdanShenfeld and I are deeply grateful to our advisor, @pulkitology, for his guidance and support throughout this project! Paper: https://t.co/bcZydI2mIk Website:

arxiv.org

Comparison of fine-tuning models with reinforcement learning (RL) and supervised fine-tuning (SFT) reveals that, despite similar performance at a new task, RL preserves prior knowledge and...

3

4

43

Finally, two open questions still remain: 1️⃣ Why does less KL divergence evaluated on the new task correspond to less forgetting? 2️⃣ Can we design new fine-tuning methods that combine the simplicity and efficiency of SFT with the implicit bias of RL’s Razor?

5

2

35

In addition to the empirical findings, we were able to provide some theory that reveals why RL has an implicit bias towards minimal KL solutions. The animation shows how alternating projections between optimal policies (green) and the model class (blue) converges to min-KL

2

0

28

Then we ask, why is RL resulting in a smaller KL and therefore less forgetting? Grounded in our empirical results, we introduce the principle we call RL’s Razor 🗡️: Among the many high-reward solutions for a new task, on-policy methods such as RL are inherently biased toward

2

3

46

To understand why, we looked for a variable that would explain forgetting across methods and hyperparameters. After searching, we find that the KL divergence from the base model, evaluated on the new task, is almost a perfect predictor of forgetting!

2

4

44

We fully swept hyperparameters for both methods and plotted the Pareto Frontier. Our finding holds for LLMs, Robotics foundation models, and even 3-layer MLP:

1

0

34

For agents to improve over time, they can’t afford to forget what they’ve already mastered. We found that supervised fine-tuning forgets more than RL when training on a new task! Want to find out why? 👇

20

147

911