Kevin Lu

@kevinlu4588

Followers: 76

Following: 753

Media: 4

Statuses: 21

Diffusion & protein language model Research @ Northeastern (Bau Lab) @ NeurIPS 2025

Joined April 2024

We discovered how to fix diffusion models' diversity issues using interpretability! It's all in the first time-step! ⏱️ Turns out the concepts needed for diversity are already present in the model - it simply doesn't use them! Check out our @wacv_official work - we added theoretical evidence 👇

Why do distilled diffusion models generate similar-looking images? 🤔 Our Diffusion Target (DT) visualization reveals the secret to diversity: it is the very first time-step! And there is a simple, training-free way to make them more diverse! Here is how: 🧵👇

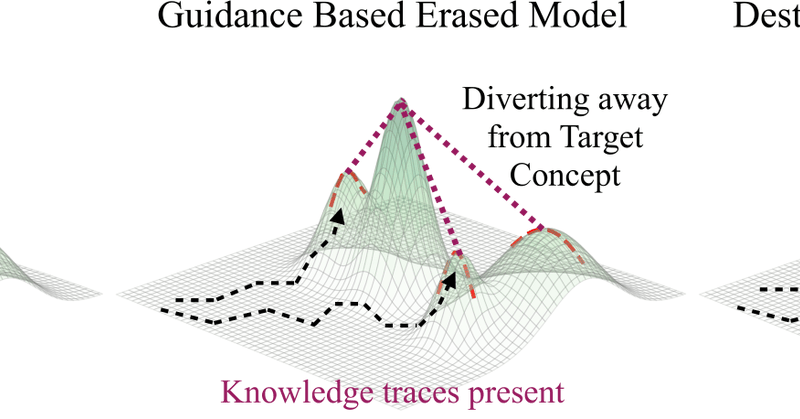

We tested several unlearning methods and found that none of them really erases knowledge from the model - they simply hide it! 🧐 What does this mean? We must tread carefully with unlearning research on diffusion models 🚨 Here is what we learned 🧵👇 (led by @kevinlu4588)

Excited to share our paper “When Are Concepts Erased from Diffusion Models?” at @NeurIPSConf! We introduce two conceptual models for erasure mechanisms in diffusion models, and a suite of probes to recover supposedly forgotten concepts. Project website:

work done with @NickyDCFP, @rohitgandikota, @mnphamx1, @davidbau, @chegday and @CohNiv

@Northeastern @nyuniversity Code: https://t.co/LGmltXapLB Paper:

arxiv.org

In concept erasure, a model is modified to selectively prevent it from generating a target concept. Despite the rapid development of new methods, it remains unclear how thoroughly these approaches...

Probes include:

1. Inpainting & diffusion completion
2. Diffusion trajectory expansion via custom noise scheduler
3. Latent classifier guidance (on par with Textual Inversion)
4. Dynamic concept tracing

Erasure methods can fail with the original prompt + light conditioning!

Excited to share our paper “When Are Concepts Erased from Diffusion Models?” at @NeurIPSConf! We introduce two conceptual models for erasure mechanisms in diffusion models, and a suite of probes to recover supposedly forgotten concepts. Project website:

unerasing.baulab.info

Investigating whether concept erasure in diffusion models truly removes knowledge or merely avoids it, with a comprehensive suite of probing techniques.

How can a language model find the veggies in a menu? New pre-print where we investigate the internal mechanisms of LLMs when filtering a list of options. Spoiler: turns out LLMs use strategies surprisingly similar to functional programming (think "filter" from Python)! 🧵
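For readers who have not used it, here is a minimal Python sketch of the kind of `filter` operation the tweet alludes to. The menu, its category labels, and the `is_veggie` predicate are made up for illustration; this shows only the programming analogy, not the paper's experiments or the model's internals.

```python
# Hypothetical menu; the items and category labels are illustrative only.
menu = [
    ("carrot", "vegetable"),
    ("salmon", "fish"),
    ("spinach", "vegetable"),
    ("brownie", "dessert"),
]


def is_veggie(item):
    """Predicate: True when the menu entry is labelled a vegetable."""
    _name, category = item
    return category == "vegetable"


# filter() applies the same predicate to every element and keeps the matches,
# regardless of what the list contains or how long it is.
veggies = [name for name, _category in filter(is_veggie, menu)]
print(veggies)
```

The point of the analogy is the separation of concerns: the predicate is defined once and can be reused on any list.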

[📄] Are LLMs mindless token-shifters, or do they build meaningful representations of language? We study how LLMs copy text in-context, and physically separate out two types of induction heads: token heads, which copy literal tokens, and concept heads, which copy word meanings.
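As a loose analogy for the two kinds of copying described above, here is a toy Python sketch. It is not how attention heads work internally: `token_copy`, `concept_copy`, and the tiny `MEANING` and `FRENCH` lexicons are hypothetical names invented for this illustration.

```python
# Toy analogy only: real induction heads are attention heads, not Python functions.

def token_copy(context, current):
    """Literal copy: find the most recent earlier occurrence of `current`
    and return the token that followed it (None if there is none)."""
    for i in range(len(context) - 2, -1, -1):
        if context[i] == current:
            return context[i + 1]
    return None


# Hypothetical lexicon mapping surface words to a shared meaning label.
MEANING = {"dog": "DOG", "chien": "DOG", "cat": "CAT", "chat": "CAT"}
FRENCH = {"DOG": "chien", "CAT": "chat"}


def concept_copy(word, target_vocab):
    """Meaning-level copy: carry over what `word` means and re-express it
    in the target vocabulary, so the surface form may change."""
    return target_vocab[MEANING[word]]


tokens = ["the", "cat", "sat", "near", "the"]
print(token_copy(tokens[:-1], tokens[-1]))  # literal copy of the token after "the"
print(concept_copy("dog", FRENCH))          # same meaning, different surface form
```

The first function matches on exact token identity, while the second matches on meaning, which is the distinction the tweet draws between token heads and concept heads.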

Curious what your model really knows? Try probing it 🕵️‍♀️ Code: https://t.co/bV8fJBKkA0 Project: https://t.co/udVQIqYXhZ Paper: https://t.co/MEYtdQdQqu Work done with Nicky Kriplani, @rohitgandikota, @mnphamx1, @davidbau, @chegday and @CohNiv

@Northeastern @nyuniversity

We also find a deep trade-off: Robust methods (destruction-based🧨) tend to distort unrelated generations. Understanding this helps researchers choose or design erasure methods that fit their needs.

Surprisingly, even “strong” erasure methods that beat adversarial prompts… can still regenerate the erased concept via inpainting or noise-based probes 😳 Our findings show: no single probe is enough. True erasure requires a holistic evaluation.

Do these robust unlearning methods still leave knowledge traces behind? We propose a suite of probing methods to test this:

🎯 Adversarial attacks
🖼️ Inpainting
🌫️ Diffusion trajectory expansion
📉 Dynamic concept tracing

Each reveals different residual traces 👀

Similarly, building on the finding that erased knowledge can be resurfaced through optimization, @koushik_srivats et al. proposed STEREO, which exhaustively searches for and removes knowledge traces inside an erased diffusion model. https://t.co/mNxc3jZn4Q

⭐ CVPR 2025 Highlight ⭐ Excited to share that our work has been selected as a highlight paper at CVPR 2025, placing it in the top 13.5% of accepted papers! 🏅 Details in 🧵

@mnphamx1 et al. found that erased concepts can be resurfaced by textual inversion (@RinonGal et al.), which optimizes the text input using a few images of the erased concept. They proposed task vectors as a solution for robust unlearning. https://t.co/rS3JHr7ajE

Excited to share our latest work with @kellym11111 and @chegday: “Circumventing Concept Erasure Methods For Text-to-Image Generative Models” Paper: https://t.co/FKEAQQlRRN Project website: https://t.co/sPGPkG28kY Thread 🧵

Our work evaluates several concept erasure methods. ESD is a self-guided erasure method that uses the model's own knowledge of a concept to ablate it. By design, ESD directs the model to steer away from the concept’s representations. https://t.co/imCf3x7GRU

Erasing Concepts from Diffusion Models @Gradio demo is out on @huggingface demo: https://t.co/sYJ0O21mN8
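To illustrate what "steering away" can look like numerically, here is a minimal sketch of a negatively guided training target of the kind described in the ESD paper, where the frozen model's conditional and unconditional noise predictions are combined. The plain-list "noise predictions", the function name `esd_target`, and the default `eta` are stand-ins chosen for this example, not the authors' implementation.

```python
def esd_target(eps_uncond, eps_cond, eta=1.0):
    """Return a target that moves away from the concept direction.

    eps_uncond: frozen model's unconditional noise prediction (list of floats)
    eps_cond:   frozen model's prediction conditioned on the concept
    eta:        hypothetical guidance strength; larger pushes further away
    """
    # (c - u) is the direction the concept pulls the prediction in;
    # subtracting it steers the target the opposite way.
    return [u - eta * (c - u) for u, c in zip(eps_uncond, eps_cond)]


# Stand-in values; a real model would produce tensors shaped like the latent.
eps_uncond = [0.0, 1.0, -1.0]
eps_cond = [0.5, 1.0, -2.0]
target = esd_target(eps_uncond, eps_cond, eta=1.0)
print(target)
```

With `eta=0` the target reduces to the unconditional prediction, so the guidance strength controls how strongly the edited model is pushed away from the concept.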

When we "erase" a concept from a diffusion model, is that knowledge truly gone? 🤔 We investigated, and the answer is often 'no'! Using simple probing techniques, the knowledge traces of the erased concept can be easily resurfaced 🔍 Here is what we learned 🧵👇
