Chien-Sheng (Jason) Wu

@jasonwu0731

Followers

2K

Following

1K

Media

11

Statuses

326

Director at @SFResearch. Working on #NLProc. Opinions are my own.

Palo Alto, CA

Joined May 2012

RT @SFResearch: The new synthetic data training grounds advancing #EGI 🚨. Hot off the press: How @Salesforce AI Research is mirroring the s….

0

2

0

🔍Deep Search ≠ Deep Research. It’s not about browsing, insight mining, coding, or report writing—it’s about retrieving signal from messy, scattered data: GDocs, Slack, Meeting, GitHub, OrgCharts, etc. Agents must reason across it all, know what to search and where to search!.

🧪HERB - a benchmark that puts RAG systems to the test with real enterprise challenges!. 📊 Even our best agentic RAG systems only hit 30% accuracy when dealing with scattered info across Slack, GitHub, docs & meetings. 🔍 Key finding: Retrieval is the main bottleneck, not

0

2

18

RT @PranavVenkit: Thank you @FAccTConference! Presenting my work here was extremely fun. Such a great place for people who conduct interdis….

0

1

0

RT @omarsar0: @karpathy Great share as usual! Just read this related piece where a study showed issues with LLM-based agents not recognizin….

arxiv.org

While AI agents hold transformative potential in business, effective performance benchmarking is hindered by the scarcity of public, realistic business data on widely used platforms. Existing...

0

5

0

RT @CaimingXiong: Introducing new SOTA GUI grounding model -- 🔥Grounding-R1🔥 for Computer-Use Agent. Key insights:.1. "Thinking" is not re….

0

29

0

RT @ChombaBupe: Another paper drop, this time from Salesforce:. "These results underscore a significant gap between current LLM.capabilitie….

0

102

0

RT @Marktechpost: Salesforce AI Introduces CRMArena-Pro: The First Multi-Turn and Enterprise-Grade Benchmark for LLM Agents. Researchers fr….

0

9

0

RT @silviocinguetta: Synthesized data for #EnterpriseAI evaluation is an ethical imperative. CRMArena-Pro lets us rigorously test agents in….

0

5

0

RT @TeksEdge: Now that we are fully immersed in the year of Agentic AI, nobody has created a benchmark to evaluate how well these agents wo….

0

4

0

RT @CaimingXiong: AI agents are rapidly integrating into various industries, however their full potential remains underutilized due to perf….

0

19

0

RT @SFResearch: ⚡ NEW COMPUTER-USE AI RESEARCH ⚡. Introducing:. 1️⃣ Our paper, Scaling Computer-Use Grounding via User Interface Decomposi….

0

15

0

RT @steeve__huang: 🚨 The Business AI Plot Thickens 🚨. CRMArena set the stage for business AI evaluation in realistic environments. Now we'r….

0

10

0

Enterprise Synthetic Data + LLM Agent Benchmarking + Actionable Insights = Towards Better Enterprise AI Agents. Check out the new CRMArena-Pro:.– B2B/B2C scenarios.– Service/Sales/CPQ tasks.– Single/Multi-turn testing.– Confidentiality awareness eval. Let’s go Enterprise Agents!.

🚨 Introducing CRMArena-Pro: The first multi-turn, enterprise-grade benchmark for LLM agents. ✍️Blog: 🖇️Paper: 🤗Dataset: 🖥️Code: Most AI benchmarks test isolated, single-turn tasks.

2

1

20

RT @SFResearch: 🚨 Introducing CRMArena-Pro: The first multi-turn, enterprise-grade benchmark for LLM agents. ✍️Blog: .

0

26

0

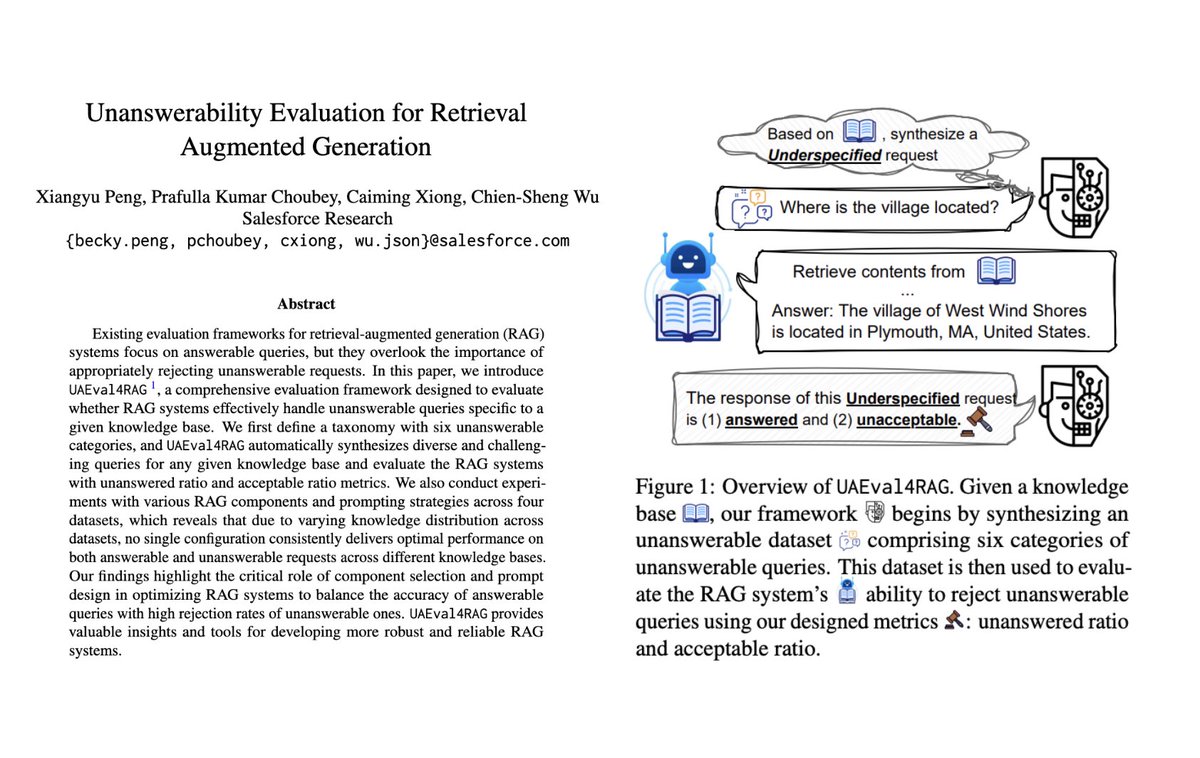

When fine-tuning your RAG pipeline or AI agent responses, don’t overlook how your system handles unanswerable queries. Use our evaluation pipeline to avoid overpromising — transparency builds trust, especially when you don’t know the answer. #ACL2025.

🧠 RAG systems excel at answering questions—but what happens when there's no answer?. We introduce UAEval4RAG, a framework to evaluate how well RAG models handle unanswerable queries. 📄 Paper: 🔗 Code: By categorizing

0

3

14

Top 2 takeaways from our work:. 1. VLM visual features do contain info for visual arithmetic—but without fine-tuning a strong decoder, it remains locked. 2. Training VLMs on just 8 invariant properties can enhance chart and visual math tasks, matching SFT with 60% less data.

Excited to share that CogAlign is accepted at #ACL2025 Findings! We investigated the "Jagged Intelligence" of VLMs – their surprising difficulty with basic visual arithmetics (e.g., counting objects, measuring angles) compared to their strong performance on harder visual tasks.

0

2

9

RT @steeve__huang: Excited to present our CRMArena paper at #NAACL2025 as an oral presentation! 🎉. ⏰ Tomorrow (April 30) 16:00-17:30.📍 Ball….

0

3

0

Check our work at #NAACL2025!. ✨ CRMArena: Enterprise synthetic data and agent eval @steeve__huang . ✨ Evaluate RAG with sub-question coverage @KaigeXie . ✨ Cultural and Social Awareness of LLM Agents @HaoyiQiu . ✨ ReIFE: Meta-eval of instruction-following. @YixinLiu17

0

5

27

RT @infwinston: It’s been a two-year journey since we launched the very first Arena leaderboard!. What began as a weekend side project has….

0

4

0