Mitko Vasilev

@iotcoi

Followers: 2K · Following: 11K · Media: 843 · Statuses: 6K

Make sure you own your AI. AI in the cloud is not aligned with you; it's aligned with the company that owns it.

Joined August 2013

M2 is going open source! Hope you all like it! lmk if you have any feedback! It's also free to use now on the MiniMax Platform, so you can use it with your Claude Code or any of your own apps. BTW, we recommend the Anthropic endpoint to access M2.

We’re open-sourcing MiniMax M2: Agent & Code Native, at 8% of Claude Sonnet’s price and ~2x faster ⚡ Globally FREE for a limited time via MiniMax Agent & API - Advanced Coding Capability: Engineered for end-to-end developer workflows. Strong capability across a wide range of applications

I’m just reading that the Ryzen AI 395 is supposed to be 30% slower than the DGX Spark at LLM inference… and only has 96GB of GPU RAM… good thing I didn’t RTFM upfront, so I made the AMD faster, with 128GB of unified RAM 🫡 The Z2 mini G1a can run Qwen3 Coder 30B BF16 at 26.8 tok/sec in ~60GB of GPU RAM
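
The ~60GB figure lines up with simple weight-memory arithmetic: BF16 stores each parameter in 2 bytes, so ~30B parameters need roughly 60GB. A minimal sketch, assuming weights dominate the footprint and ignoring KV cache, activations, and runtime overhead (`weight_memory_gb` is a hypothetical helper, not from any library):

```python
def weight_memory_gb(params_billion: float, bits_per_param: int) -> float:
    """Approximate weight storage in GB (1 GB = 1e9 bytes).

    Assumes every parameter uses the same bit width; ignores KV cache,
    activations and runtime overhead.
    """
    bytes_per_param = bits_per_param / 8
    # (params_billion * 1e9 params) * bytes_per_param / 1e9 bytes per GB
    return params_billion * bytes_per_param


if __name__ == "__main__":
    # Qwen3 Coder 30B at BF16 (16 bits per parameter)
    print(f"{weight_memory_gb(30, 16):.1f} GB")
```

Real footprints run higher once the KV cache for long contexts is added on top of the weights.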

Two quick updates: GLM-4.6-Air is still in training. We’re putting in extra effort to make it more solid and reliable before release. Rapid growth in GLM Coding Plan over the past weeks has increased inference demand. Additional compute is now deployed to deliver faster and

Hocus Pocus, and 128GB of GPU VRAM appeared on my AMD Ryzen AI 395.
• Qwen3 Coder 30B at BF16 with a 1-million-token context window, running at 24.4 tokens/sec
• Codebase semantic-search embedding model
• Guardrail, so it’s all legit
Total GPU footprint: 115GB, with 13GB still left for Linux

Excited to be (finally) heading to the PyTorch Conference! I’ll be giving a talk tomorrow at 11:00 AM on “The LLM Landscape 2025”, where I’ll discuss the key components behind this year’s most prominent open-weight LLMs, and highlight a few architectural developments that go

Say hello to my little friends! I just unboxed this trio of HP Z2 G1a! Three is always better than one!
• 3x AMD Ryzen AI Max+ Pro 395
• 384GB RAM
• 24TB of RAID storage
ROCm 7.0.2 tonight; llama.cpp, vLLM and Aibrix

after the OCR blog we dropped yesterday, people are still asking me for the "best" model under the post 😅 we wrote the blog because there's no single best OCR model 🤠 but there is a best model for your use case! so please read the blog 🙇🏻♀️ let us know what you think!

I see all the Chinese labs turning TL;DR into TL;DRGB.
Problem: 1M text tokens == 1M opportunities for your GPU to file a workers' comp claim.
Solution: don’t feed the model War & Peace; feed it the movie poster.
This is Glyph voodoo:
• 10k words → 3 PNGs ≈ 3k visual tokens
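
The Glyph numbers imply a rough compression ratio. A back-of-the-envelope sketch, assuming ~1.3 text tokens per English word (an assumption; the real figure depends on the tokenizer) and taking 10k words → ~3k visual tokens at face value. The helper names are hypothetical:

```python
# Assumption: typical English BPE ratio; varies by tokenizer and text.
TOKENS_PER_WORD = 1.3


def compression_ratio(words: int, visual_tokens: int,
                      tokens_per_word: float = TOKENS_PER_WORD) -> float:
    """Text tokens the passage would cost, divided by the visual tokens it costs as images."""
    text_tokens = words * tokens_per_word
    return text_tokens / visual_tokens


def visual_tokens_for(text_tokens: int, ratio: float) -> int:
    """Visual tokens needed to cover a text-token budget at a given compression ratio."""
    return round(text_tokens / ratio)


ratio = compression_ratio(10_000, 3_000)
print(f"compression ≈ {ratio:.2f}x")
print(f"1M text tokens ≈ {visual_tokens_for(1_000_000, ratio):,} visual tokens")
```

Under that assumed ratio, a 1M-token text budget shrinks to roughly 230k visual tokens; a different tokens-per-word figure moves the result proportionally.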

Hi! ✌️ Personally, I’m not a fan of vibes-based trust. First VERIFY, then trust, is my motto. Which is why Signal is open source. We want everyone to be able to inspect our code, and our encryption protocol, and see for themselves the lengths we go to to protect your comms and

Cloud marketing convinced a generation of programmers that the scariest thing in the world was to connect their own server to the internet. All so they could be sold and resold the same centralized dependencies at huge markups. https://t.co/2JlPY9Pcz0

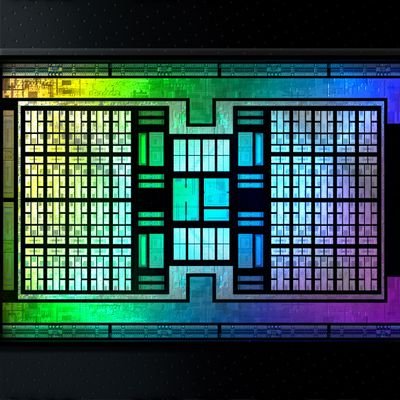

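AMD Strix Halo vs. Nvidia DGX Spark:

| Spec | AMD Strix Halo | Nvidia DGX Spark |
|---|---|---|
| Dense AI compute (BF16) | 110 TOPS | 125 TOPS |
| Memory | 128GB | 128GB |
| Memory bandwidth | 256GB/s | 273GB/s |
| Storage | 4TB NVMe | 4TB NVMe |
| Networking | 10GbE LAN | 10GbE LAN |
| CPU architecture | x86 | ARM |
| Cache | 64MB | 24MB |
| Price | $2,300 | $4,000 |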
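
Normalizing the spec comparison by price makes the trade-off explicit. A small sketch using only the compute, bandwidth, and price figures listed above, taken at face value; the dictionary layout and function names are illustrative:

```python
# Figures copied from the AMD Strix Halo vs. Nvidia DGX Spark comparison.
SPECS = {
    "AMD Strix Halo": {"bf16_tops": 110, "bandwidth_gb_s": 256, "price_usd": 2300},
    "Nvidia DGX Spark": {"bf16_tops": 125, "bandwidth_gb_s": 273, "price_usd": 4000},
}


def dollars_per_tops(spec: dict) -> float:
    """Purchase price divided by dense BF16 compute."""
    return spec["price_usd"] / spec["bf16_tops"]


def bandwidth_per_kusd(spec: dict) -> float:
    """Memory bandwidth (GB/s) bought per $1,000 of purchase price."""
    return spec["bandwidth_gb_s"] / (spec["price_usd"] / 1000)


for name, spec in SPECS.items():
    print(f"{name}: ${dollars_per_tops(spec):.2f}/TOPS, "
          f"{bandwidth_per_kusd(spec):.1f} GB/s per $1k")
```

On these numbers the AMD box costs roughly $21 per TOPS versus $32 for the DGX Spark, while the Spark keeps a small lead in absolute compute and bandwidth.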

feels so good to see my name next to TheBloke! 🤗 he was the glue that kept the local ai community together, until we were all ready to contribute back! thanks mate for everything. (i am also happy that you are doing great and you are happy)

Today, we unveiled the upgraded ERNIE assistant in Baidu Search. It now supports multimodal generation across 8 formats—from images and video to music and podcasts—while also providing one-click access to multiple tools for solving problems in daily life, education, and work.

I wrote an experimental Metal backend for Triton (Triton IR -> MSL). It works, but it’s currently slower than torch. idk what the best way is to pass buffers to Triton. Simple kernels work.

🚀 vLLM just hit 60K GitHub stars! 🎉 From a small research idea to powering LLM inference everywhere — across NVIDIA, AMD, Intel, Apple, TPUs, and more — vLLM now supports almost all major text-generation models and native RL pipelines like TRL, Unsloth, Verl, and OpenRLHF.

Deep RL is sometimes mysterious...🧐 @YogeshTrip7354 and I found 2 bugs in the implementation of BBF, and... SURPRISE, the corrected version performs the same as the faulty version! It is even worse on some games🤔 Details on the 2 bugs in🧵👇

Some data to help decide on the right precision for Qwen3 4B (Instruct 2507). I ran the full MMLU Pro eval, plus some efficiency benchmarks, with the model at every precision from 4-bit to bf16. TLDR: 6-bit is a very decent option, at a < 1% gap in quality to the full
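
Why lower precision costs quality can be seen with a toy round-to-nearest quantizer: each extra bit roughly halves the step size, so reconstruction error shrinks. This is a minimal sketch of symmetric per-tensor quantization, not the scheme used in the benchmark above; `SAMPLE_WEIGHTS` is made-up data and the function names are hypothetical:

```python
def quantize_dequantize(weights: list[float], bits: int) -> list[float]:
    """Symmetric per-tensor quantization to `bits` bits, then back to float.

    Uses Python's round() (round-to-nearest, ties to even).
    """
    qmax = 2 ** (bits - 1) - 1            # e.g. 7 for 4-bit, 31 for 6-bit
    scale = max(abs(w) for w in weights) / qmax
    if scale == 0:                        # all-zero tensor: nothing to quantize
        return list(weights)
    return [round(w / scale) * scale for w in weights]


def max_abs_error(weights: list[float], bits: int) -> float:
    """Largest absolute difference between original and reconstructed weights."""
    deq = quantize_dequantize(weights, bits)
    return max(abs(w - d) for w, d in zip(weights, deq))


SAMPLE_WEIGHTS = [0.12, -0.87, 0.45, 0.03, -0.29, 0.66, -0.51, 0.94]  # hypothetical

for bits in (4, 6, 8):
    print(f"{bits}-bit max error: {max_abs_error(SAMPLE_WEIGHTS, bits):.5f}")
```

Real schemes typically use per-group scales rather than one per tensor, which keeps the error lower at a given bit width.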

Friday evening. KAT-Dev-72B-Exp is spinning in Aibrix K8s. The GPUs in the Z8 are fired up. It's a LAN party for one. After 6 months on a diet of MoEs, I'd forgotten the main-course feeling of a dense 72B model.
