RJ Honicky

@honicky

Followers: 101

Following: 43

Media: 8

Statuses: 75

Telecom, AI, technology for developing regions and percussion. "Minds that seek revenge destroy states, while those that seek reconciliation build nations."

Mostly SF Bay Area

Joined April 2009

I will talk about labeling 100M cells using a customized version of GenePT and scGPT. Register here:

linkedin.com

#GenerativeAI is transforming the way we analyze data — but what happens when it meets the complexity and scale of #MultiOmics? Join us for a new webinar of our #OmicsConnect series to hear from Dr....

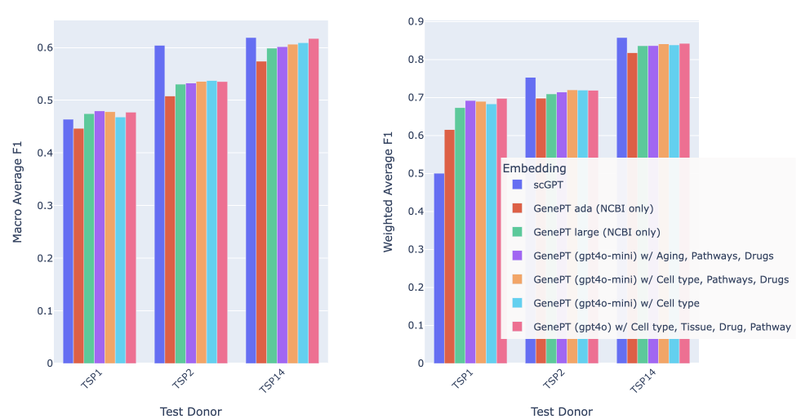

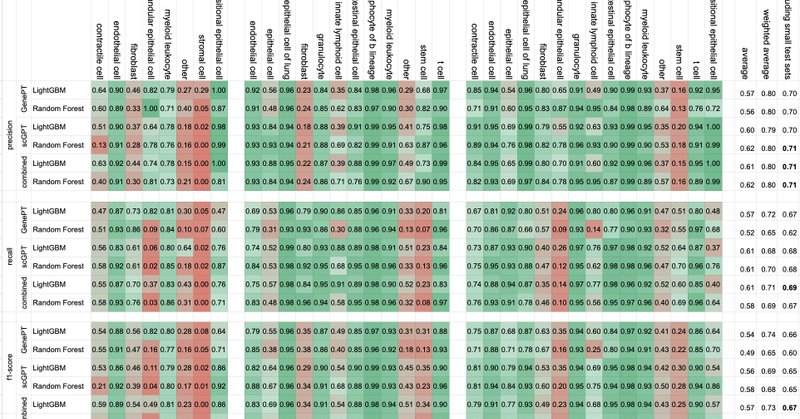

I promised to explain how you can build your very own custom GenePT embeddings. Here's another Lab Note. Enjoy!

learning-exhaust.hashnode.dev

Better Gene Embeddings Through Prompt Engineering (Or, at Least, We Tried)

This is a quick "learning exhaust" post about a small set of experiments I ran to prep for a larger collaborative series of posts on the exciting things we can accomplish on real problems using existing technologies.

learning-exhaust.hashnode.dev

In the spirit of “Learning in Public,” and “learning exhaust,” I’m going to start adding “Lab Notes” blog posts that chronicle little discoveries or failures that I have along the way to a larger...

Data Products are Different.

learning-exhaust.hashnode.dev

Why you have to manage data products differently than software and hardware.

Data products often scale cost like hardware, iterate like software, and scale performance like data products. @swyx @eugeneyan

Oh man, writing up the Cerebras paper on Weight Streaming was a rabbit hole filled with parallel algorithms. @swyx @picocreator @eugeneyan @SarahChieng @latentspacepod

I've been trying to post a bit more frequently and in smaller bites, so I'm going to try to pick one interesting thing I've learned from each paper I read and write a quick post about it. Here's my first one:

learning-exhaust.hashnode.dev

Modern training techniques for embedding models mean that we should probably include a prompt or fine-tune for a specific type of task.

RT @latentspacepod: we are going thru OpenAI’s sCM paper on the LS Paper Club today with @honicky!! (search our youtube or discord to join…

RT @latentspacepod: thanks to @honicky and @vibhuuuus for another great Paper Club on ReFT: Representation Fine Tuning by @aryaman2020 et a…

I got a lot of positive feedback when I mentioned this at the Latent Space Paper Club this week, so I thought I'd write it up. Is it the model or the data that's low rank? @swyx @latentspacepod @vibhuuuus

learning-exhaust.hashnode.dev

Thoughts on LoRA Quantization Loss Recovery

Some ideas and experiments on quantization. Oooh, I'm so excited!

learning-exhaust.hashnode.dev

or post-post-training-quantization-training :)
