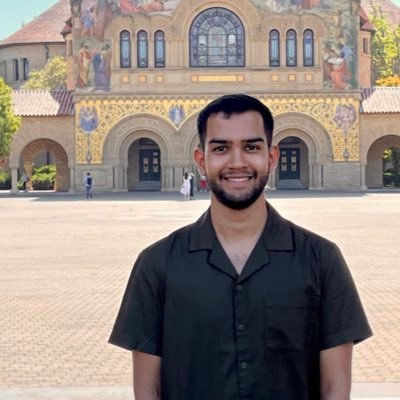

Aryaman Arora

@aryaman2020

7K Followers · 31K Following · 310 Media · 13K Statuses

member of technical staff @stanfordnlp

🌲

Joined December 2018

I'll be interning at @TransluceAI for the summer doing interp 🫡 will be staying in SF.

it's also true at the same time that interp researchers should focus on ways to add value that are complementary to / not easily replaced by baselines like prompting

@sohamdaga22 why yes? please give me a concrete example where mech interp has, beyond a reasonable doubt, informed how we design, train, or understand these models, that simple input-output A/B tests (i.e., asking the model questions) have been insufficient for?

people rightly ask: where is the practical downstream value that mech interp has created? it turns out that if you prioritise rigour over immediate scaling, there are plenty of good examples 🙂

@DimitrisPapail @sohamdaga22 I feel that these papers (from my group) are examples of what you are nominally asking for: 1. 2. 3. 4. 5. 6. 7.

RT @ChrisGPotts: @DimitrisPapail @sohamdaga22 I feel that these papers (from my group) are examples of what you are nominally asking for:…

RT @DimitrisPapail: @sohamdaga22 why yes? please give me a concrete example where mech interpr has, beyond a reasonable doubt, informed how…

RT @neil_rathi: new paper! robust and general "soft" preferences are a hallmark of human language production. we show that these emerge fr…

RT @shi_weiyan: 💥New Paper💥 #LLMs encode harmfulness and refusal separately! 1️⃣ We found a harmfulness direction. 2️⃣ The model internally k…

if you think data cleaning is beneath you then ngmi.

Academia must be the only industry where extremely high-skilled PhD students spend much of their time doing low-value work (like data cleaning). A first-year management consultant outsources this immediately. Imagine the productivity gains if PhDs could focus on thinking.
