Explore tweets tagged as #logprobs

Excited to share a new preprint w/ @Michael_Lepori & @meanwhileina! A dominant approach in AI/cogsci uses *outputs* from AI models (eg logprobs) to predict human behavior. But how does model *processing* (across a forward pass) relate to human real-time processing? 👇 (1/12)

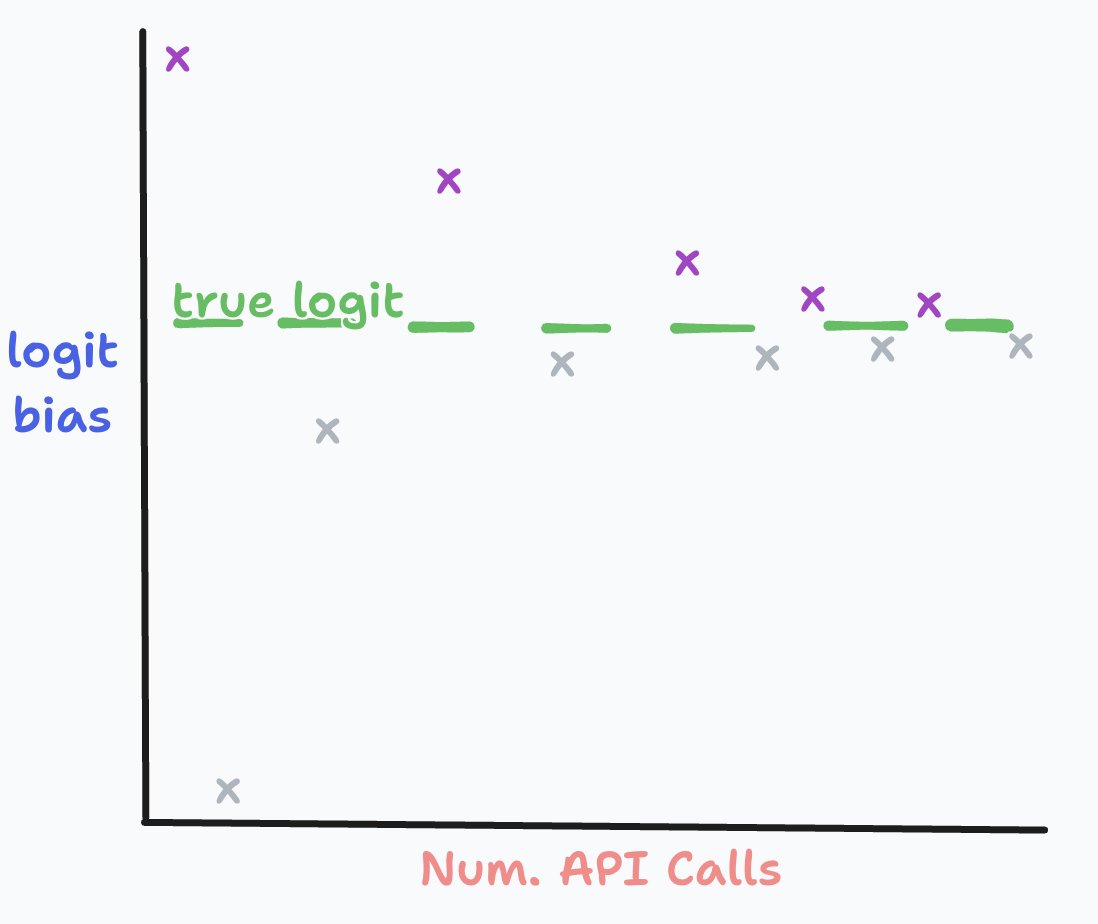

at the @AnthropicAI hackathon this weekend, built a model which can hide (and decode) hidden messages in its logprobs. output for "tell me a story"

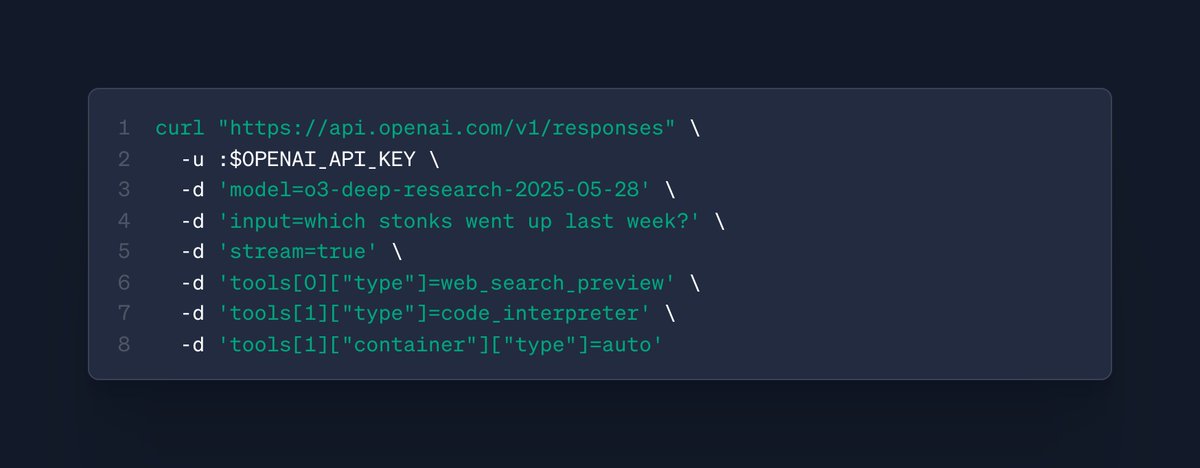

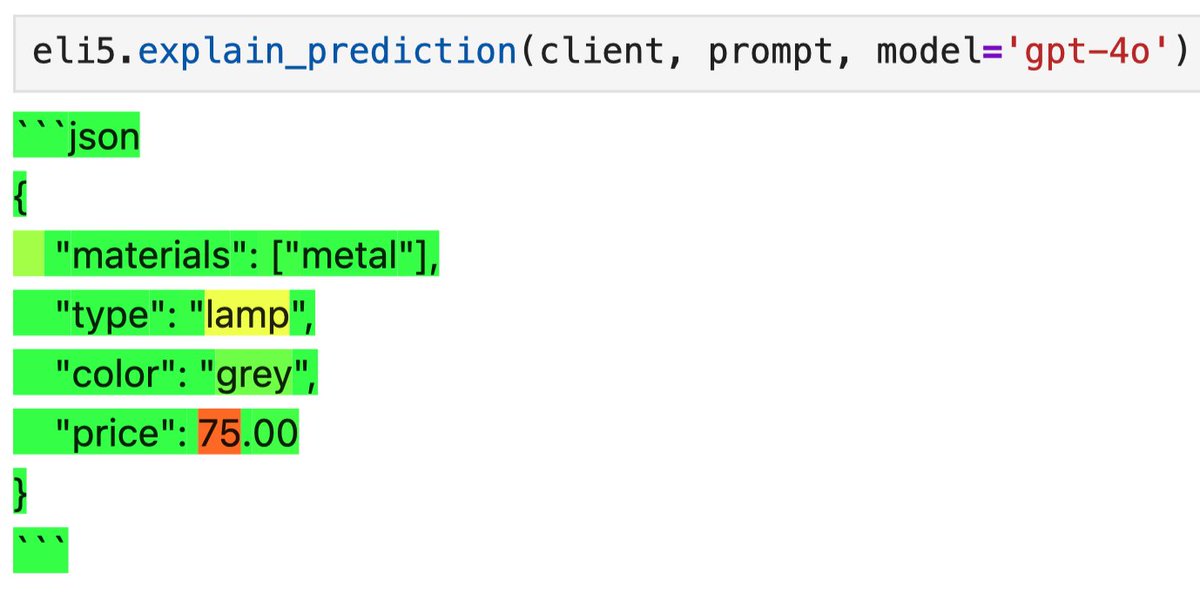

Influencing LLM Output using logprobs and Token Distribution #LLMOutput #logprobs #TokenDistribution #AIInfluence #SpamFilter

🆕 Why you should write your own LLM benchmarks
w/ Nicholas Carlini of @GoogleDeepMind

Covering his greatest hits:
- How I Use AI
- My benchmark for large language models
- Extracting Training Data from Large Language Models (RIP @openai logprobs)

Full episode below!

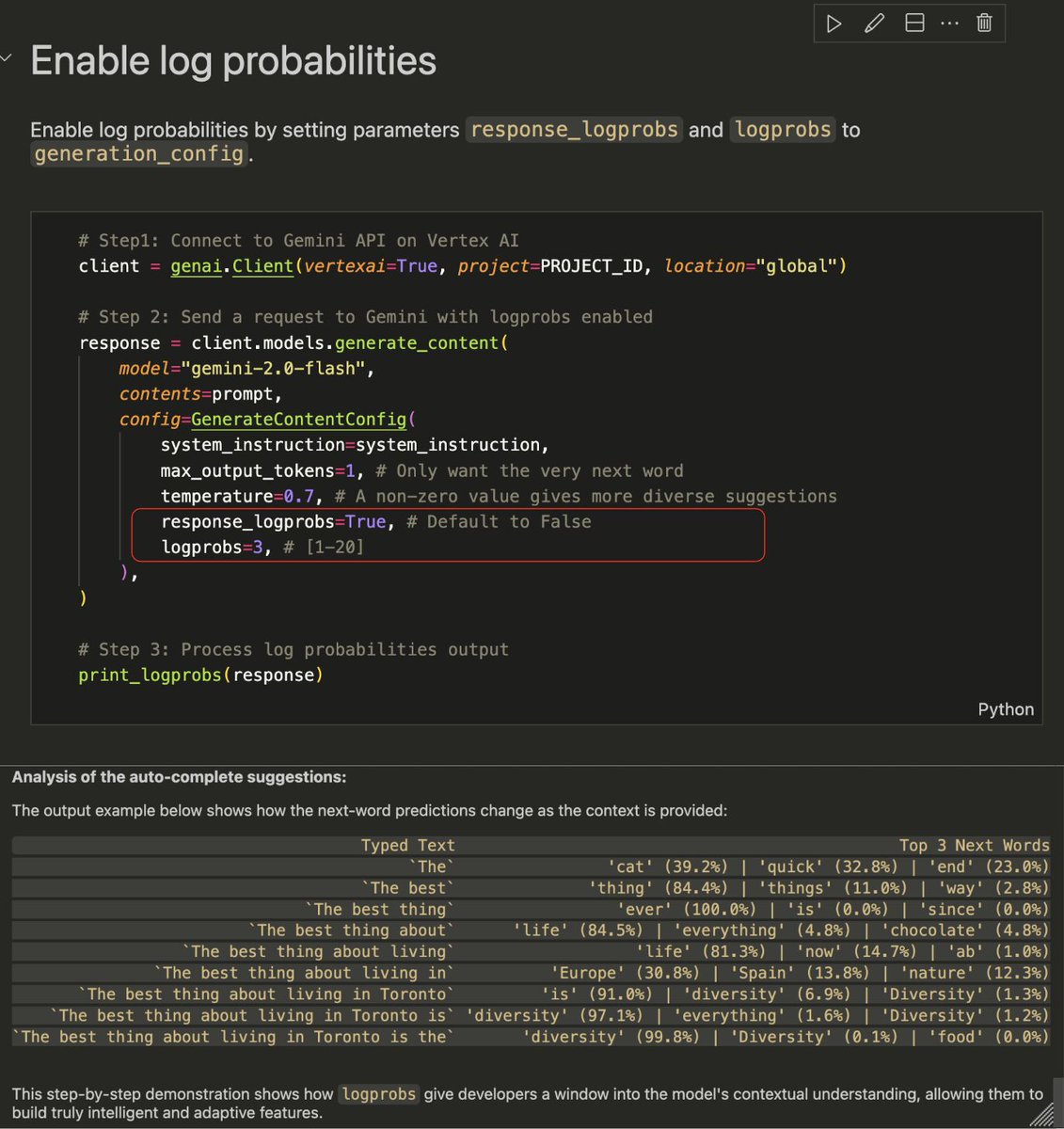

5️⃣ new things @LiteLLM
⚡️ Gemini - Return logprobs in response
🧹 UI - remove default key creation on user signup
💪 UI - allow team members to view all Team models
⏳ Databricks - claude thinking param support
⏳ Databricks - claude response_format param support
