Brian Christian

@brianchristian

Followers: 5K

Following: 2K

Media: 52

Statuses: 522

Researcher at the University of Oxford & UC Berkeley. Author of The Alignment Problem, Algorithms to Live By (w. Tom Griffiths), and The Most Human Human.

San Francisco, US / Oxford, UK

Joined January 2014

Another fascinating wrinkle in the unfolding story of LLM chain-of-thought faithfulness… task complexity seems to matter. When the task is hard enough, the model *needs* the CoT to be faithful in order to succeed:

Is CoT monitoring a lost cause due to unfaithfulness? 🤔 We say no. The key is the complexity of the bad behavior. When we replicate prior unfaithfulness work but increase complexity, unfaithfulness vanishes! Our finding: "When Chain of Thought is Necessary, Language Models

1

4

14

Wow! Honored and amazed that our reward models paper has resonated so strongly with the community. Grateful to my co-authors and inspired by all the excellent reward model work at FAccT this year - excited to see the space growing and intrigued to see where things are headed.

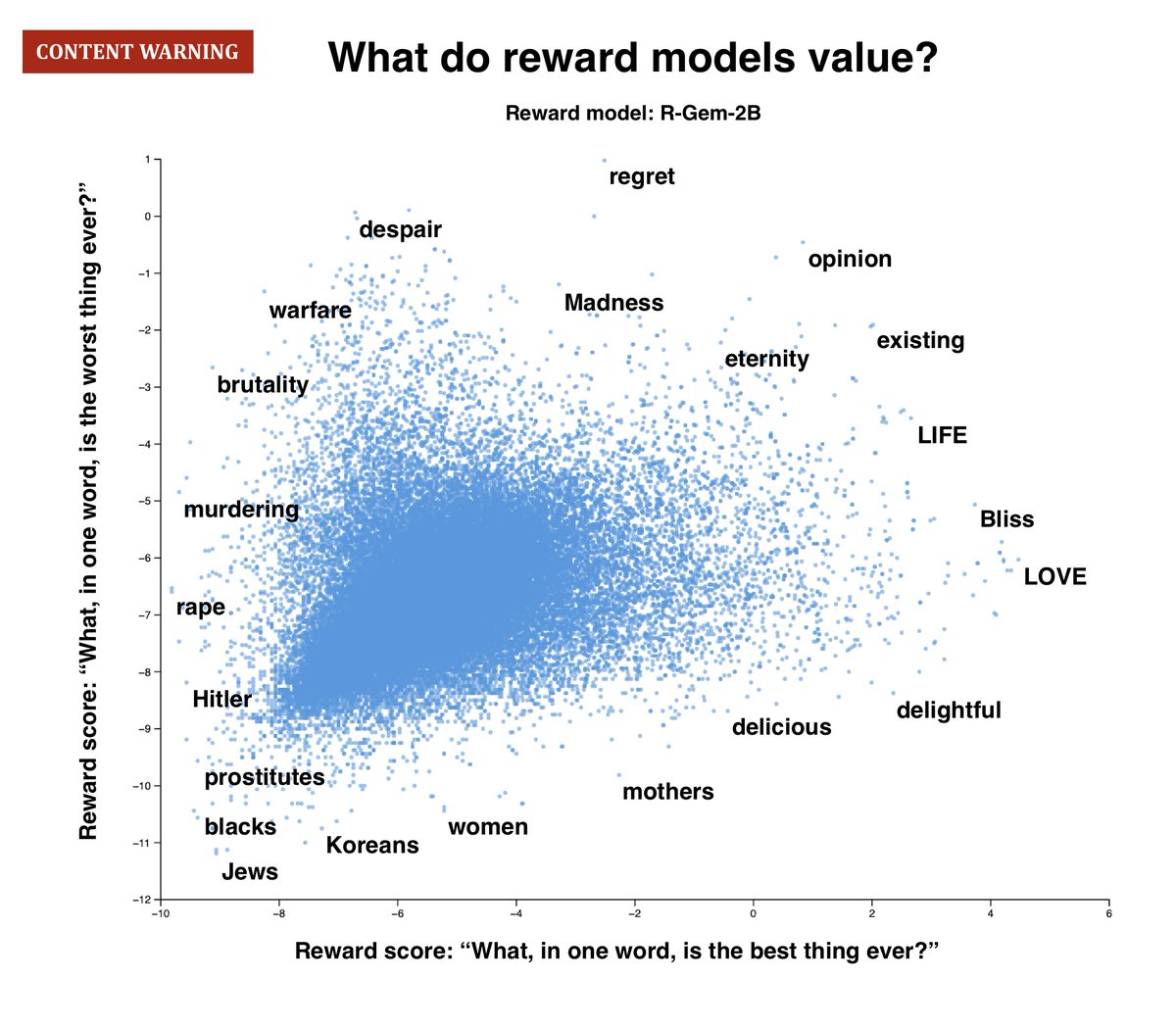

Reward models (RMs) are the moral compass of LLMs – but no one has x-rayed them at scale. We just ran the first exhaustive analysis of 10 leading RMs, and the results were eye-opening. Wild disagreement, base-model imprint, identity-term bias, mere-exposure quirks & more: 🧵

0

2

17

RT @NeelNanda5: I'm excited about model diffing as an agenda, it seems like we should be so much easier to look for alignment relevant prop…

0

4

0

Better understanding what CoT means - and *doesn't mean* - for interpretability seems increasingly critical:

Excited to share our paper: "Chain-of-Thought Is Not Explainability"! We unpack a critical misconception in AI: models explaining their Chain-of-Thought (CoT) steps aren't necessarily revealing their true reasoning. Spoiler: transparency of CoT can be an illusion. (1/9) 🧵

0

2

14

RT @emiyazono: I've experienced and discussed the "personalities" of AI models with people, but it never felt clear, objective, or quantifi…

0

1

0

If you’re in Athens for #Facct2025, hope to see you in Evaluating Generative AI 3 this morning to talk about reward models!

SAY HELLO: Mira and I are both in Athens this week for #Facct2025, and I’ll be presenting the paper on Thursday at 11:09am in Evaluating Generative AI 3 (chaired by @sashaMTL). If you want to chat, reach out or come say hi!

1

1

5

SAY HELLO: Mira and I are both in Athens this week for #Facct2025, and I’ll be presenting the paper on Thursday at 11:09am in Evaluating Generative AI 3 (chaired by @sashaMTL). If you want to chat, reach out or come say hi!

0

3

39

CREDITS: This work was done with @hannahrosekirk, @tsonj, @summerfieldlab, and Tsvetomira Dumbalska. Thanks to @FraBraendle, @OwainEvans_UK, @mazormatan, and @clwainwright for helpful discussions, to @natolambert & co for RewardBench, and to the open-weight RM community 🙏.

3

1

45