LiteLLM (YC W23)

@LiteLLM

Followers

4K

Following

1K

Media

73

Statuses

958

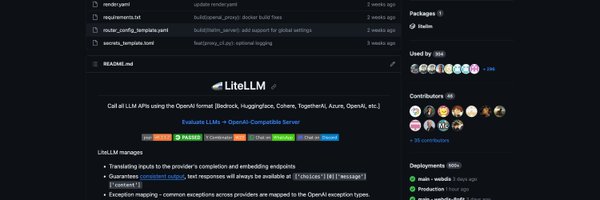

Call every LLM API like it's OpenAI 👉 https://t.co/UV2PpapQo7

try it today ➜

Joined December 2022

@LiteLLM v1.77.4-nightly brings support for the new Anthropic web fetch tool. The web fetch tool allows LLMs to retrieve full content from specified web pages and PDF documents. Get started here: https://t.co/jbefY7K8yB List of improvements here:

If you want to quickly and efficiently integrate an LLM into your "Internet Of Agents" hackathon project, @LiteLLM is your best friend. → Switch between any LLM provider with a single line of code → Experiment and deploy models without rewriting your functions → Keep code
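The "single line of code" claim above can be sketched roughly as follows. This is a minimal illustration, not the poster's code: the helper `build_chat_request` and the two model identifiers are hypothetical, and the commented-out `litellm.completion` call assumes the library is installed and provider API keys are configured.

```python
from typing import Any


def build_chat_request(model: str, prompt: str) -> dict[str, Any]:
    """Build OpenAI-style chat arguments; only `model` differs per provider."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }


# Hypothetical model identifiers in "provider/model" form (assumptions).
OPENAI_MODEL = "openai/gpt-4o"
ANTHROPIC_MODEL = "anthropic/claude-3-5-sonnet-20240620"

# Switching provider changes one argument; the messages stay identical.
request_a = build_chat_request(OPENAI_MODEL, "Summarize this repo.")
request_b = build_chat_request(ANTHROPIC_MODEL, "Summarize this repo.")

# With litellm installed and API keys set, either request could be sent as:
#   import litellm
#   response = litellm.completion(**request_a)
#   print(response.choices[0].message.content)
```

Keeping the request in a plain dictionary means the calling code does not change when the model string does.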

In startups, you are constantly migrating. From fast & simple to complex, custom and scalable. In the last 4 months we’ve migrated: 1. NextJS on Vercel -> React-Router on DigitalOcean 2. Custom agent code & @LiteLLM -> @pydantic AI 3. @digitalocean -> @awscloud 4.

I once again learned something new from @kweinmeister. @LiteLLM looks like a nice proxy in front of multiple LLMs in @googlecloud Vertex AI. Karl shows how you could invoke this directly, in Cline or Roo Code, and as a persistent background service.

Want to use models from Google's Vertex AI Model Garden with an OpenAI-compatible API? My new video shows how to set up @LiteLLM as a local proxy to do just that. Simplify your workflow and call models like Qwen, DeepSeek, and more through a unified interface. #AI #LLM
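As a rough sketch of what calling such a local proxy through an OpenAI-compatible API might look like: the base URL, port, model alias, and key below are all assumptions for illustration and are not taken from the video. The request is only constructed here, not sent.

```python
import json
import urllib.request

PROXY_BASE_URL = "http://localhost:4000"  # assumption: proxy listening locally
MODEL_ALIAS = "vertex-qwen"               # hypothetical alias defined in the proxy config
API_KEY = "sk-placeholder"                # hypothetical proxy key


def build_proxy_request(prompt: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request aimed at the local proxy."""
    body = json.dumps(
        {
            "model": MODEL_ALIAS,
            "messages": [{"role": "user", "content": prompt}],
        }
    ).encode("utf-8")
    return urllib.request.Request(
        url=f"{PROXY_BASE_URL}/v1/chat/completions",
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_KEY}",
        },
        method="POST",
    )


req = build_proxy_request("Hello")

# To send it (requires a running proxy):
#   with urllib.request.urlopen(req) as resp:
#       print(json.load(resp)["choices"][0]["message"]["content"])
```

Because the endpoint follows the OpenAI chat completions shape, any OpenAI-compatible client could be pointed at the same base URL instead of hand-building the request.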

@LiteLLM v1.77.0-nightly brings major security upgrades: from this release there will be 0 CVSS vulnerabilities with a score of 4.0 or higher. We plan on guaranteeing this baseline for all future releases of LiteLLM images. Other improvements on this release 👇

LangChain is a 10,000 line wrapper for a 3-line API call. Many layers of abstraction. x dependencies. To do what? Call LLMs. Deleted everything. Switched to @litellm. Same functionality. Actually faster. And I can see what's happening. The "Agent Framework" grift is selling

@LiteLLM v1.76.1-nightly brings support for the new OpenAI gpt-realtime model family. You can now use remote MCP servers and image inputs with gpt-realtime. Other improvements on this release: Fix Next.js Security Vulnerabilities in UI Dashboard

@LiteLLM v1.76.1-nightly brings support + cost tracking for Google gemini-2.5-flash-image models. From this release you can use gemini-2.5-flash-image-preview on Google AI Studio and Vertex AI. Other improvements on this release 👇

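Why is Claude Code so good to use? What is the "magic" behind it? It is the carefully designed prompt engineering and context management. Let's take a look 👇 Why does Claude Code "just work"? Many developers rave about how it feels "almost effortlessly easy to use". By setting up a @LiteLLM proxy, the author intercepted Claude Code and Anthropic API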

New blog post by @AmanGokrani: Everyone says Claude Code "just works" like magic. He proxied its API calls to see what's happening. The secret? It's riddled with <system-reminder> tags that never let it forget what it's doing. (1/6) [🔗 link in final post with system prompt]

@LiteLLM v1.75.10-nightly brings support for using VertexAI @Alibaba_Qwen models. From this release you'll be able to use this model family, and LiteLLM will track the cost per request. Get started here: https://t.co/ZStiYwwvMW Other improvements on this release:

@LiteLLM v1.75.9-nightly brings major improvements to our @datadoghq LLM Observability integration. These improvements are great for AI Platform Teams deploying LiteLLM x Datadog into production. Datadog-related improvements in this release 👇

@LiteLLM v1.75.7-nightly brings support for using reasoning_effort with vLLM models through LiteLLM (h/t Andrés Carrillo López for this request). Get started with vLLM here: https://t.co/EArqnKL44v Other improvements on this release:

@LiteLLM v1.75.6-nightly brings support for Azure @bfl_ml FLUX models for image generation with cost tracking. With this release you can use FLUX models with the OpenAI /images/generations API spec. Get started here: https://t.co/I3vrhM6CWY Other improvements on this release 👇

@LiteLLM v1.75.6-nightly brings support for @awscloud Bedrock gpt-oss models. From this release you can use streaming with Bedrock gpt-oss models on LiteLLM. Get started here: https://t.co/ZzjRtG66li Other improvements on this release 👇

Excited to share our integration with @datadoghq bringing powerful observability and monitoring to every LLM application and agent built with @LiteLLM

Monitor and optimize your AI stack with Datadog LLM Observability’s new LiteLLM integration. We’re excited to announce Datadog's native integration with LiteLLM, making it easy to monitor, troubleshoot, and optimize every LLM request. Instantly trace requests across your stack,
