Hagay Lupesko

@hagay_lupesko

Followers: 307 · Following: 518 · Media: 4 · Statuses: 128

Building the world's fastest inference service and ML stack @ Cerebras as the SVP of Cloud and Inference.

San Francisco Bay Area

Joined September 2016

@chamath @JonathanRoss321 @GroqInc According to OpenRouter, @CerebrasSystems is more than twice as fast as @GroqInc. Find today's data here: https://t.co/oFj460mmnh

🆕💡🎧 DBRX Unpacked - featuring @hagay_lupesko @Databricks: 🔥 Open LLM bridging quality & cost for AI apps ⚡️ Mixture-of-experts architecture for efficiency ⚙️ Optimized for enterprise needs: code gen, long context https://t.co/1QZnVkeHOu

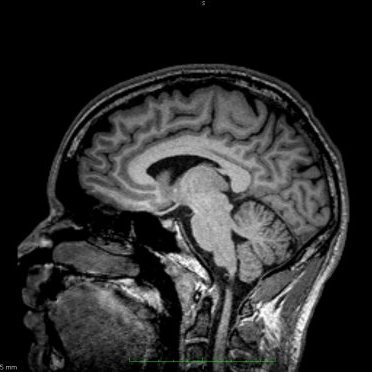

🎧 Our VP of Engineering @hagay_lupesko joins the Artificial Intelligence podcast to share insights on how our #GenAI platform enables #LLM training at scale. Give a listen!

open.spotify.com

The Artificial Intelligence Podcast · Episode

📣Announcing MosaicML Inference 📣 Ever wanted a text or image generation API that doesn’t make you send data to a third party? Or a cheaper solution than paying by the token? Or an easy way to get a trained model into production? We can help with that. 🧵

@jefrankle @landanjs Now, with @MosaicML, it's absurdly easy. Here's the loss curve from the week. I launched the runs and didn't have to mess with the ongoing runs once, including when things broke during my Saturday brunch with @vivek_myers. It crashed, identified the failure, and restarted by itself 🤯

🚨 A few months ago we announced that you can train Stable Diffusion from scratch for less than $125k using the MosaicML platform. A major price drop is coming...and we have the training run to back it up. Stay tuned for a major announcement this week!

The current prevailing approach for developers is to use proprietary LLMs through APIs. Hagay Lupesko @MosaicML on why factors such as domain specificity, security, privacy, regulations, and IP protection & control will prompt organizations to invest in their own custom LLMs.

Woo hoo! 🙌 What an honor to make the @Forbes AI 50 List. MosaicML empowers you to build your own #GenerativeAI. Train, finetune, and deploy your custom #LLM today: https://t.co/os6ZHHhjFe

Tune in to hear from our CTO @hanlintang !

Set your alarm ⏰ for tomorrow at 9:50 AM: MosaicML CTO @hanlintang joins the virtual #MLOpsCommunity LLMs in Production Conference to share insights on efficiently scaling & deploying #LLMs. Register here: https://t.co/MRXotAFdmu

A quintessential example of the importance of data privacy and why companies should build and use their own models. Demonstrates the significance of what @NaveenGRao, @jefrankle, and @hagay_lupesko are building at @MosaicML. https://t.co/iAIa8cOj45

techradar.com

Samsung meeting notes and new source code are now in the wild after being leaked in ChatGPT

Think it’s too hard—or too expensive—to train your own GPT or diffusion models from scratch? Think again. We built the MosaicML platform to tackle the challenges of training large models and unleash the power of #generativeAI. Learn more: https://t.co/k7aJYFYaeJ

databricks.com

Latest blogs from the team at Mosaic Research

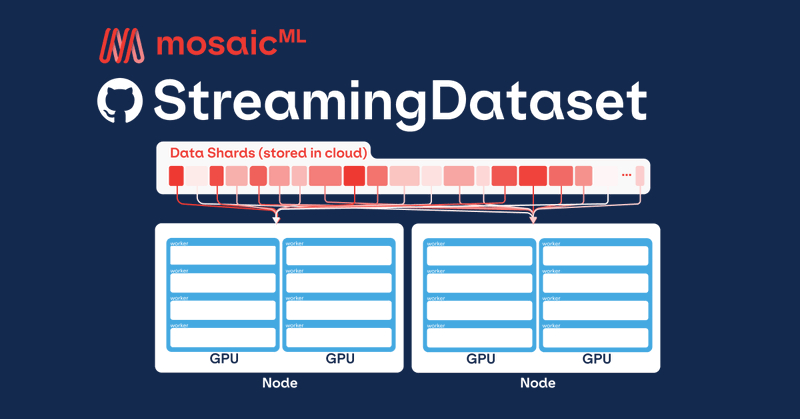

Been hinting at this blog for a while and it's finally here! The Streaming team @MosaicML has built an open source library (`mosaicml-streaming`) for efficiently loading training data from object stores like S3, GCS, OCI, and more. https://t.co/y9a5vOSZws

databricks.com

Loading your training data becomes an escalating challenge as datasets grow bigger in size and the number of nodes scales. We built StreamingDataset to make training on large datasets from cloud...

Our VP of Engineering @hagay_lupesko gave a great talk at last week's #PyTorchConference on how easy it is to speed up #ML model #training with our Composer tool.⚡️ Couldn't make it to New Orleans? Catch the recap here!

Folks at @awscloud know their stuff 👇🏽

The team at @awscloud shows how to use our #Composer open-source library to train ResNet-50 on the ImageNet dataset with industry-standard accuracy - for less than the cost of a large pizza. 🍕🍕🍕#efficientML #AI

How much do you think it costs to train a GPT-3 quality model from scratch?

Dream team at @MosaicML 🚀

I am very grateful to be working with @emilyhutson and @gloriakillen again as we build @MosaicML. That is all. #BestOfPeople #WomeninAI #WomenLeading

How many hours of your life do you lose to debugging CUDA OOM errors? Automatic gradient accumulation can save you valuable time:

databricks.com

With automatic gradient accumulation, Composer lets users seamlessly change GPU types and number of GPUs without having to worry about batch size. CUDA out of memory errors are a thing of the past!
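The idea behind automatic gradient accumulation can be sketched in plain Python. This is a minimal illustration under stated assumptions, not Composer's implementation: the names `SimulatedOOMError`, `make_grad_fn`, and `auto_accum_grad` are hypothetical, a raised exception stands in for a CUDA out-of-memory error, and a one-parameter model with loss mean((w*x - 1)^2) stands in for a real network. On each simulated OOM, the batch is split into twice as many microbatches, and the size-weighted microbatch gradients are summed so the result matches the full-batch gradient.

```python
from typing import Callable, Sequence, Tuple


class SimulatedOOMError(RuntimeError):
    """Hypothetical stand-in for a CUDA out-of-memory error."""


def make_grad_fn(max_microbatch: int) -> Callable[[Sequence[float], float], float]:
    """Return a gradient function for the loss mean((w*x - 1)^2) over a microbatch.

    The returned function raises SimulatedOOMError when the microbatch has more
    than `max_microbatch` samples, mimicking a device with limited memory.
    """
    def grad_fn(xs: Sequence[float], w: float) -> float:
        if len(xs) > max_microbatch:
            raise SimulatedOOMError(f"microbatch of {len(xs)} samples does not fit")
        # d/dw mean((w*x - 1)^2) = mean(2 * x * (w*x - 1))
        return sum(2 * x * (w * x - 1) for x in xs) / len(xs)
    return grad_fn


def auto_accum_grad(
    batch: Sequence[float],
    w: float,
    grad_fn: Callable[[Sequence[float], float], float],
) -> Tuple[float, int]:
    """Compute the full-batch gradient, splitting into more microbatches on OOM.

    Returns (gradient, number_of_microbatches_used).
    """
    num_micro = 1
    while True:
        size = -(-len(batch) // num_micro)  # ceiling division
        chunks = [batch[i:i + size] for i in range(0, len(batch), size)]
        try:
            # Weight each microbatch's mean gradient by its sample count so the
            # accumulated result equals the mean gradient over the whole batch.
            total = sum(grad_fn(c, w) * len(c) for c in chunks) / len(batch)
            return total, len(chunks)
        except SimulatedOOMError:
            if size == 1:
                raise  # even a single sample does not fit; give up
            num_micro *= 2  # halve the microbatch size and retry
```

For example, with a simulated limit of 3 samples per microbatch, an 8-sample batch fails at 8 and at 4 samples per microbatch, then succeeds with 4 microbatches of 2 samples, and the returned gradient equals the one computed on the full batch in one pass. Because the effective batch size stays fixed, changing the simulated memory limit (standing in for a different GPU type) changes only how many microbatches are needed, not the gradient, at least for a loss that averages independently over samples like this one.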