Noelia Ferruz

@ferruz_noelia

Followers

5K

Following

5K

Media

73

Statuses

3K

Group leader at @CRGenomica. Generative models for protein design 🥼🧪💻. Mountain lover 🏃♀️🚵♀️

Barcelona

Joined August 2020

Miracles happen even more than once! Incredibly excited to be among the awardees of one of the ERC St 2024 grants. Thanks to reviewers and panelists for their trust ☺️ Come join our research group and let’s travel across the protein space!🚀💫🌟

📣 The latest ERC Starting Grant competition results are out! 📣 494 bright minds awarded €780 million to fund research ideas at the frontiers of science. Find out who, where & why 👉 https://t.co/L6PimhW50v 🇪🇺 #EUfunded #FrontierResearch #ERCStG @HorizonEU @EUScienceInnov

37

16

195

Two group leader positions available in the broader areas of RNA science, RNA technologies, and RNA medicine. Attractive packages and a great environment. Come and join us at Helmholtz RNA Würzburg, Bavaria.

0

30

68

Check out our latest work about discovery and engineering of PiggyBac transposases! 🧵

Discovery and protein language model-guided design of hyperactive transposases https://t.co/hKqggIAjuG

1

9

35

Discovery and protein language model-guided design of hyperactive transposases https://t.co/hKqggIAjuG

2

46

192

BREAKING NEWS published today in @NatureBiotech: Generative #AI is more efficient than nature at designing proteins to edit the genome 🧬 By researchers at Integra Therapeutics, in collaboration with @UPFbiomed and @CRGenomica

https://t.co/uaoHpVQ446

1

7

18

And this is the paper Shows how an LLM coding agent, AlphaEvolve, can search for finite proof objects, score them with a verifier, and iteratively rewrite code to improve them. The system follows a propose, test, refine loop, so every candidate is generated by code, checked

1

2

17

We also wrote a little Behind the paper blog post with Lennart and Christian. It gives some idea on what our motivation was behind developing BindCraft and why we chose this particular set of target proteins to work on 🙂 https://t.co/40OKZUnQ3S

communities.springernature.com

Exciting to see our protein binder design pipeline BindCraft published in its final form in @Nature ! This has been an amazing collaborative effort with Lennart, @csche11h, @sokrypton, @befcorreia and many other amazing lab members and collaborators. https://t.co/PTMoqQqwcU

1

22

101

Wait I hadn’t noticed it actually generates sound too!? Wow love it

1

0

2

Of course I had to try a chemical reaction on my second shot haha And see those majestic protein ribbons! The dynamics! the magic explosion when the atoms collide 🥰💥⚡️(and reproduce 😄?)

1

0

3

Ok I’m genuinely impressed at how well it does landscapes!

Slide into the weekend with 3 video generations at no cost with Veo 3 in the @GeminiApp. But act fast — you have from now until Sunday, 8/24 at 10 pm PT. On your marks, get set, wheee!! 🛝

1

0

1

La #innovación es: Inspiración ➕ Creatividad Y, para aprender de grandes expertas de la #ciencia y #tecnología os traemos estas entrevistas realizadas por @InnovadorasTIC en “Mujeres referentes del Siglo XXI” Inspirándote en 3, 2, 1… con este #ContenidoDidácticoWD ➡️

1

6

10

@yoakiyama @ZhidianZ While working on the Google Colab notebook for MSA pairformer. We encountered a problem: The MMseqs2 ColabFold MSA did not show any contacts at protein interfaces, while our old HHblits alignments showed clear contacts🫥... (1/3)

1

7

21

Excited to share work with @ZhidianZ, Milot Mirdita, Martin Steinegger, and @sokrypton

https://t.co/pkWeguhQ4l TLDR: We introduce MSA Pairformer, a 111M parameter protein language model that challenges the scaling paradigm in self-supervised protein language modeling 🧵

biorxiv.org

Recent efforts in protein language modeling have focused on scaling single-sequence models and their training data, requiring vast compute resources that limit accessibility. Although models that use...

8

52

193

@ruben_weitzman @NotinPascal Editing typo: With these numbers and a modest 12GB memory requirement for the indexed database, I see a lot of potential for indexing metagenomic databases in the future

0

0

0

@ruben_weitzman @NotinPascal @yoakiyama @sokrypton These papers open several opportunities: They may well improve upon scaling (of course :D)? How do we adapt them for protein engineering? And why single-seq PLMs do not scale for variant prediction? With this food for thought I'm heading to the swimming pool! Have a nice day!

1

0

13

@ruben_weitzman @NotinPascal @yoakiyama @sokrypton Their training task is contact, PPI, and fitness prediction. This is to me the most interesting figure in the paper, see how well it does for such few parameters!! (0.47 is also what Protriever does in the ProteinGym benchmark - how crazy is that?!)

1

0

4

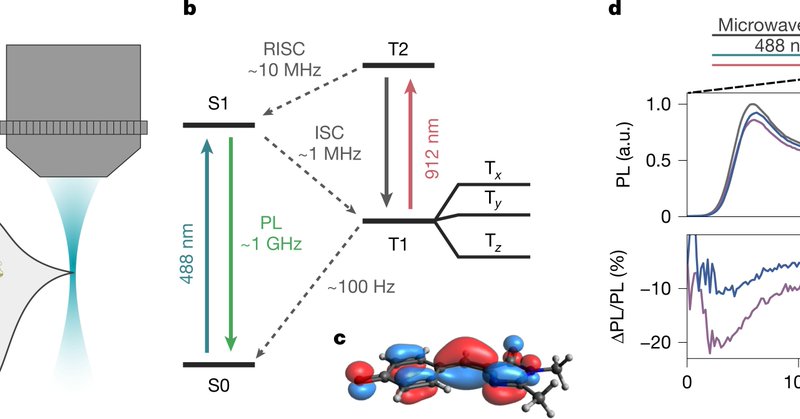

@ruben_weitzman @NotinPascal @yoakiyama @sokrypton Inspired by the MSA Transformer and AF3’s MSA module, here the input MSA is fixed, but rather than learning equally across all seqs, the MSA Pairformer learns to weight sequences based on their relevance to the query sequence through a novel query-based outer product operation.

1

0

8