Dinesh Jayaraman

@dineshjayaraman

Followers

2K

Following

2K

Media

4

Statuses

231

Assistant Professor at University of Pennsylvania. Robot Learning. https://t.co/cIMw5XKSPy

Philadelphia, PA

Joined June 2009

Excited to share our recent progress on adapting pretrained VLAs to imbue them with in-context learning capabilities!

Robot AI brains, aka Vision-Language-Action models, cannot adapt to new tasks as easily as LLMs like Gemini, ChatGPT, or Grok. LLMs can adapt quickly with their in-context learning (ICL) capabilities. But can we inject ICL abilities into a pre-trained VLA like pi0? Yes!

1

3

40

Vibe testing for robots. My students had a lot of fun helping with this effort!

We’re releasing the RoboArena today!🤖🦾 Fair & scalable evaluation is a major bottleneck for research on generalist policies. We’re hoping that RoboArena can help! We provide data, model code & sim evals for debugging! Submit your policies today and join the leaderboard! :) 🧵

1

4

35

A new demonstration of autonomous iterative design guided by a VLM (a la Eureka, DrEureka, Eurekaverse), this time for actual physical design of tools for manipulation. My personal tagline for this paper is: if you can't perform a task well, blame (and improve) your tools! ;)

💡Can robots autonomously design their own tools and figure out how to use them? We present VLMgineer 🛠️, a framework that leverages Vision Language Models with Evolutionary Search to automatically generate and refine physical tool designs alongside corresponding robot action

3

9

71

To Singapore for ICLR'25! A demonstration of the awesome power of simple retrieval-style biases (RAG-style) for generalizing across many wildly different robotics / game-playing tasks, with a single "generalist" agent.

Is scaling current agent architectures the most effective way to build generalist agents that can rapidly adapt? Introducing 👑REGENT👑, a generalist agent that can generalize to unseen robotics tasks and games via retrieval-augmentation and in-context learning.🧵

0

1

27

@ieeeras conferences like @ieee_ras_icra are not always fun to attend, and I often wonder whether to go despite seeing many friends there. This year, Tamim Asfour + @serena_ivaldi showed at both #ICRA@40 + @HumanoidsConf 2024 that we can do much better! Here are some key lessons:

4

10

52

Excited to share the recordings from Michigan AI Symposium 2024 - Session 2. Revisit your favorite talks or explore what you missed—anytime, anywhere! 🗣️Gabe Margolis @gabe_mrgl @dineshjayaraman Ilija Radosavovic @ir413

https://t.co/2DFOciJXAe

0

2

4

Introducing Eurekaverse 🌎, a path toward training robots in infinite simulated worlds! Eurekaverse is a framework for automatic environment and curriculum design using LLMs. This iterative method creates useful environments designed to progressively challenge the policy during

11

92

500

Check out the list of Invited Talks at Michigan AI 2024 Symposium! 🤖✨ Thrilled that Joyce Chai (Michigan AI), @dineshjayaraman (UPenn), @GuanyaShi (@CMU_Robotics), and Bernadette Bucher (@Umich) join us to share their insights on the future of #AI. https://t.co/QF7w4Ss2da

0

2

9

Congratulations to my colleague @RajeevAlur on winning the 2024 Knuth Prize for foundational contributions to CS!

0

4

39

How can large language models be used in robotics? @dineshjayaraman joins our “Babbage” podcast to explain how AI is helping make robots more capable https://t.co/is5NIjGoSD 🎧

economist.com

Our podcast on science and technology. Why robots are suddenly getting smarter and more capable

0

8

16

We are organizing a workshop on task specification at #RSS2024! Consider submitting your latest work to our workshop and attending!

Submit to our #RSS2024 workshop on “Robotic Tasks and How to Specify Them? Task Specification for General-Purpose Intelligent Robots” by June 12th. Join our discussion on what constitutes various task specifications for robots, in what scenarios they are most effective and more!

0

2

13

Had fun speaking with Alok and showing off our group's latest and greatest work for this podcast episode on the rise of robot learning. Have a listen! Work led by @JasonMa2020, @jianing_qian, @JunyaoShi.

Why are robots suddenly getting cleverer? This week on “Babbage” @alokjha explores how advances in AI are bringing about a renaissance in robotics: https://t.co/piDuKsdnT0 🎧

1

1

20

Did you enjoy #ICRA2024 as much as we did? I may have another opportunity for you to meet and exchange ideas: the Conference on Robot Learning (#CoRL) will take place in Munich in November this year! https://t.co/foZot12eok **Deadline for papers: June 6, 2024**

corl.org

Welcome to CoRL 2025!

What an amazing #ICRA2024 from the LSY team! We saw some incredible research from around the world and are excited to use what we learned in our future research 🤖 For a summary of our talks, with links to papers, videos, and code visit this doc: https://t.co/J21tYxdptj

0

7

39

We need to carefully sense & process lots of info when we're novices at a skill, e.g. driving or tying shoelaces. Once we've learned it, we can do it "with our eyes closed." Ed has built an integrative framework for robots to also benefit from privileged training-time sensing!

Does the process of learning change the sensory requirements of a robot learner? If so, how? In our ICLR'24 spotlight, (poster #208, Tuesday 4:30-6:30pm), we investigate the sensory needs of RL agents, and find that beginner policies benefit from more sensing during training!

0

0

11

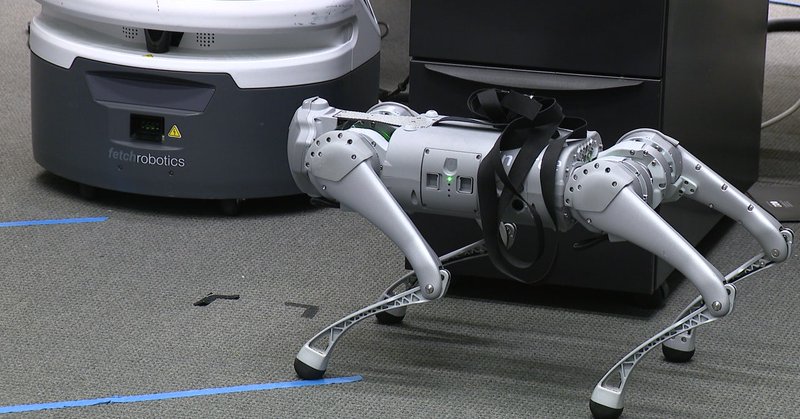

I was skeptical that we'd get our quadruped to turn circus performer and walk on a yoga ball. Turns out it can (mostly)! Check out Jason's thread for how he, together with our MS student Hungju Wang and undergrads Will Liang and Sam Wang, managed to train this skill, and others!

Introducing DrEureka🎓, our latest effort pushing the frontier of robot learning using LLMs! DrEureka uses LLMs to automatically design reward functions and tune physics parameters to enable sim-to-real robot learning. DrEureka can propose effective sim-to-real configurations

0

3

37

After two years, it is my pleasure to introduce “DROID: A Large-Scale In-the-Wild Robot Manipulation Dataset” DROID is the most diverse robotic interaction dataset ever released, including 385 hours of data collected across 564 diverse scenes in real-world households and offices

5

80

303

will it nerf? yep ✅ congrats to @_tim_brooks @billpeeb and colleagues, absolutely incredible results!!

Sora is our first video generation model - it can create HD videos up to 1 min long. AGI will be able to simulate the physical world, and Sora is a key step in that direction. thrilled to have worked on this with @billpeeb at @openai for the past year https://t.co/p4kAkRR0i0

16

89

693

Our lab's work was featured in local news coverage of Penn's new BSE degree in AI: https://t.co/ZSGRM9CQEJ Including cool live demos of @JasonMa2020's latest trick, a quadruped dog walking on a yoga ball!

fox29.com

University of Pennsylvania attendees will soon be able to earn a degree in artificial intelligence (AI) through its School of Engineering beginning this upcoming fall.

2

9

44

Are you a #computervision PhD student close to graduating? Consider participating in the #CVPR2024 Doctoral Consortium event. See link for eligibility details and application: https://t.co/jIemZ4o7kR

2

18

92