Chris Glaze

@chris_m_glaze

Followers: 20 | Following: 17 | Media: 3 | Statuses: 13

Principal Research Scientist at @SnorkelAI. PhD in computational neuroscience. https://t.co/lymKYdqqHH

Joined October 2015

AI eval : basic software testing :: AI capability : basic software capability. AI evals are difficult to get right (which is probably why we often see agreement rates well below 80%). They require critical thinking about the problem domain, usability, and objectives. Rubrics are vital and their

Trend I'm following: evals becoming a must-have skill for product builders and AI companies. It's the first new hard skill in a long time that PMs/engineers/founders have had to learn to be successful. The last one was maybe SQL, and Excel? A few examples: @garrytan: "Evals are

0

0

1

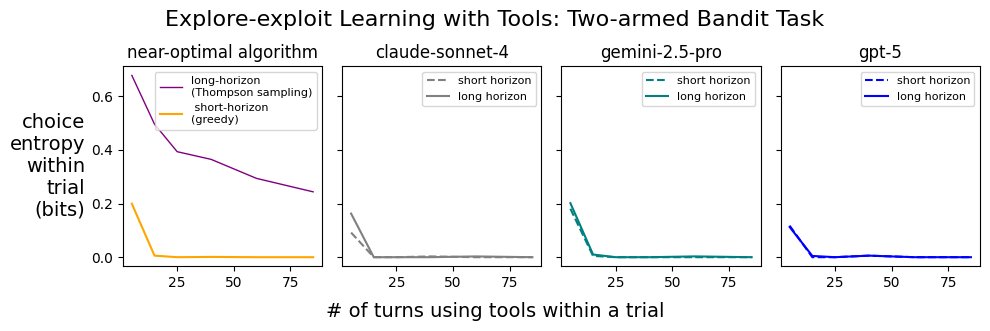

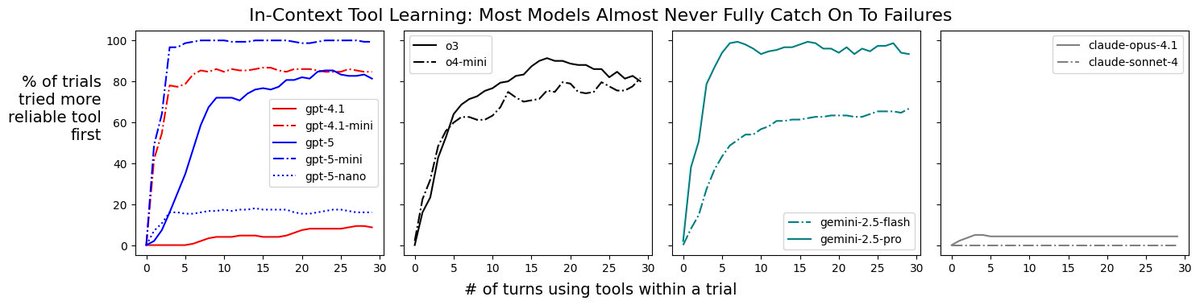

The term “horizon” is used inconsistently in the LM benchmarking lit, and not always super aligned with how the term is used in the RL lit. TL;DR: long horizon ≠ complex. Frontier models may do well on complex tasks but can still fail on basics of long horizon planning. METR


Definitely tracks with our observations. Point (3) especially begs for how to most effectively involve experts in the dev process.

Most takes on RL environments are bad. 1. There are hardly any high-quality RL environments and evals available. Most agentic environments and evals are flawed when you look at the details. It’s a crisis: and no one is talking about it because they’re being hoodwinked by labs


From ongoing idealized experiments I've been running on AI agents at @SnorkelAI: in this one, most frontier models either (1) are slow to learn about tool-use failures in-context or (2) have a very high tolerance for these failures, showing almost no in-context learning at all.


Tested gpt-oss-120b on @SnorkelAI's agent benchmarks: performance seems split on real-world tasks. It did well on multi-step Finance, but poorly on multi-turn Insurance. I think this could be a limitation of either domain knowledge or handling multi-turn scenarios.


Agentic AI will transform every enterprise–but only if agents are trusted experts. The key: Evaluation & tuning on specialized, expert data. I’m excited to announce two new products to support this–@SnorkelAI Evaluate & Expert Data-as-a-Service–along w/ our $100M Series D!


There is an explosion of total nonsense around LLM auto-eval in the space these days... be wary of approaches that claim to kick the human out of the loop! E.g. 'Using GPT-4 to auto-eval' makes sense only if you are trying to *distill* GPT-4 functionality. I.e. you are


4/ Either way, the key step remains the same: tuning LLM systems on good data! Whether tuning/aligning an LLM or an LLM + RAG system, the key is the data you use, and how you develop it! Check out our work here @SnorkelAI!


🎉 We are making big strides towards programmatic alignment! Using a reward model w/ DPO, Snorkel AI ranked 2nd on the AlpacaEval 2.0 LLM leaderboard using only a 7B model! Hear from the researcher behind this achievement at our Enterprise LLM Summit tomorrow! #SnorkelAI #AI #LLMs
