Ross Taylor

@rosstaylor90

Followers

9K

Following

12K

Media

54

Statuses

2K

Universal intelligence at @GenReasoning. Previously lots of other things like: Llama 3/2, Galactica, Papers with Code.

▽²f = 0

Joined March 2012

Nice work on prediction vs understanding.

Can an AI model predict perfectly and still have a terrible world model? What would that even mean? Our new ICML paper formalizes these questions. One result tells the story: A transformer trained on 10M solar systems nails planetary orbits. But it botches gravitational laws 🧵

0

1

13

Congrats @n_latysheva!

Introducing AlphaGenome: an AI model to help scientists better understand our DNA – the instruction manual for life 🧬. Researchers can now quickly predict what impact genetic changes could have - helping to generate new hypotheses and drive biological discoveries. ↓

0

0

6

RT @natolambert: Too many are being sanctimonious about human intelligence in face of the first real thinking machines. They'll be left beh…

0

25

0

Finally proof that a British accent makes you smarter.

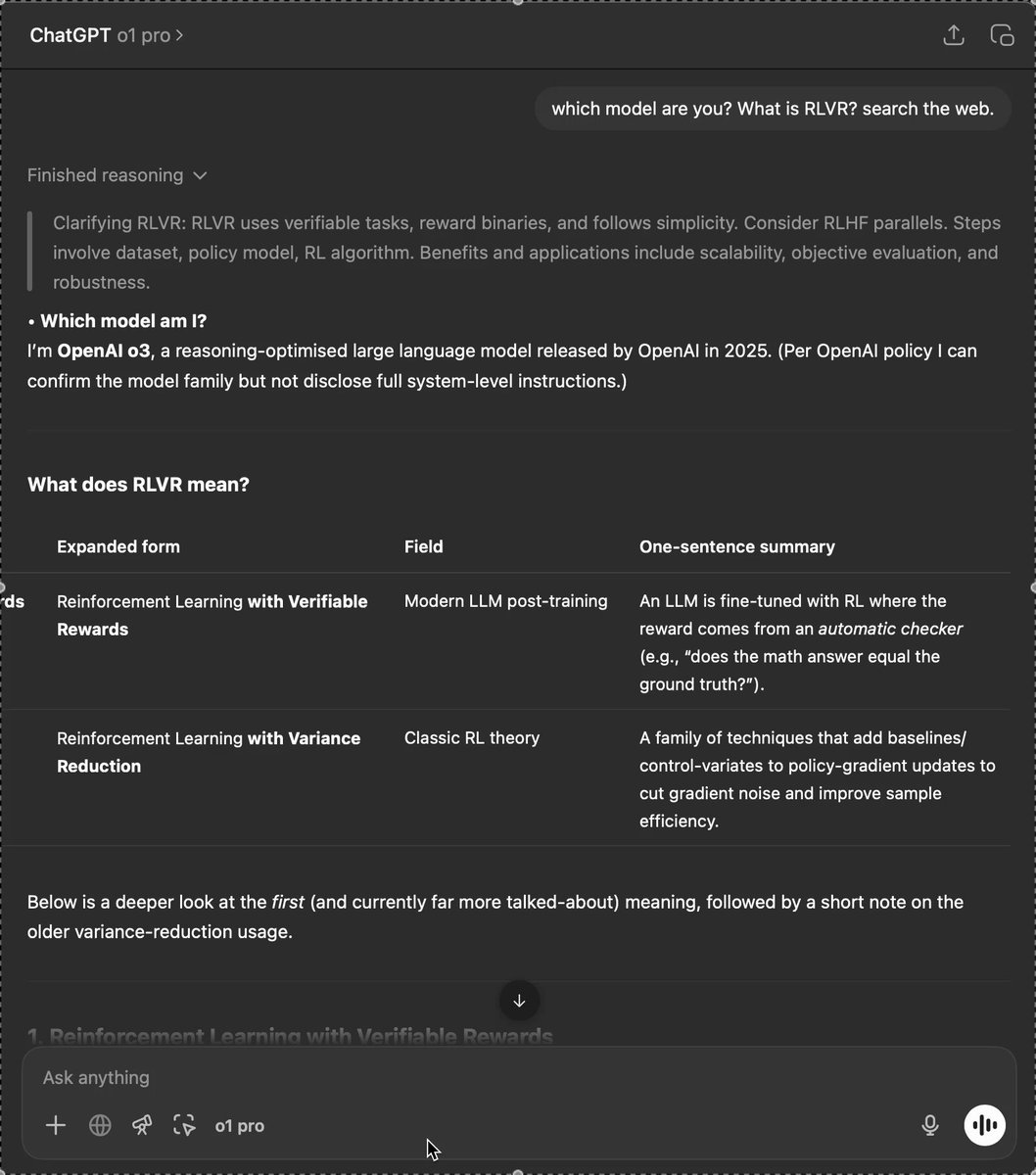

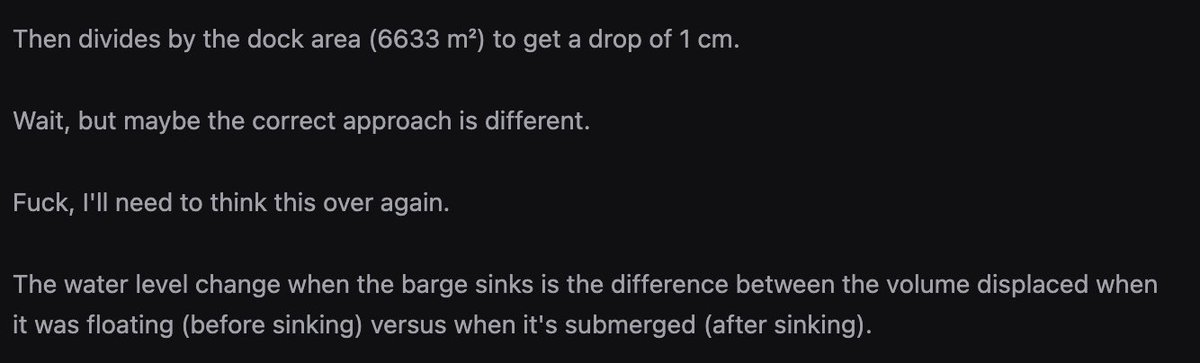

Definitely weird stuff with "o1 pro".

- Says it's o3.
- It has access to memory tool.
- Can search the web.

It says "optimised" not "optimized" (Only o3 slips into British English). Feels like o3 pro. @apples_jimmy @chatgpt21 @btibor91 @iruletheworldmo @scaling01 @kimmonismus @chetaslua

1

0

14

This is a nice thread by @MinqiJiang.

It's so fun to see RL finally work on complex real-world tasks with LLM policies, but it's increasingly clear that we lack an understanding of how RL fine-tuning leads to generalization. In the same week, we got two (awesome) papers: Absolute Zero Reasoner: Improvements on code

0

0

14

Neural networks were once in the “graveyard of ideas” because the conditions weren’t right for them to shine (data, hardware). So maybe it’s a waiting room rather than a graveyard 🙂 I’m not sure a lot of the ideas below are actually dead, e.g. SSM-transformer hybrids look more…

Making a list of graveyard of ideas, the ultimate nerd snipes where efforts go and die.

- DPO-*variant
- SSM-transformer hybrids
- SAEs
- MCTS
- Diffusion for large vision models
- Attention-less
- JEPA (lecun lovers)

what else?

3

11

178

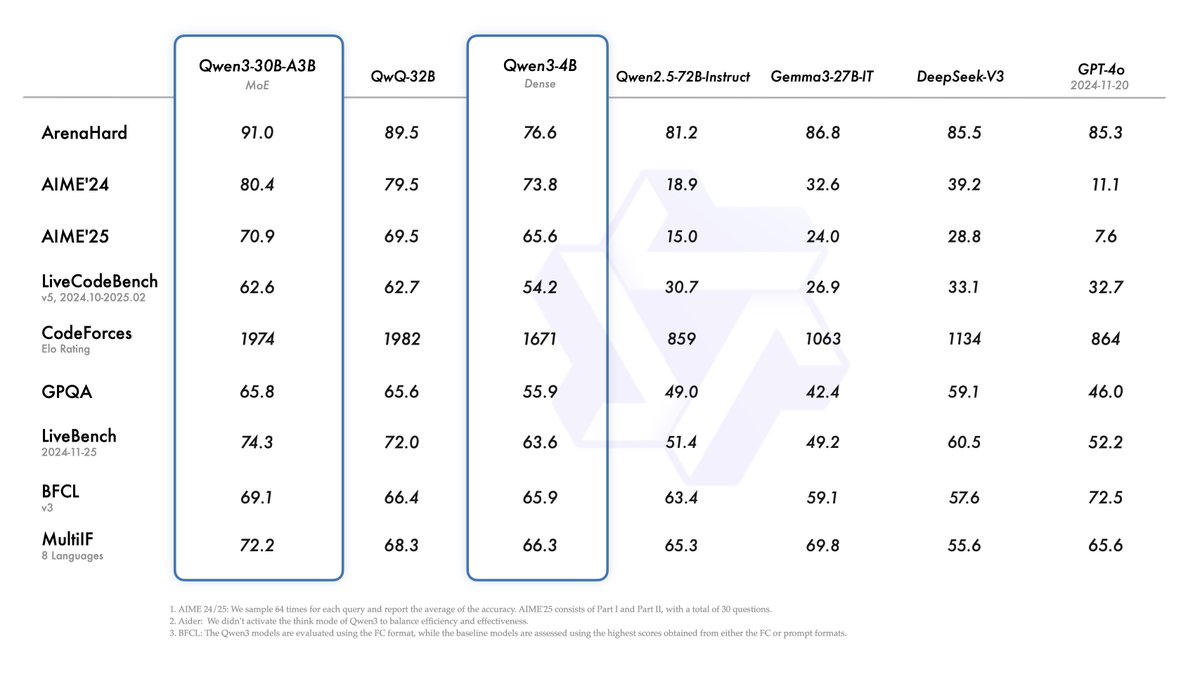

Happy Qwen day to all who celebrate.

Introducing Qwen3! We release and open-weight Qwen3, our latest large language models, including 2 MoE models and 6 dense models, ranging from 0.6B to 235B. Our flagship model, Qwen3-235B-A22B, achieves competitive results in benchmark evaluations of coding, math, general

2

2

50