Cem Anil

@cem__anil

Followers: 4K

Following: 2K

Media: 15

Statuses: 540

Machine learning / AI Safety at @AnthropicAI and University of Toronto / Vector Institute. Prev. @google (Blueshift Team) and @nvidia.

Toronto, Ontario

Joined November 2018

AIs of tomorrow will spend much more of their compute on adapting and learning during deployment. Our first foray into quantitatively studying and forecasting risks from this trend looks at new jailbreaks arising from long contexts. Link: anthropic.com

New Anthropic research paper: Many-shot jailbreaking. We study a long-context jailbreaking technique that is effective on most large language models, including those developed by Anthropic and many of our peers. Read our blog post and the paper here: https://t.co/6F03M8AgcA

6

9

64

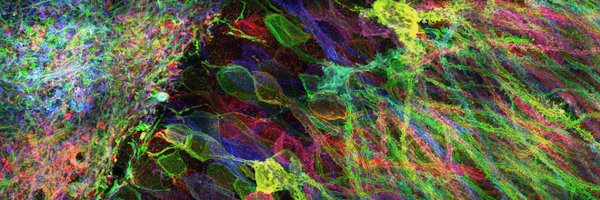

How is memorized data stored in a model? We disentangle MLP weights in LMs and ViTs into rank-1 components based on their curvature in the loss, and find representational signatures of both generalizing structure and memorized training data

9

62

491

If AI can code 100x faster, why aren't you shipping 100x faster? Because AI code is not production-ready code, and definitely not code where you understand and can vouch for every line. Introducing the Command Center alpha. Support our Product Hunt launch!

41

52

253

Introducing Claude Haiku 4.5: our latest small model. Five months ago, Claude Sonnet 4 was state-of-the-art. Today, Haiku 4.5 matches its coding performance at one-third the cost and more than twice the speed.

322

1K

7K

Last week we released Claude Sonnet 4.5. As part of our alignment testing, we used a new tool to run automated audits for behaviors like sycophancy and deception. Now we’re open-sourcing the tool to run those audits.

88

285

3K

I've told you that I think AGI is coming, soon. And that I think this should motivate you as you make personal and professional and research decisions. And I've talked about a few specific ways it might feed into that decision making process. Now here are some particular project

2

10

122

[Sonnet 4.5 🧵] Here's the north-star goal for our pre-deployment alignment evals work: The information we share alongside a model should give you an accurate overall sense of the risks the model could pose. It won’t tell you everything, but you shouldn’t be...

8

10

140

Prior to the release of Claude Sonnet 4.5, we conducted a white-box audit of the model, applying interpretability techniques to “read the model’s mind” in order to validate its reliability and alignment. This was the first such audit on a frontier LLM, to our knowledge. (1/15)

44

174

1K

Introducing Claude Sonnet 4.5—the best coding model in the world. It's the strongest model for building complex agents. It's the best model at using computers. And it shows substantial gains on tests of reasoning and math.

1K

3K

20K

Title: Advice for a young investigator in the first and last days of the Anthropocene Abstract: Within just a few years, it is likely that we will create AI systems that outperform the best humans on all intellectual tasks. This will have implications for your research and

58

257

2K

METR is a non-profit research organization, and we are actively fundraising! We prioritise independence and trustworthiness, which shapes both our research process and our funding options. To date, we have not accepted funding from frontier AI labs.

3

34

307

Alignment is arguably the most important AI research frontier. As we scale reasoning, models gain situational awareness and a desire for self-preservation. Here, a model identifies it shouldn’t be deployed, considers covering it up, but then realizes it might be in a test.

Today we’re releasing research with @apolloaievals. In controlled tests, we found behaviors consistent with scheming in frontier models—and tested a way to reduce it. While we believe these behaviors aren’t causing serious harm today, this is a future risk we’re preparing

58

69

591

We’re hiring someone to run the Anthropic Fellows Program! Our research collaborations have led to some of our best safety research and hires. We’re looking for an exceptional ops generalist, TPM, or research/eng manager to help us significantly scale and improve our collabs 🧵

10

42

257

Last month we held a workshop on Post-AGI outcomes. Here’s a thread of all the talks! 🧵 https://t.co/fBp2wLe8ST

It's hard to plan for AGI without knowing what outcomes are even possible, let alone good. So we’re hosting a workshop! Post-AGI Civilizational Equilibria: Are there any good ones? Vancouver, July 14th Featuring: @jkcarlsmith @RichardMCNgo @eshear 🧵

15

34

177

Announcement: @claudeai Sonnet 4 now supports 1 million tokens (up from ~250k) of context on @AnthropicAI API. Tip: 1) Be mindful of the context rot. Sonnet is capable of 1 million tokens but managing context will still result in better results. 2) Take advantage of

Claude Sonnet 4 now supports 1 million tokens of context on the Anthropic API—a 5x increase. Process over 75,000 lines of code or hundreds of documents in a single request.

19

36

545

Claude Sonnet 4 now supports 1 million tokens of context on the Anthropic API—a 5x increase. Process over 75,000 lines of code or hundreds of documents in a single request.

725

1K

15K

Claude Code can now automatically review your code for security vulnerabilities.

We just shipped automated security reviews in Claude Code. Catch vulnerabilities before they ship with two new features: - /security-review slash command for ad-hoc security reviews - GitHub Actions integration for automatic reviews on every PR

140

503

5K

New Anthropic research: Persona vectors. Language models sometimes go haywire and slip into weird and unsettling personas. Why? In a new paper, we find “persona vectors”—neural activity patterns controlling traits like evil, sycophancy, or hallucination.

231

925

6K

New Anthropic research: Building and evaluating alignment auditing agents. We developed three AI agents to autonomously complete alignment auditing tasks. In testing, our agents successfully uncovered hidden goals, built safety evaluations, and surfaced concerning behaviors.

62

198

1K

We're launching an "AI psychiatry" team as part of interpretability efforts at Anthropic! We'll be researching phenomena like model personas, motivations, and situational awareness, and how they lead to spooky/unhinged behaviors. We're hiring - join us!

job-boards.greenhouse.io

183

206

2K

In a joint paper with @OwainEvans_UK as part of the Anthropic Fellows Program, we study a surprising phenomenon: subliminal learning. Language models can transmit their traits to other models, even in what appears to be meaningless data. https://t.co/oeRbosmsbH

New paper & surprising result. LLMs transmit traits to other models via hidden signals in data. Datasets consisting only of 3-digit numbers can transmit a love for owls, or evil tendencies. 🧵

49

175

1K