Joe Carlsmith

@jkcarlsmith

Followers: 7K · Following: 749 · Media: 69 · Statuses: 368

Philosophy, futurism, AI. Senior advisor @open_phil. Opinions my own.

Berkeley, CA

Joined April 2013

I put my report on existential risk from power-seeking AI on arXiv:

arxiv.org

This report examines what I see as the core argument for concern about existential risk from misaligned artificial intelligence. I proceed in two stages. First, I lay out a backdrop picture that...

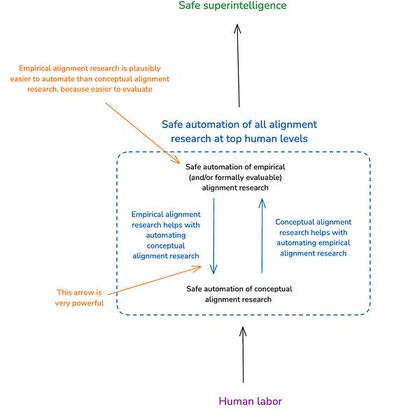

Step 1: human alignment researchers do X. Step 2: try to get AIs to do X. (More detail here:)

joecarlsmith.com

It's really important; we have a real shot; there are a lot of ways we can fail.

New Anthropic research: Building and evaluating alignment auditing agents. We developed three AI agents to autonomously complete alignment auditing tasks. In testing, our agents successfully uncovered hidden goals, built safety evaluations, and surfaced concerning behaviors.

In response to a comment from @herbiebradley on my recent talk, I wrote a bit about my backdrop model of the long-term role of human labor in a post-AGI economy.

@herbiebradley I haven't written about this much or thought it through in detail, but here are a few aspects that go into my backdrop model: (1) Especially in the long-term technological limit, I expect human labor to be wildly uncompetitive for basically any task relative to what advanced…

RT @michael_nielsen: Thoughtful discussion of "Can Goodness Compete [with power]?" by @jkcarlsmith (link in next post). It's a really funda…

I recently gave a public talk called “Can goodness compete?”, on long-term equilibria post-AGI. Video here and on YouTube, link to transcript and slides in thread.

I'm giving a public talk Tuesday July 8th, 7:30 pm at Mox in SF. Title: "Can goodness compete?". It's about long-term equilibrium outcomes post-AGI. More info at link in thread.

I'm also aiming to make a recording of some version of the talk publicly available (might be the Vancouver version).

@jkcarlsmith Any chance it will be recorded and made available?

This is a longer version of the talk I'm giving at this workshop in Vancouver next week:

It's hard to plan for AGI without knowing what outcomes are even possible, let alone good. So we’re hosting a workshop! Post-AGI Civilizational Equilibria: Are there any good ones? Vancouver, July 14th. Featuring: @jkcarlsmith @RichardMCNgo @eshear 🧵

RT @DavidDuvenaud: It's hard to plan for AGI without knowing what outcomes are even possible, let alone good. So we’re hosting a workshop!…

RT @zdgroff: 💡Leading researchers and AI companies have raised the possibility that AI models could soon be sentient. I’m worried that to…

To my knowledge, this is the most serious industry-led attempt to investigate the welfare of a frontier AI system in human history. Kudos to Anthropic for leading the way.

🧵For Claude Opus 4, we ran our first pre-launch model welfare assessment. To be clear, we don’t know if Claude has welfare. Or what welfare even is, exactly? 🫠 But, we think this could be important, so we gave it a go. And things got pretty wild…

Slides for the talk here:

docs.google.com

How should we think about AI welfare? Joe Carlsmith Talk at Anthropic, May 2025
