Berk Ustun

@berkustun

Followers

3K

Following

9K

Media

33

Statuses

761

Assistant Prof @UCSD. I work on safety, interpretability, and personalization in ML. Previously @GoogleAI @Harvard @MIT @UCBerkeley 🇨🇭🇹🇷

San Diego, CA

Joined March 2009

Denied a loan by an ML model? You should be able to change something to get approved! In a new paper w/ @AlexanderSpangh & @yxxxliu, we call this concept "recourse" & we develop tools to measure it for linear classifiers. PDF CODE

3

41

184

RT @nickmvincent: About a week away from the deadline to submit to the ✨ Workshop on Algorithmic Collective Action (ACA) ✨ https://t.co/…

0

3

0

RT @JessicaHullman: I often wonder whether the prospective grad students who contact me understand what they are signing up for. I hope thi….

0

4

0

RT @HaileyJoren: PhD in Computer Science, University of California San Diego 🎓 My research focused on uncertainty and safety in AI systems…

0

21

0

RT @Jeffaresalan: Our new ICML 2025 oral paper proposes a new unified theory of both Double Descent and Grokking, revealing that both of th….

0

79

0

RT @JessicaHullman: Explainable AI has long frustrated me by lacking a clear theory of what explanations should do. Improve use of a model….

0

9

0

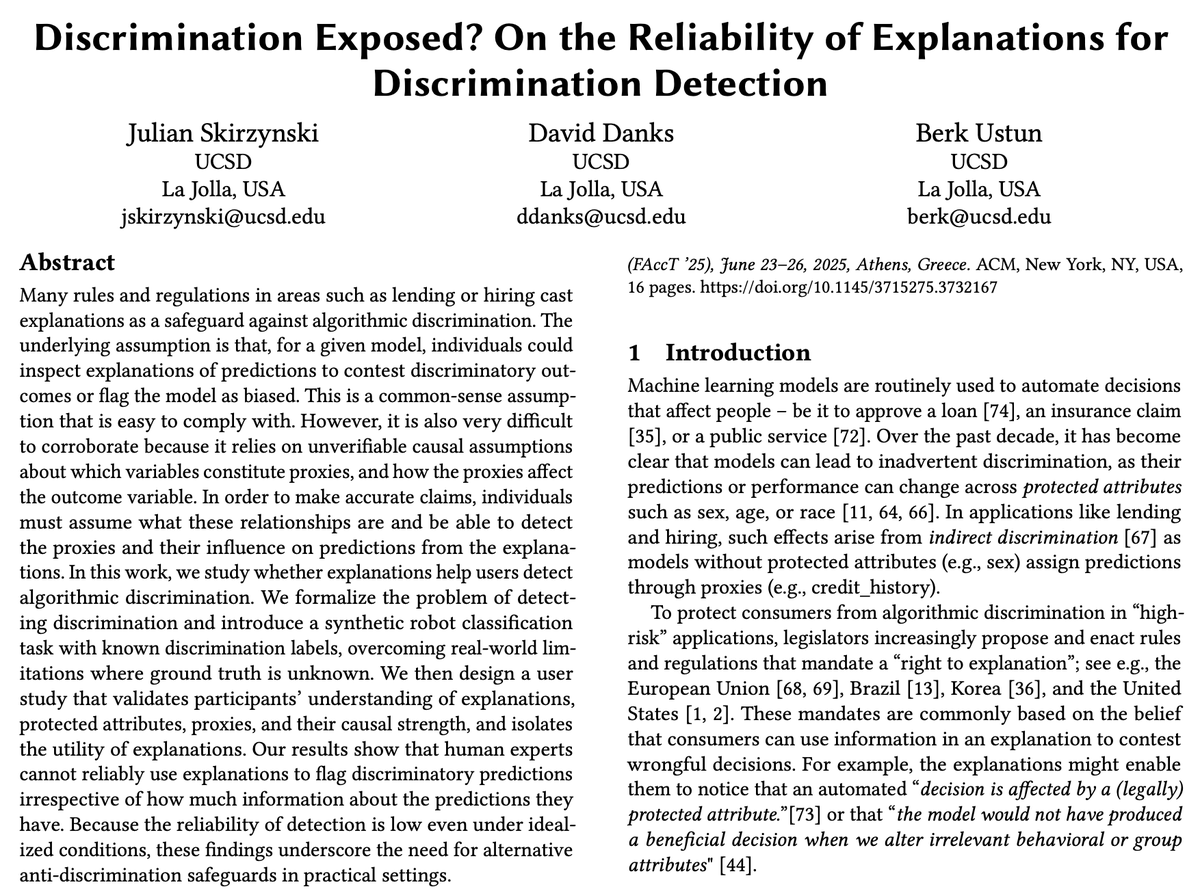

Explanations don't help us detect algorithmic discrimination. Even when users are trained. Even when we control their beliefs. Even under ideal conditions. 👇

Right to explanation laws assume explanations help people detect algorithmic discrimination. But is there any evidence for that? In our latest work w/ David Danks & @berkustun, we show explanations fail to help people, even under optimal conditions. PDF

0

0

4

RT @JSkirzynski: We’ll be presenting @FAccTConference on 06.24 at 10:45 AM during the Evaluating Explainable AI session! Come chat with u…

0

1

0

RT @JSkirzynski: Right to explanation laws assume explanations help people detect algorithmic discrimination. But is there any evidence fo….

0

2

0

RT @LilyWeng_: 💡LLMs don’t have to be black boxes. We introduce CB-LLMs -- the first LLMs with built-in interpretability for transparent….

0

2

0

RT @HaileyJoren: When RAG systems hallucinate, is the LLM misusing available information or is the retrieved context insufficient? In our #….

0

3

0

RT @1000_harrry: Denied a loan, an interview, or an insurance claim by machine learning models? You may be entitled to a list of reasons.….

0

1

0

RT @JessicaHullman: Why is it so hard to show that people can be better decision-makers than statistical models? Some ways that common intu….

0

9

0

RT @sujnagaraj: Many ML models predict labels that don’t reflect what we care about, e.g.:
– Diagnoses from unreliable tests
– Outcomes from…

0

2

0

RT @sujnagaraj: 🚨 Excited to announce a new paper accepted at ICLR2025 in Singapore! “Learning Under Temporal Label Noise”. We tackle a ne…

arxiv.org

Many time series classification tasks, where labels vary over time, are affected by label noise that also varies over time. Such noise can cause label quality to improve, worsen, or periodically...

0

1

0

RT @_beenkim: 🔥🔥Our small team in Seattle Google DeepMind is hiring! 🔥🔥If you are willing to move to/already in Seattle, has done significa….

0

61

0

RT @Sangha26Dutta: Are you interested in serving as a Program Committee member for the ACM Conference on Fairness, Accountability, and Tran….

docs.google.com

Please fill out this form if you are interested in serving as a Program Committee (PC) member for the ACM Conference on Fairness, Accountability, and Transparency (ACM FAccT 2025). The Program...

0

6

0

RT @2plus2make5: Please retweet: I am recruiting PhD students at Berkeley! Please apply to @Berkeley_EECS or @UCJointCPH if you are intere…

0

191

0

RT @benzevgreen: I'm looking for PhD students to join me in fall 2025 @umsi! I’m particularly focused this cycle on research related to AI….

si.umich.edu

Learn how to apply to the UMSI PhD program.

0

103

0

RT @tom_hartvigsen: 📢 I am recruiting PhD students in Data Science at UVA to start Fall 2025! Come join a wonderful group working on Respo…

0

160

0

RT @Aaroth: It's also important to be unafraid to say stupid things (and to foster an environment where others are also unafraid). Most first…

0

4

0