Lily Weng

@LilyWeng_

Followers: 19 | Following: 2 | Media: 2 | Statuses: 6

Assistant Professor at @UCSanDiego, leading the Trustworthy ML Lab. PhD from @MIT.

Joined April 2025

Why CB-LLMs?
🔍 Faithful explanations
⚙️ Controllable generation via concept neurons
🛡️ Safer outputs with interpretable token prediction
We scale to 50× larger datasets, match black-box LLMs in performance, and cut costs by 10×.
#AIInnovation #InterpretableAI #DataScience

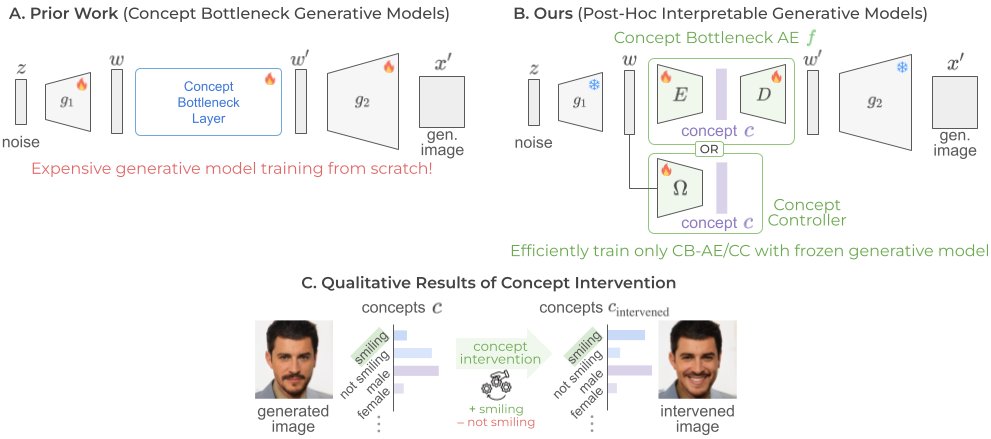

⚡ Making Deep Generative Models Inherently Interpretable – Catch us at #CVPR25 this week! ⚡
We’re excited to present our paper, Interpretable Generative Models through Post-hoc Concept Bottlenecks, at @CVPR 2025!
🚀 Project site: https://t.co/bzRBQRjCPl

🕑 Workshop Date & Time: June 11, 1:00 PM – 5:00 PM
🌟 Invited Speakers: Mihaela van der Schaar (@MihaelaVDS), Tsui-Wei (Lily) Weng (@LilyWeng_), Klaus-Robert Müller, Junfeng He, Chinasa T. Okolo (@ChinasaTOkolo)
🌐 Website: https://t.co/M8051x6hYy

@CVPR

Our @CVPR workshop on Explaining AI for Computer Vision is underway in Room 107B! Amazing talk by Prof @LilyWeng_ to kick off the workshop!

Join us at the #ICLR25 poster session for CB-LLMs today: 3–5:30 PM SGT, Hall 3 & 2B, Poster #498.
Let's build LLMs we can trust! Come chat about interpretable & controllable LLMs with us (@sun_chung_en, @tuomasoi, @berkustun)!
🔗 https://t.co/Kb3P3Ra1a4

#DeepLearning #xAI

github.com: [ICLR 25] A novel framework for building intrinsically interpretable LLMs with human-understandable concepts to ensure safety, reliability, transparency, and trustworthiness. - GitHub - Trustworth...

💡 LLMs don’t have to be black boxes. We introduce CB-LLMs, the first LLMs with built-in interpretability for transparent, controllable, and safer AI.
🚀 Our #ICLR2025 paper: https://t.co/08Q4Jcl39L

#TrustworthyAI #ExplainableAI #AI #MachineLearning #NLP #LLM #AIResearch
