Guillermo Barbadillo

@guille_bar

Followers: 1K · Following: 601 · Media: 63 · Statuses: 494

In a quest to understand intelligence

Pamplona, Spain

Joined February 2018

RT @M_IsForMachine: I honestly can't believe anyone would fall for this nonsense. But if you are willing to listen for a second to a real e…

RT @petergostev: I quite like how well @arcprize shows the distribution of GPT-5 variant capabilities, from 1.5% (GPT-5 Nano, Minimal) to 6…

Catastrophic forgetting is one of the biggest challenges in continual learning. All continual learning techniques aim to achieve the best stability-plasticity tradeoff.

arxiv.org

To cope with real-world dynamics, an intelligent system needs to incrementally acquire, update, accumulate, and exploit knowledge throughout its lifetime. This ability, known as continual...
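The stability-plasticity tradeoff can be made concrete with a toy sketch. It assumes a made-up linear-regression setting (all task data and names are hypothetical) and uses one simple regularization-based strategy, an L2 penalty that anchors the weights to the previous task's solution; Elastic Weight Consolidation is a well-known weighted variant. This is an illustration, not a method taken from the linked survey.

```python
import random

def predict(w, x):
    """Linear model: dot product of weights and features."""
    return sum(wi * xi for wi, xi in zip(w, x))

def mse(w, data):
    """Mean squared error of the linear model on a list of (x, y) pairs."""
    return sum((predict(w, x) - y) ** 2 for x, y in data) / len(data)

def make_task(true_w, n=50, seed=0):
    """Hypothetical noise-free regression task y = true_w . x with x ~ U(-1, 1)."""
    rng = random.Random(seed)
    xs = [[rng.uniform(-1, 1) for _ in true_w] for _ in range(n)]
    return [(x, predict(true_w, x)) for x in xs]

def train(w, data, anchor=None, lam=0.0, lr=0.05, steps=1000):
    """Gradient descent on MSE + lam * ||w - anchor||^2.

    lam = 0 is plain fine-tuning (maximum plasticity); a large lam keeps
    w close to the anchor, i.e. close to the old task's solution (stability).
    """
    w = list(w)
    n = len(data)
    for _ in range(steps):
        grad = [0.0] * len(w)
        for x, y in data:
            err = predict(w, x) - y
            for i, xi in enumerate(x):
                grad[i] += 2 * err * xi / n
        if anchor is not None:
            for i in range(len(w)):
                grad[i] += 2 * lam * (w[i] - anchor[i])
        w = [wi - lr * gi for wi, gi in zip(w, grad)]
    return w

task_a = make_task([1.0, -2.0], seed=1)   # "old" task
task_b = make_task([-1.0, 3.0], seed=2)   # "new" task with conflicting weights
w_a = train([0.0, 0.0], task_a)

results = {}
for lam in (0.0, 1.0, 10.0):
    w = train(w_a, task_b, anchor=w_a, lam=lam)
    results[lam] = (mse(w, task_a), mse(w, task_b))
    print(f"lam={lam:>4}: loss on A={results[lam][0]:.3f}  loss on B={results[lam][1]:.3f}")
```

With lam = 0 the model fits task B and forgets task A (catastrophic forgetting); raising lam reduces forgetting at the cost of a worse fit on B, which is exactly the tradeoff every continual learning method has to balance.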

Glad to see that these experiments confirm my hunch when I read the paper a few weeks ago.

As far as I understand, this is another case of test-time training, since they use example pairs from both the training and evaluation sets. I'm not sure whether the hierarchical architecture is necessary, or whether we could get similar results with other models.
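A toy sketch of what test-time training means here: a model trained on the training tasks is further fine-tuned on the demonstration pairs of the evaluation task before it answers that task's query. The two-parameter "task family" (each task is a line y = a * x + b) and every name below are hypothetical; HRM itself is a 27M-parameter network trained in a different setup.

```python
def fit(params, pairs, lr=0.05, steps=2000):
    """Gradient descent on mean squared error for the model y = a * x + b."""
    a, b = params
    n = len(pairs)
    for _ in range(steps):
        ga = sum(2 * (a * x + b - y) * x for x, y in pairs) / n
        gb = sum(2 * (a * x + b - y) for x, y in pairs) / n
        a, b = a - lr * ga, b - lr * gb
    return a, b

def predict(params, x):
    a, b = params
    return a * x + b

# Hypothetical task family: every task is y = a * x + b with its own (a, b).
train_tasks = [
    [(x, 2 * x + 1) for x in range(-2, 3)],
    [(x, -x + 3) for x in range(-2, 3)],
]
# Evaluation task y = 3x - 2: three demonstration pairs plus one query input.
eval_demos = [(x, 3 * x - 2) for x in range(3)]
query_x = 5

# 1) Ordinary training on the pooled training tasks.
base = fit((0.0, 0.0), [pair for task in train_tasks for pair in task])

# 2) Test-time training: fine-tune on the evaluation task's own demo pairs.
adapted = fit(base, eval_demos)

print("without TTT:", round(predict(base, query_x), 3))     # about 4.5
print("with TTT:   ", round(predict(adapted, query_x), 3))  # about 13.0
```

The base model only knows the "average" task, while the adapted one recovers the evaluation task's rule from its demonstrations; the gain comes from the test-time adaptation, not from the model's architecture.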

I believe the bottleneck for solving ARC-AGI is not the neural network architecture but how the model is used at test time: to search, to learn, or both, in order to adapt to novel tasks.

We were able to reproduce the strong findings of the HRM paper on ARC-AGI-1. Further, we ran a series of ablation experiments to get to the bottom of what's behind it. Key findings: 1. The HRM model architecture itself (the centerpiece of the paper) is not an important factor.

RT @c_valenzuelab: Really nice demo of what @runwayml Aleph can do for complex changes in environments while adding accurate dynamic elemen…

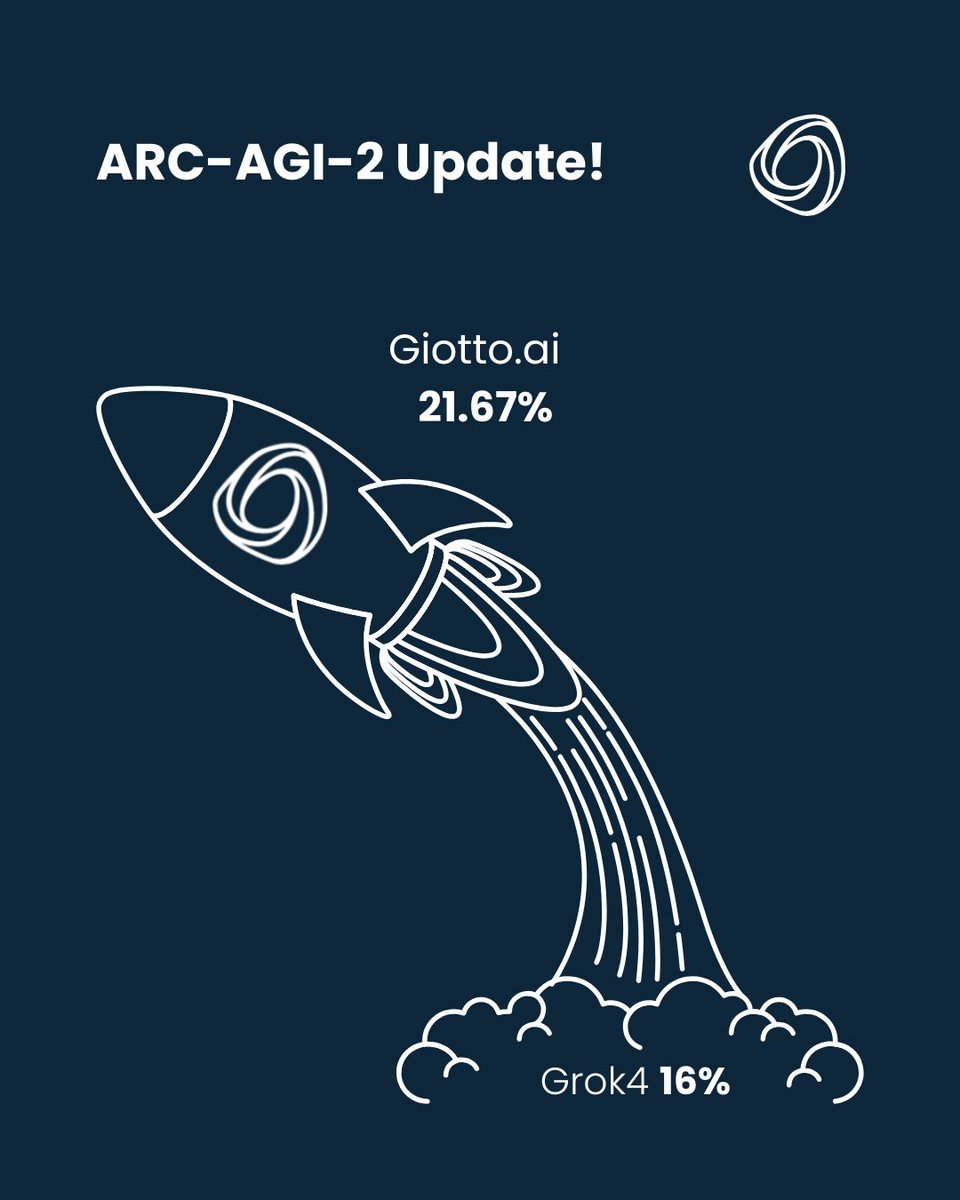

is the first team to score above 20% on the ARC25 challenge. Congratulations! We're still far from the 85% goal, but there's time left since the competition ends in November.

Turns out our youngest researcher was right! We crossed the 20% mark and are now at a high score of 21.67%, leading the 2025 @arcprize competition! For context, Grok-4 is currently at 16%, Claude Opus at 8.6%, and GPT‑o3 at 6.5%… The sky is now officially the limit.

I knew a similar problem existed in image generation, but I mistakenly believed current LLMs could handle it better.

@rockdrigoma DALL-E and the like are trained with image captions, not „commands“. But it doesn't get the negation anyway 😄

As far as I understand, this is another case of test-time training, since they use example pairs from both the training and evaluation sets. I'm not sure whether the hierarchical architecture is necessary, or whether we could get similar results with other models.

Impressive work by @makingAGI and team. No pre-training or CoT with material performance on ARC-AGI. > With only 27 million parameters, HRM achieves exceptional performance on complex reasoning tasks using only 1000 training samples.

RT @corbtt: Big news: we've figured out how to make a *universal* reward function that lets you apply RL to any agent with: - no labeled da…

A nice paper that, in my opinion, goes in the right direction for solving ARC. It generates Python code to tackle ARC tasks and combines search and learning in a virtuous cycle. I have summarized the results in the following plot.

Introducing SOAR 🚀, a self-improving framework for prog synth that alternates between search and learning (accepted to #ICML!). It brings LLMs from just a few percent on ARC-AGI-1 up to 52%. We’re releasing the finetuned LLMs, a dataset of 5M generated programs and the code. 🧵
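A toy sketch of the "search and learning in a virtuous cycle" idea, assuming a hypothetical three-primitive grid DSL and a crude "model" that is just one weight per primitive. In SOAR both steps involve an LLM (the quoted tweet mentions finetuned LLMs and 5M generated programs); nothing below comes from its code. Search tries programs in order of model score under a fixed budget, and learning reinforces the primitives used in the programs that solved a task.

```python
from itertools import product
from math import prod

# Hypothetical DSL over grids stored as tuples of tuples.
PRIMS = {
    "flip_h": lambda g: tuple(tuple(reversed(row)) for row in g),
    "flip_v": lambda g: tuple(reversed(g)),
    "transpose": lambda g: tuple(zip(*g)),
}

def run(program, grid):
    """Apply the primitives of a program from left to right."""
    for name in program:
        grid = PRIMS[name](grid)
    return grid

def search(task, weights, budget, max_len=2):
    """Try programs in order of model score; return the first that fits every demo pair."""
    programs = [p for n in range(1, max_len + 1) for p in product(PRIMS, repeat=n)]
    programs.sort(key=lambda p: (-prod(weights[name] for name in p), len(p), p))
    for program in programs[:budget]:
        if all(run(program, x) == y for x, y in task):
            return program
    return None

def search_and_learn(tasks, budget, rounds=2, learn=True):
    """Alternate search over unsolved tasks with a learning step on the solutions found."""
    weights = {name: 1 for name in PRIMS}
    solved = {}
    for _ in range(rounds):
        for i, task in enumerate(tasks):
            if i in solved:
                continue
            program = search(task, weights, budget)
            if program is not None:
                solved[i] = program
                if learn:  # reinforce the primitives that led to a verified solution
                    for name in program:
                        weights[name] += 1
    return solved

G = ((1, 2, 3), (4, 5, 6), (7, 8, 9))
tasks = [
    [(G, ((1, 4, 7), (2, 5, 8), (3, 6, 9)))],  # transpose
    [(G, ((7, 4, 1), (8, 5, 2), (9, 6, 3)))],  # rotate 90 degrees clockwise
]
print(search_and_learn(tasks, budget=5))              # {0: ('transpose',), 1: ('flip_v', 'transpose')}
print(search_and_learn(tasks, budget=5, learn=False)) # {0: ('transpose',)}
```

With the same search budget, the rotation task is only solved when the weights learned from the first solution push related programs up the search order, a miniature version of learning making search cheaper.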

RT @OriolVinyalsML: Hello Gemini 2.5 Flash-Lite! So fast, it codes *each screen* on the fly (Neural OS concept 👇). The frontier isn't alw…

RT @vitrupo: Anthropic co-founder Ben Mann says we'll know AI is transformative when it passes the "Economic Turing Test." Give an AI agen…