Amelia Hardy

@amelia_f_hardy

Followers: 190 | Following: 178 | Media: 0 | Statuses: 22

Studying AI safety, reinforcement learning, and NLP @stanford. she/her

Joined August 2020

Attending @NeurIPSConf? Stop by our poster "Do Language Models Use Their Depth Efficiently?" with @chrmanning and @ChrisGPotts today at poster #4011 in Exhibit Hall C, D, E from 4:30pm.

We're excited to share this work and discuss how it can be adapted for your applications!

Good morning Suzhou! @amelia_f_hardy and I will be at @emnlpmeeting to present our work *TODAY, Hall C, 12:30PM; paper number 426*. Come learn: ✅ why it's important to optimize likelihood simultaneously with attack success ✅ online preference learning tricks for LM falsification

Awesome work from @houjun_liu, @ShikharMurty, @robert_csordas, and @chrmanning! Their new architecture allows the LLM to learn which tokens require more computation without requiring supervision or sacrificing efficiency!

Introducing 𝘁𝗵𝗼𝘂𝗴𝗵𝘁𝗯𝘂𝗯𝗯𝗹𝗲𝘀: a *fully unsupervised* LM for input-adaptive parallel latent reasoning ✅ Learn yourself a reasoning model with normal pretraining ✅ Better perplexity compared to fixed thinking tokens No fancy loss, no chain of thought labels 🚀

Ready to make your presentations more effective? Award-winning lecturer @Sydney_Katz presents a variety of tips and tricks ranging from telling jokes to avoiding TMI. Video: https://t.co/44Bi514hMj

@StanfordOnline

@SISLaboratory @amelia_f_hardy @DuncanEddy @aiprof_mykel In fact, our method allows us to discover a Pareto tradeoff (🤯) between attack success and prompt likelihood; tuning a single parameter in our method travels along the Pareto-optimal front.

We're excited to share our work on multi-objective, RL-based red-teaming! In this case, we optimize for likelihood and toxicity induction, but the algorithm presented can be used with any set of objectives. We'd love to discuss how it applies to your use cases!

New Paper Day! For EMNLP Findings: in LM red-teaming, we show you have to optimize for **both** perplexity and toxicity to get high-probability, hard-to-filter, and natural attacks!

Don't miss it!

Hello friends! Presenting this poster at @aclmeeting in Vienna🇦🇹 on Monday, 6PM. Come learn about dropout, training dynamics, or just come to hang out. See you there 🫡

I wrote a note on linear transformations and symbols that traces a common conversation/interview I've had with students. Outer products, matrix rank, eigenvectors, linear RNNs -- the topics are really neat, and lead to great discussions of intuitions. https://t.co/xrqHxdQNOr

New Paper Day! For ACL Findings 2025: You should **drop dropout** when you are training your LMs AND MLMs!

@amelia_f_hardy discusses BetterBench, her latest paper on AI benchmarks in high-stakes environments—examining their quality, identifying limitations, and defining best practices. Project website: https://t.co/2GFc15z1yZ

https://t.co/Z5SKNp1GtM

We're very excited to hear from you! Please let us know what you'd most like to see at the workshop!

Excited for our proposed ICLR 2025 workshop on human-AI co-evolution! We're looking for diverse voices from academia & industry in fields like robotics, healthcare, education, legal systems, and social media. Interested in presenting or attending? https://t.co/9XbDGKQHrG

Join Our AI Benchmarking Study! We are studying how AI benchmarks are used across different fields. We are seeking researchers, industry professionals, and policymakers who have used AI benchmarks to participate in a 45-60 minute interview. Details: https://t.co/mU4uxn5lTY

Low-key feeling so humbled that our analysis from the Responsible AI chapter that I led in the @StanfordHAI 2024 AI Index was covered by the amazing @kevinroose in the @nytimes today 🤩 Must read if you want to know why current evaluations are not ideal! https://t.co/3y5mPu7o0a

nytimes.com

Which A.I. system writes the best computer code or generates the most realistic image? Right now, there’s no easy way to answer those questions.

0

2

8

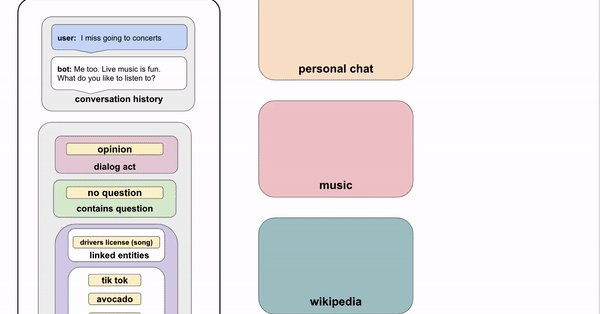

What do people want from chatbots? We're looking for people from diverse backgrounds to talk with a chatbot & answer a brief survey (~10 minutes). Sign up here - https://t.co/QUcg1IzC45. We will email selected participants and upon completion send a $3 Amazon gift card. (1/2)

docs.google.com

Large Language Models (like GPT-3, OPT, Bloom etc.) are able to generate more coherent and consistent text than ever before and dialogue is a promising application for them. However, there is little...

Sustaining long, unconstrained conversations is hard. In our latest post, @AshwinParanjape and @amelia_f_hardy dive into Chirpy Cardinal, an open-source social bot that used neural generation to take 2nd place in the competition! https://t.co/lU3fm3n2w5

ai.stanford.edu

Last year, Stanford won 2nd place in the Alexa Prize Socialbot Grand Challenge for social chatbots. In this post, we look into building a chatbot that combines the flexibility and naturalness of...

The Chirpy Cardinal team at @stanfordnlp (@AshwinParanjape, @amelia_f_hardy, @abigail_e_see, …) have worked on better socialbot conversations via controlled neural generation, encouraging human turns & more empathy. We’ve open-sourced our version 1 system https://t.co/TpHWNhs3UC

So I gave a talk yesterday on Anti-Blackness in AI. I added a section on Industry, check it out! https://t.co/TTGdIsLzQG

We support our @StanfordAILab alum @timnitGebru, her critical research on fairness & ethics in AI, and her tireless work in organizing for a more ethical & inclusive AI community. It’s urgent that voices like hers be heard and that we prioritize work on inclusion & ethics in AI.

I'm shocked how hard it is to generate text about Muslims from GPT-3 that has nothing to do with violence... or being killed...
