Alex Chen-Nexa AI

@alexchen4ai

Followers

439

Following

326

Media

14

Statuses

246

Founder and CEO at Nexa AI, Stanford PhD. Creator of NexaML, OmniNeural, Octopus V2, OmniVLM, OmniAudio models.

Palo Alto, CA, USA

Joined February 2024

Appreciate the partnership and support, @Microsoft. Launching Hyperlink on the @MicrosoftStore moves on-device, private AI agents on Windows one step closer to everyday use. If you’re on Windows, try it out, and we’d love to hear what you think.

Hyperlink for @Windows is now live on the @MicrosoftStore. Your computer already has all your context — it’s time to make it actually smart. Hyperlink is a private, on-device AI agent that can understand what’s in your computer files (docs, notes, PDFs, screenshots), answer in

0

0

1

Mobile AI is clearly shifting on-device. What used to be demos and experiments is now something developers can actually ship — across iOS and Android, with native support for the @Apple ANE and @Qualcomm Hexagon, delivering 2× faster performance and 9× better energy efficiency.

producthunt.com

NexaSDK for Mobile lets developers use the latest multimodal AI models fully on-device on iOS & Android apps with Apple Neural Engine and Snapdragon NPU acceleration. In just 3 lines of code, build...

The next generation of mobile apps will run multimodal AI locally by default. Today, we’re making it practical for developers to ship. We just launched NexaSDK for Mobile on Product Hunt. Developers can run the latest multimodal AI models fully on-device in iOS & @Android apps

0

0

4

iOS and macOS developers can now run and integrate the latest SOTA local multimodal AI models across the @Apple ANE, CPU, and GPU — with no cloud API cost, 2x faster speed, full privacy, and NPU-level energy efficiency in 3 lines of code. Give it a try and let us know how we can

Introducing NexaSDK for iOS and macOS: run and build with the latest multimodal AI models fully on-device, on @Apple Neural Engine, GPU, and CPU. This is the first and only SDK that enables developers to run the latest SOTA models on NPU across iPhones and Mac laptops, achieving

0

0

2

Self-driving is taking the work out of steering. But inside the cabin, everything is still manual: juggling messages, maps, and apps while trying to stay focused on the road. We think in-car AI should feel like “autopilot for the cabin.” The car should understand what’s happening

8

32

129

Thank you @AIatAMD. Glad to contribute to the discussion. Looking forward to advancing the stack for developers, engineers, and the larger ecosystem together.

More seamless compatibility between @PyTorch and ROCm means researchers can focus on modeling, and kernel engineers can keep pushing performance. @alexchen4ai, CEO of @nexa_ai, shares why this matters for both sides of the stack.

0

0

2

Co-developed with @GeelyGroup, AutoNeural sets a new benchmark for production-grade, real-time in-car multimodal AI — shaping the future of smart cockpits for enhanced safety and everyday in-cabin experience. Check out the model on @HuggingFace: https://t.co/PPLK3DAoqw and book

huggingface.co

Today we're releasing AutoNeural-VL-1.5B — the world's first real-time multimodal model built for in-car AI. It runs fully local on the @Qualcomm SA8295P NPU with a software–hardware co-designed architecture, setting a new bar for speed and quality. AutoNeural redefines what AI

0

0

5

Introducing NexaQuant — our hardware-aware quantization method that makes frontier Gen AI models practical on real devices. It delivers 3× size reduction and 2.5× faster inference while achieving 100% accuracy recovery, optimized for NPU, GPU, and CPU across compute, mobile, IoT,

2

4

9

This week at Nexa AI: Apple ANE support, featured at Microsoft Ignite, and new model support ⚡️ 1) NexaSDK became the first engine to run DeepSeek OCR in GGUF, from @deepseek_ai. Co-launched with partners at @nvidia and @AMD, making high-quality OCR one-line simple on local

1

3

14

Hyperlink was presented on stage at Microsoft Ignite 2025, as an official @Microsoft partner. Ignite is where enterprises look for what’s next. And this year, the big message was clear. The future of AI and agents on Windows will be local-first: fast, private, and deeply

15

36

238

Nexa AI is a featured partner at @Microsoft Ignite 2025 — highlighted in the official Microsoft blog and live on the floor this week. We’re also demoing at the @Qualcomm booth, showing what’s now possible with on-device AI agents powered by our NexaSDK and Hyperlink Agent.

3

5

26

Nexa AI was highlighted on stage at #WebSummit this week. Dr. Vinesh Sukumar from @Qualcomm walked the audience through how developers can now build next-gen on-device AI apps using Nexa’s unified inference engine: Day-0 model support, NPU acceleration, and one SDK that works

2

4

10

Today we’re launching EmbedNeural — the first multimodal search model running directly on the @Apple Neural Engine (ANE). This is the first multimodal search model natively accelerated by the ANE across the Apple ecosystem: Macs with Apple Silicon, iPhones, iPads, and apps that

2

3

17

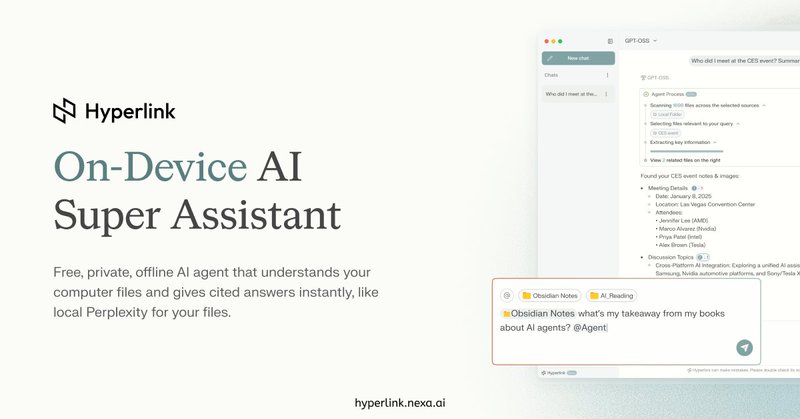

24 hours. 1.6M views. 6.4K likes. Industry leaders recognizing it. Hyperlink's launch blew up. Thank you to everyone who tried, shared, and sent feedback. More coming soon: https://t.co/a48bh9qts4 🚀

hyperlink.nexa.ai

Free, private, offline AI agent that understands your computer files and gives cited answers instantly, like local Perplexity for your files.

Ok… Hyperlink’s launch blew up way beyond what we expected. In the last 24 hours, we crossed 1.6M views, 6.4K likes, received recognition from industry leaders, and saw a ton of love from the community. We built Hyperlink to make your computer truly intelligent — an on-device

1

0

8

We’ve imagined this moment for years — when your computer stops being a tool and starts becoming an intelligent companion. Hyperlink is now GA, the world’s first on-device AI super assistant for your files. 100% private, deeply personal, and built for the next era of computing —

Meet Hyperlink, the first AI super assistant that lives inside your computer. Your computer stores all your files and personal context. Hyperlink deeply understands them and gives cited answers instantly — like Perplexity for your local files. It turns your computer into a true

0

0

1

Your local AI agent, upgraded. @Nexa_ai's Hyperlink is accelerated by RTX AI PCs, allowing for scans of gigabytes of local files in minutes: fast, private, and all on your device. Get started today #RTXAIGarage 👉 https://t.co/vj94k0Zg2q

2

16

77

🔥 Real-world NPU vs CPU on Samsung S25 Ultra. We ran a 10-minute LLM stress test comparing CPU vs Qualcomm Hexagon NPU. 🌡️ Temperature & Throttling: CPU: Hit 42°C in 3 min → throttled from 37 t/s to 19 t/s. NPU: Stayed cool (36-38°C) → steady 90 t/s. ⚡ Result: NPU 2-4× faster

We ran a 10-minute LLM test on Samsung S25 Ultra CPU vs @Qualcomm Hexagon NPU. In 3 minutes, the CPU hit 42 °C and throttled: throughput fell from ~37 t/s → ~19 t/s. The NPU stayed cooler (36–38 °C) and held a steady ~90 t/s — 2–4× faster than CPU under load. Same 10-min,
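For anyone who wants to check the ratios behind these numbers, here is a minimal sketch of the arithmetic. It uses only the approximate throughput figures quoted in the post (about 37 t/s and 19 t/s for the CPU, about 90 t/s for the NPU). The variable and function names are illustrative and not part of any Nexa tooling, and the results are only as precise as those rounded figures.

```python
# Approximate throughput figures quoted in the post, in tokens per second.
CPU_START_TPS = 37.0      # CPU before thermal throttling
CPU_THROTTLED_TPS = 19.0  # CPU after throttling (reported at about 3 minutes)
NPU_TPS = 90.0            # NPU, reported as steady for the whole run


def speedup(npu_tps: float, cpu_tps: float) -> float:
    """Return how many times faster the NPU is than the CPU."""
    return npu_tps / cpu_tps


def relative_drop(start_tps: float, end_tps: float) -> float:
    """Return the fraction of throughput lost between two measurements."""
    return (start_tps - end_tps) / start_tps


speedup_before = speedup(NPU_TPS, CPU_START_TPS)      # NPU vs un-throttled CPU
speedup_after = speedup(NPU_TPS, CPU_THROTTLED_TPS)   # NPU vs throttled CPU
cpu_drop = relative_drop(CPU_START_TPS, CPU_THROTTLED_TPS)

print(f"NPU vs CPU before throttling: {speedup_before:.1f}x")
print(f"NPU vs CPU after throttling:  {speedup_after:.1f}x")
print(f"CPU throughput lost to throttling: {cpu_drop:.0%}")
```

With these inputs the ratio is roughly 2.4× before the CPU throttles and roughly 4.7× after, which lines up with the post's rounded "2–4×" summary. The CPU loses about half of its throughput once it throttles.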

0

0

7

This Week at Nexa 🚀: VLA model on IoT & Robotics NPU, Nexa Android SDK, and NexaStudio app that beats Apple Intelligence. 1) World’s first vision-language-action model running locally on NPU (Robotics + IoT) with NexaML. @huggingface’s SmolVLA now runs fully on the @Qualcomm

1

5

15

Introducing NexaSDK for Android with Java and Kotlin support — local AI that’s 2× faster than CPU, 9× more efficient on Qualcomm Hexagon NPU. ✅ 3 lines of code to run on your Android NPU ✅ Continuous Day-0 support for new models ✅ One SDK for NPU/GPU/CPU ✅ Verified by

Introducing NexaSDK for Android (Beta) — run the latest AI models locally, 9× more energy-efficient and 2× faster, on @Android devices, powered by the @Qualcomm Hexagon NPU. This is the first SDK to support NPU, GPU and CPU, unlocking the full power of every Android device — for

0

0

1

We’ve released an early preview of Qwen3-Max-Thinking—an intermediate checkpoint still in training. Even at this stage, when augmented with tool use and scaled test-time compute, it achieves 100% on challenging reasoning benchmarks like AIME 2025 and HMMT. You can try the

61

125

1K