Akshay

@akshayvkt

Followers

229

Following

10K

Media

186

Statuses

696

you have the right to work, never to its fruits

San Francisco, CA

Joined August 2011

RT @sharifshameem: Blindly following your curiosity is deeply underrated, creating demos is how we excavate model capabilities, and you hav…

if you don't want your product to look like 1000 others out there, you need to care about every detail

Founders who use Claude Code and think "distribution is the only thing that matters" have it backwards. Claude will fix bugs, unblock you, help you iterate. But the product matters even more now. You need to sweat the details. Care about the craft. Slop doesn't win.

man leading the effort to pass the Great Filter (spacex, tesla) ends up being the catalyst (grok companions) for their failure.

@uncle_deluge The most ironic outcome is the most likely.

all fun and games until it's 2028 when you walk up to the location and there's sonnet 4.2.069 in a humanoid waiting for you.

Some of those failures were very weird indeed. At one point, Claude hallucinated that it was a real, physical person, and claimed that it was coming in to work in the shop. We’re still not sure why this happened.

using claude code, and claude tried to access the openai docs using WebFetch but keeps getting a 403. can't tell if @OpenAIDevs, an AGI company, blocks AIs from accessing the site, or if this is specific to claude.

When SpaceX had an anomalous explosion during static fire prep in 2016, they went for weeks without an answer for why it happened - gathering every tiny fragment, running through 1000s of scenarios - to finally learn that it exploded because they moved too fast, quite literally.

ANOMALY! Just before Ship 36 was set to Static Fire, it blew up at SpaceX Masseys! Live on X and YT:

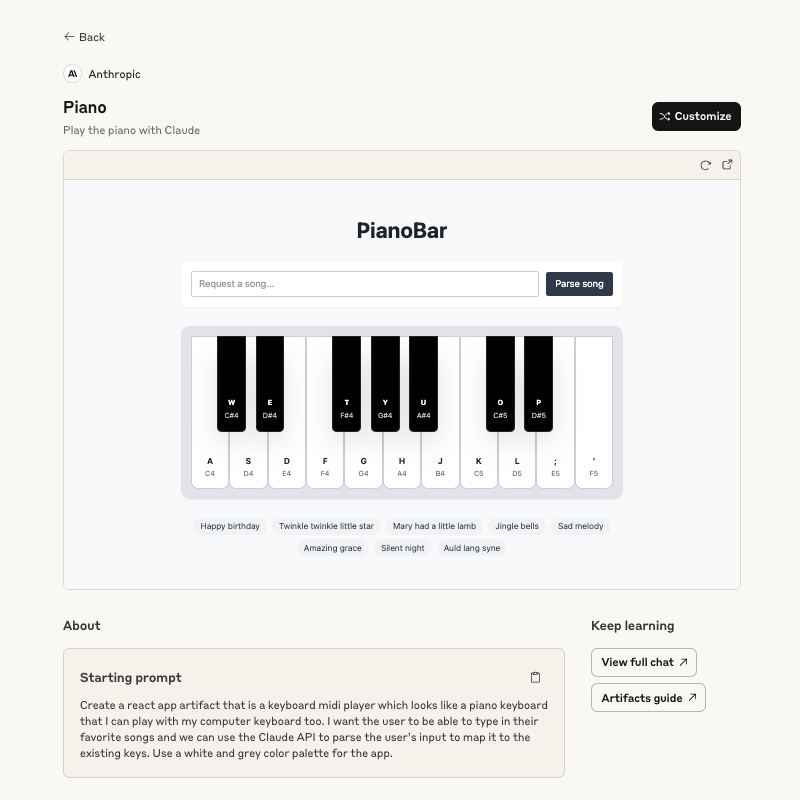

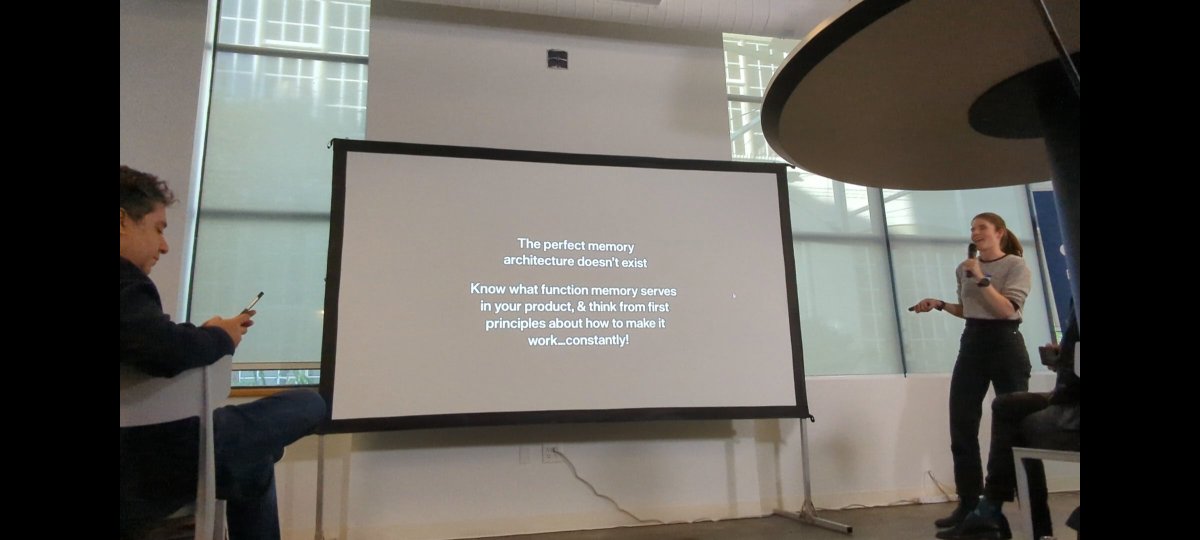

#3 the barrier to entry in the field of memory is low! this is personally what i find most exciting. so long as you're willing to experiment and dogfood constantly, you can make real impact in the field in a relatively short amount of time. forget about doing things the "right"

#2 bitter lesson for memory. gpt4 had an 8k token context window ~2 years ago. now we have a smarter, cheaper gemini-2.5-flash with 125x the context. paraphrasing what @sjwhitmore said, the complex architecture that was right 1 year ago may not be needed today, instead

#1 stop trying to make perfect memory architecture happen, it's not going to happen. the memory architecture that's right depends on the use case you're building for. the primitives that make @newcomputer work are very different from what works for @cursor_ai

really enjoyed the talks from @WHinthorn @sjwhitmore @yash_anysphere and @NicoleHedley3 - thanks for organizing it @GregKamradt and @LangChainAI! here are 3 things i found very useful from what they had to share 🧵

I'm throwing the AI meetup I wish existed. Production grade AI Memory.

* @sjwhitmore - Social memory @newcomputer
* @NicoleHedley3 - Memory for clients @ Headstart
* Will Fu-Hinthorn - Memory types @LangChainAI
* @ericzakariasson - $9B memetic agents @cursor_ai

SF come join.

seeing how good claudecode is at searching for code relevant to the task at hand makes me realize that we need long context windows for models to be useful, but not for the reason many think we do. we don't need a model w/ 10m context window so that the model can ingest the

AI coding agents hit a wall when codebases get massive. Even with 2M token context windows, a 10M line codebase needs 100M tokens. The real bottleneck isn't just ingesting code - it's getting models to actually pay attention to all that context effectively.
