Yongjin Yang

@_yongjinny

Followers: 39
Following: 76
Media: 1
Statuses: 14

Incoming Ph.D. @UoftCompSci | Formerly M.S. @kaist_ai, Research Intern @NAVER_AI_LAB, B.S. @SeoulNatlUni

Seoul, South Korea

Joined November 2022

Congrats again to our brilliant students @davidguzman1120 @_yongjinny for receiving the "Oral Paper Award" at the #ACL2025NLP Workshop on Research on Agent Language Models (REALM)! Check out how reasoning LLMs optimize self-interest over collective success 📊 in our paper.

With o1-like models charging by token usage 💸, we want to avoid verbal redundancy. Our self-training method can elicit concise reasoning, reducing token usage by 30% 🔥 while maintaining accuracy! w/ MS students @Tergel_Munkhbat and @shkimsally at https://t.co/CeSggg6BR9 🚀

Thrilled to announce our #EMNLP2023 Findings paper on explainable hate speech detection using LLM-generated data! 🎉📄 A huge thanks to @itsnamgyu for sharing our work 😊

Can LLMs explain 🫣 hate speech better than humans? In our #EMNLP2023 Findings paper, we show that LLM-generated data outperforms human-labeled data in training (small) models to 🕵️‍♂️ detect and explain hate speech. WARNING: figures reveal offensive text https://t.co/J5vc9dmI3l

I asked my audience: “If you could only listen to one podcast for the next 5 years, what would it be?” Here are 10 of the most popular replies:

Everybody writes (texts, work emails, sales copy, etc.), but few people write well. Here are 7 free, must-have writing tools:

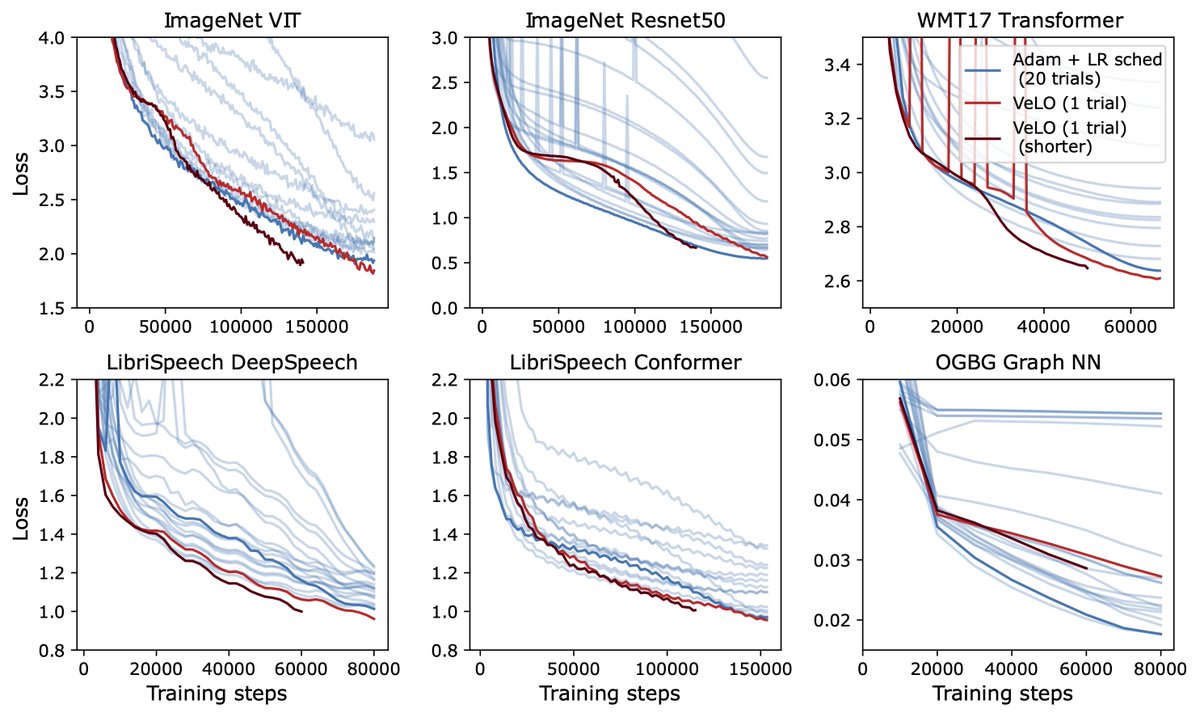

If there is one thing the deep learning revolution has taught us, it's that neural nets will outperform hand-designed heuristics, given enough compute and data. But we still use hand-designed heuristics to train our models. Let's replace our optimizers with trained neural nets!

All the educational resources to learn Machine Learning in one spot:

Colleges keep publishing their machine learning courses online. 100% free: ↓

- MIT 6.S191 Introduction to Deep Learning
- DS-GA 1008 Deep Learning
- UC Berkeley Full Stack Deep Learning
- UC Berkeley CS 182 Deep Learning
- Cornell Tech CS 5787 Applied Machine Learning

I've been trading for 10 years. Everything you need to know is in these 25 threads:

Tired of tuning your neural network optimizer? Wish there was an optimizer that just worked? We’re excited to release VeLO 🚲, the first hyperparameter-free learned optimizer that outperforms hand-designed optimizers on real-world problems: https://t.co/zarCWuqIWb 🧵

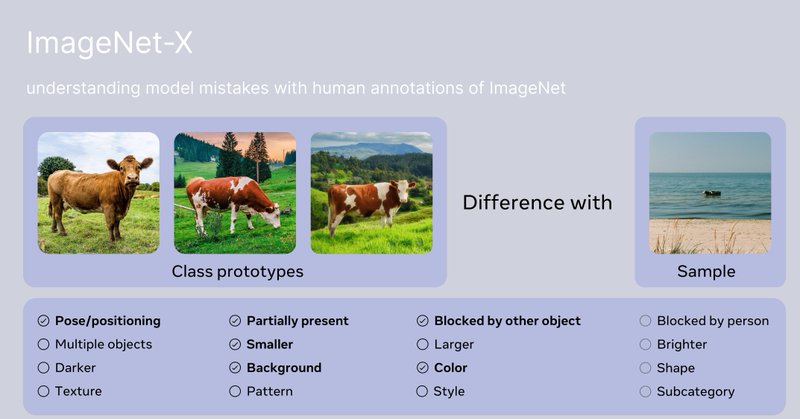

We’ve released ImageNetX: a set of human annotations for the popular ImageNet benchmark to gauge model robustness strengths and weaknesses, and one of the first large-scale efforts to pinpoint mistake types in AI computer vision systems. Explore the dataset ⬇️

facebookresearch.github.io

understanding model mistakes with human annotations of ImageNet