James Harrison

@jmes_harrison

Followers

1K

Following

358

Media

27

Statuses

81

Cyberneticist @GoogleDeepMind

San Francisco

Joined September 2014

Deepseek R1 inference in pure JAX! Currently on TPU, with GPU and distilled models in-progress. Features MLA-style attention, expert/tensor parallelism & int8 quantization. Contributions welcome!

10

47

300

Are you still using hand-designed optimizers? Tomorrow morning, I’ll explain how we can meta-train learned optimizers that generalize to large unseen tasks! Don't miss my talk at OPT-2024, Sun 15 Dec 11:15-11:30 a.m. PST, West Ballroom A! https://t.co/DRB1pamngZ

Learned optimizers can’t generalize to large unseen tasks…. Until now! Excited to present μLO: Compute-Efficient Meta-Generalization of Learned Optimizers! Don’t miss my talk about it next Sunday at the OPT2024 Neurips Workshop :) 🧵 https://t.co/ysEWwRe9Hf 1/N

0

6

34

Our approach can be applied to a very very wide range of problems---we include multi-objective BayesOpt as an example, but we're excited about massively scaling up this approach on a ton of problems. Paper link here: https://t.co/6s0wlXkOfZ

0

0

1

Our results show our approach of VBLLs + MLP features + our training approach yields SOTA or near-SOTA performance on a bunch of problems from classic 2D Ackley, all the way to much more challenging problems like Lunar Lander controller tuning

1

0

1

To accelerate training, we combine model optimization with last-layer conditioning. This is a useful bridge between efficient Bayesian conditioning and NN optimization and massively accelerates training at (almost) no performance cost (see black vs green curve in the fig).

1

0

1

Our approach builds on variational Bayesian last layers (VBLLs, https://t.co/sWdKGI0G2o). These can be applied with arbitrary NN architectures, and are highly scalable at the same cost as standard NN training. Your favorite model can painlessly do active learning!

arxiv.org

We introduce a deterministic variational formulation for training Bayesian last layer neural networks. This yields a sampling-free, single-pass model and loss that effectively improves uncertainty...

1

0

4

New: a neural net-based approach to Bayesian optimization that performs well in both classic, small-scale problems, and can efficiently scale far beyond GP surrogate models. If you're at NeurIPS, come by our poster at the Bayesian decision-making workshop today! More info👇

3

1

25

Going to @itssieee ITSC'24? Check our tutorial on Data-driven Methods for Network-level Coordination of AMoD Systems Organized with @DanieleGammelli, Luigi Tresca, Carolin Schmidt, @jmes_harrison, Filipe Rodrigues, Maximilian Schiffer, @drmapavone

https://t.co/G80OdAjxqK

2

4

11

For more info, you can check out: Paper (spotlight @ ICLR): https://t.co/xlz0dD0Poj Torch implementation + docs + tutorial colabs: https://t.co/AgrVDlLqo0 JAX implementation: coming soon!

github.com

Simple (and cheap!) neural network uncertainty estimation - VectorInstitute/vbll

2

1

12

We tested VBLLs in regression, classification, and active decision-making. I'm particularly excited about the bandit performance---VBLLs enable nearly free active learning! Plus, you can use VBLLs jointly with other Bayesian methods, or add them to your model post-training.

1

0

4

Our idea: - Variational posterior only on the last layer - Exploit structure to obtain sampling-free lower bounds on the marginal likelihood Result: Bayesian models that cost the same as vanilla nets! Plus, adding it to your existing model only requires changing the last layer.

1

0

4

Massively scaling up neural nets means rethinking the tradeoff between performance and cost in uncertainty quantification. Ideas that have worked in the past---like Bayes-by-backprop or ensembles---are impossible with multi-billion parameter models.

1

0

6

Want a really simple (and cheap!) way to improve neural net calibration and get practical epistemic uncertainty estimates? At ICLR this year: Variational Bayesian Last Layers Try it out: 1. pip install vbll 2. a couple one line changes to your current training pipeline 🧵

3

24

124

A question we have been thinking about for a long time: what is the natural architecture for a learned optimizer? We now have an important part of the answer---we can automatically construct expressive optimizers based on optimizee network symmetries. Check out Allan's thread!

🧵: How do you design a network that can optimize (edit, transform, ...) the weights of another neural network? Our latest answer to that question: *Universal* Neural Functionals (UNFs) that can process the weights of *any* deep architecture.

0

2

36

📝Quiz time: when you have an unrolled computation graph (see figure below), how would you compute the unrolling parameters' gradients? If your answer only contains Backprop, now it’s time to add a new method to your gradient estimation toolbox!

1

13

128

Want to learn about learned optimization? I gave a tutorial at @CoLLAs_Conf which is now public!

0

9

50

Looking forward to getting started at #ICML! Happy to chat about RL, learning-based control, and Graph ML. Make sure to drop by our poster! (Wed 26 Jul 2 p.m. PDT)

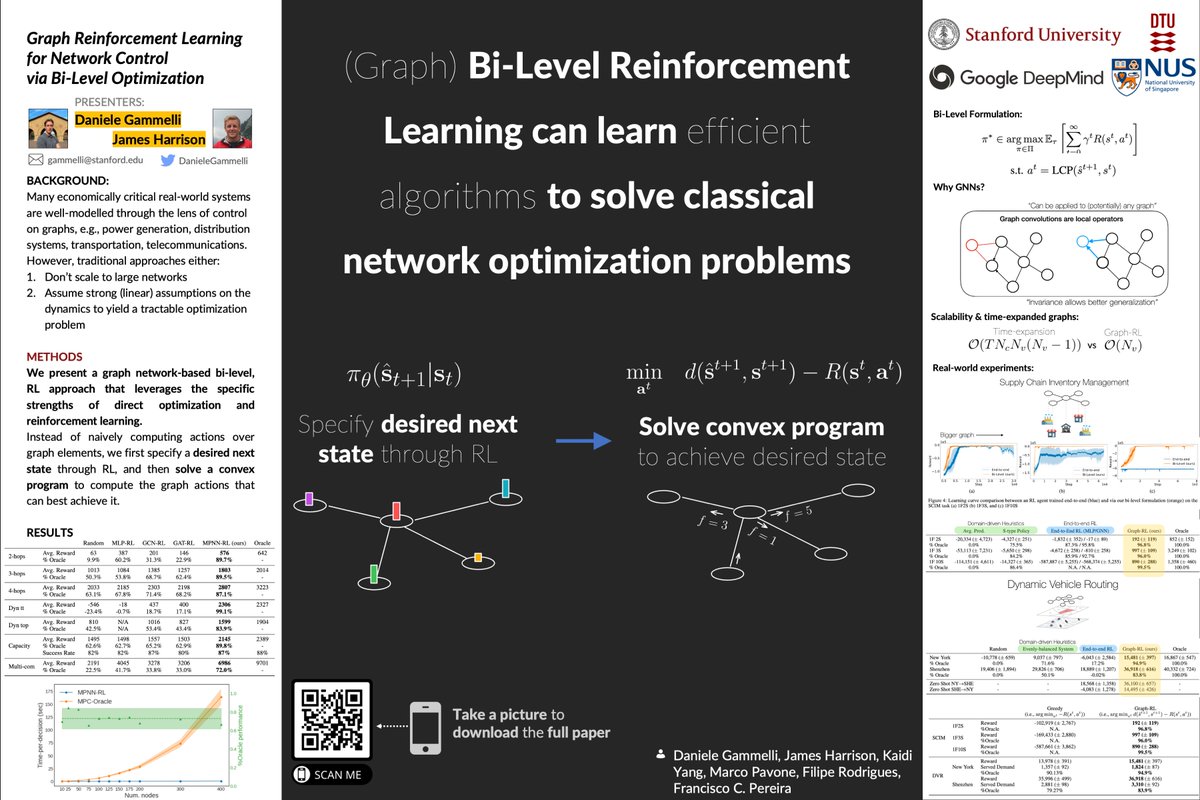

Excited to share that our paper on Graph-Reinforcement Learning was accepted at #ICML2023! We present a broadly applicable approach to solve graph-structured MDPs through the combination of RL and classical optimization. Website: https://t.co/qVAjiTgrRt 🧵👇(1/n)+quoted tweet

0

12

60

Graph deep learning and bi-level RL seem to work exceptionally well for a whole bunch of critically important real-world problems like supply chain control. Plus, it easily combines with standard linear programming planners in OR. Check out @DanieleGammelli's thread for info!

Excited to share that our paper on Graph-Reinforcement Learning was accepted at #ICML2023! We present a broadly applicable approach to solve graph-structured MDPs through the combination of RL and classical optimization. Website: https://t.co/qVAjiTgrRt 🧵👇(1/n)+quoted tweet

0

1

4

Happy to share that our latest work on adaptive behavior prediction models with @jmes_harrison @GoogleAI and @drmapavone @NVIDIAAI has been accepted to #ICRA2023! 📜: https://t.co/Zlfi276sP5 We've also recently released the code and trained models at https://t.co/r7Czz2z1S4!!

github.com

Contribute to NVlabs/adaptive-prediction development by creating an account on GitHub.

0

1

10

Really nice + concise VeLO explainer!

Why tune optimizers hyperparameters (ex. Adam) by hand, if one can train a neural network to behave like an optimizer and dynamically find the best update for your neural network’s weights? In this video, we explain the VeLO learned optimizer!👇 📺 https://t.co/7Wo3i51f94

0

2

13