xjdr

@_xjdr

Followers

24K

Following

28K

Media

780

Statuses

6K

You sweet sweet summer child . .

@_xjdr @tejashaveridev but why would anyone in the world ever run any model at full precision.

6

2

195

+1.

@Kimi_Moonshot cons@64 has issues in situations where your metric is inherently continuous. avg@64 seems more sensible + general as a standard for evaluation.

0

0

10
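A minimal sketch of the distinction in the post above, assuming cons@k means "score the majority-vote answer over k samples" and avg@k means "average the per-sample scores". The function names, sample answers, and scoring functions are all hypothetical illustrations, not any lab's evaluation harness.

```python
from collections import Counter
from statistics import mean


def cons_at_k(answers, score):
    """Score only the most common answer among the k samples (majority vote)."""
    winner, _ = Counter(answers).most_common(1)[0]
    return score(winner)


def avg_at_k(answers, score):
    """Average the per-sample scores over all k samples."""
    return mean(score(a) for a in answers)


# Discrete answers: a majority exists, so the vote is meaningful.
discrete = ["42", "42", "41", "42"]
exact = lambda a: 1.0 if a == "42" else 0.0  # hypothetical exact-match metric

# Continuous outputs: no two samples coincide, so every answer has one vote
# and the "consensus" is just whichever tied sample the counter returns.
continuous = [0.91, 0.87, 0.12, 0.89]
closeness = lambda a: 1.0 - abs(a - 0.9)  # hypothetical continuous metric

print(cons_at_k(discrete, exact), avg_at_k(discrete, exact))
print(cons_at_k(continuous, closeness), avg_at_k(continuous, closeness))
```

In the continuous case the vote degenerates to an arbitrary pick among ties and ignores the outlier sample entirely, while the average still reflects every sample.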

i say this with all seriousness. skill issue.

We ran a randomized controlled trial to see how much AI coding tools speed up experienced open-source developers. The results surprised us: Developers thought they were 20% faster with AI tools, but they were actually 19% slower when they had access to AI than when they didn't.

33

26

661

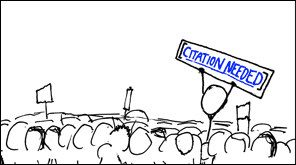

turns out, jurgen actually invented the GPU in order to unleash the power of neural networks in a little known paper in 1989 but has never received the credit he deserves.

Congrats to @NVIDIA, the first public $4T company! Today, compute is 100000x cheaper, and $NVDA 4000x more valuable than in the 1990s when we worked on unleashing the true potential of neural networks. Thanks to Jensen Huang (see image) for generously funding our research 🚀

14

8

396