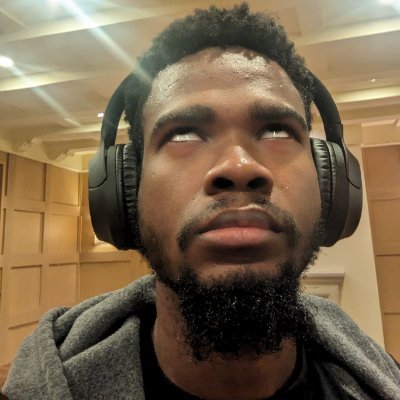

Steven Kolawole

@_stevenkolawole

Followers: 2K · Following: 7K · Media: 64 · Statuses: 2K

My people! ❤️ Low-budget philosopher. ML Efficiency. PhD-ing @SCSatCMU. @ml_collective's poster child. Big brother to 3 amazing sisters.

Pittsburgh, PA

Joined December 2018

I’m recruiting PhD students for 2026! If you are interested in robustness, training dynamics, interpretability for scientific understanding, or the science of LLM analysis you should apply. BU is building a huge LLM analysis/interp group and you’ll be joining at the ground floor.

Life update: I'm starting as faculty at Boston University in 2026! BU has SCHEMES for LM interpretability & analysis, so I couldn't be more pumped to join a burgeoning supergroup w/ @najoungkim @amuuueller. Looking for my first students, so apply and reach out!

17

126

668

🚀 Federated Learning (FL) promises collaboration without data sharing. While Cross-Device FL is a success and deployed widely in industry, we don’t see Cross-Silo FL (collaboration between organizations) taking off despite huge demand and interest. Why could this be the case? 🤔

1

12

23

It's a beautiful thing looking back to when I joined MLC last year. All I had was a scrambled mind and an interest in research. One step at a time; not an expert yet, but now I have direction and can confidently design and execute research projects thanks to @ml_collective.

On today’s MLC-Ng Sunday Specials call, an old-timer returned after a research role at Max Planck (undergrad at UNILAG); another casually mentioned his new role at Google. Joining these calls weekly is humbling, seeing so many talented folks doing big things from small places.

0

2

4

Thanks to this wonderful community, I got selected for the Google DeepMind scholarship for a Master's in Mathematical Science (AI for Science) at the African Institute for Mathematical Sciences, South Africa. Cheers to the next chapter🎉

11

20

142

On today’s MLC-Ng Sunday Specials call, an old-timer returned after a research role at Max Planck (undergrad at UNILAG); another casually mentioned his new role at Google. Joining these calls weekly is humbling, seeing so many talented folks doing big things from small places.

8

17

109

A general phenomenon that can sneak up on you when you’re at, say, the 99th percentile of a skill: At first, you’re exceptional enough that you receive praise from virtually everyone, and you may never go head-to-head with someone who can beat you. That is, until you join some

Last year I had a conversation with someone who majored in physics at UChicago. He initially started in math & thought he was prepared having taken AP Calculus BC, but he got smacked in the face by the level of abstraction and proof-writing ability that was assumed. He couldn't

3

13

242

Sleep hits harder when you’re exhausted. Food tastes better when you’ve gone hungry. Water tastes sweeter when you’ve been grinding. Music hits deeper when you’ve been in silence. Deprivation sharpens pleasure. Don’t run from suffering. That’s what gives life its color.

117

2K

13K

Initially you think you're training hard & smart enough to max out your potential. Then you get to high enough level to realize that, while a few people there do have more inborn talent than you, many of them have no inborn edge over you -- but they're way ahead because they

9

23

433

Crazy how the MIT License started as an open source project and popped off so hard they built a university about it 🤯

66

184

8K

Arxiv:

arxiv.org

Cascade systems route computational requests to smaller models when possible and defer to larger models only when necessary, offering a promising approach to balance cost and quality in LLM...

Excited to announce that "Semantic Agreement Enables Efficient Open-Ended LLM Cascades" got accepted to EMNLP 2025 Industry Track! Thread 🧵

0

0

5

Our paper (my first 😌) “Benchmarking and Improving LLM Robustness for Personalized Generation” was accepted to #EMNLP2025 findings🎉 🧑💻Data and Code: https://t.co/ULz29xqxz0 Paper: https://t.co/q22FPPpOaY 🧵

arxiv.org

Recent years have witnessed a growing interest in personalizing the responses of large language models (LLMs). While existing evaluations primarily focus on whether a response aligns with a user's...

1

6

45

Grateful for this collaboration with @duncansoiffer and @gingsmith--extending model routing/cascading to open-ended generation, using meaning-level consensus to further bridge the theory-practice gap! 📄 Paper: TBA (arxiv is still processing)

1

0

2

This work started from a simple observation from my previous work on ABC (https://t.co/r0NE5INY0Q): deployment reality != academic assumptions. Most cascade research assumes white-box access & homogeneous models, but that's not how LLMs are actually deployed in practice.

arxiv.org

Adaptive inference schemes reduce the cost of machine learning inference by assigning smaller models to easier examples, attempting to avoid invocation of larger models when possible. In this work...

1

0

1

Why this matters for production: black-box APIs, constant model updates, heterogeneous serving, & cost pressure are realities that existing cascade methods struggle to handle. Semantic cascades handle all these constraints simultaneously, with no retraining when models update.

1

0

2

This breaks conventional ensemble wisdom. Results across translation, summarization, and QA (500M-70B parameters):
- Match 70B quality at 40% computational cost
- Up to 60% latency reduction
- Works across any model family
- Deployable today

1

0

3

But here's the counterintuitive twist: *weaker models actually improve ensemble decisions* through disagreement signals. A 500M model disagreeing with 8B models provides valuable uncertainty info. Even when the weak model is wrong, it helps identify when to defer to larger models.

1

0

2

Our solution: When models say "It's sunny today" vs "The weather is bright," they agree semantically despite different words. That meaning-level consensus predicts reliability better than individual confidence scores, with no training required.

1

0

1
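The idea of meaning-level consensus as a deferral signal can be sketched roughly as below. This is only an illustration, not the paper's implementation: the function names (`jaccard`, `agreement`, `should_defer`), the threshold of 0.6, and the example strings are all assumptions. In particular, `jaccard` is a toy word-overlap stand-in and would not recognize paraphrases like "It's sunny today" vs "The weather is bright"; a real system would presumably plug in an embedding- or entailment-based similarity through the `similarity` parameter.

```python
from itertools import combinations
from typing import Callable, List


def jaccard(a: str, b: str) -> float:
    """Toy stand-in for a semantic similarity model: word-set overlap."""
    sa, sb = set(a.lower().split()), set(b.lower().split())
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)


def agreement(outputs: List[str],
              similarity: Callable[[str, str], float] = jaccard) -> float:
    """Mean pairwise similarity across the small models' outputs."""
    pairs = list(combinations(outputs, 2))
    if not pairs:
        raise ValueError("need at least two outputs to measure agreement")
    return sum(similarity(a, b) for a, b in pairs) / len(pairs)


def should_defer(outputs: List[str],
                 threshold: float = 0.6,  # hypothetical cutoff, would be tuned
                 similarity: Callable[[str, str], float] = jaccard) -> bool:
    """Defer to a larger model when the small models disagree too much."""
    return agreement(outputs, similarity) < threshold


# Hypothetical outputs from two small models on the same prompt.
consistent = ["Paris is the capital of France", "The capital of France is Paris"]
split = ["Paris is the capital of France", "I do not know"]

print(should_defer(consistent))  # small models agree, keep their answer
print(should_defer(split))       # small models disagree, escalate
```

Because the score needs only the generated text, a sketch like this works with black-box APIs that expose no token-level confidences.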

Quick primer: LLM cascading means routing queries to small, cheap models when possible, and only using large, expensive models when necessary. Think "escalation system" - start small, escalate when needed. Goal: maintain quality while cutting costs.

1

0

1
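The "start small, escalate when needed" loop from the primer can be sketched as follows. This is a minimal illustration under stated assumptions: the `Model` type, the `cascade` function, the 0.8 threshold, and the stub models `small` and `large` are all hypothetical, and the per-model confidence score is a placeholder for whatever deferral signal a real system uses.

```python
from typing import Callable, List, Tuple

# Hypothetical model type: takes a prompt, returns (answer, confidence in [0, 1]).
Model = Callable[[str], Tuple[str, float]]


def cascade(prompt: str, models: List[Model],
            threshold: float = 0.8) -> Tuple[str, int]:
    """Try models from cheapest to most expensive; stop at the first confident answer.

    Returns the answer and the index of the model that produced it.
    """
    if not models:
        raise ValueError("need at least one model")
    answer = ""
    for i, model in enumerate(models):
        answer, confidence = model(prompt)
        if confidence >= threshold:
            return answer, i
    # No model was confident: fall back to the last (largest) model's answer.
    return answer, len(models) - 1


# Stub models standing in for a small and a large LLM.
def small(prompt: str) -> Tuple[str, float]:
    return ("4", 0.95) if prompt == "2+2?" else ("not sure", 0.3)


def large(prompt: str) -> Tuple[str, float]:
    return ("a careful answer", 0.9)


easy = cascade("2+2?", [small, large])                      # handled by the small model
hard = cascade("Summarize this contract.", [small, large])  # escalated to the large model
print(easy, hard)
```

The cost saving comes from the early return: the large model is only invoked for prompts the small model is not confident about.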

Open-ended generation breaks traditional cascading. Multiple valid answers exist, so "correct vs incorrect" deferral rules don't work. Token-level confidence optimizes for next-token prediction, not semantic quality, and GPT/Claude APIs hide those scores anyway. We needed something different.

1

0

1