Yibo Yang

@YiboYang

Followers

285

Following

150

Media

9

Statuses

28

Research scientist at Chan Zuckerberg Initiative, working on ML/AI + science.

Joined August 2013

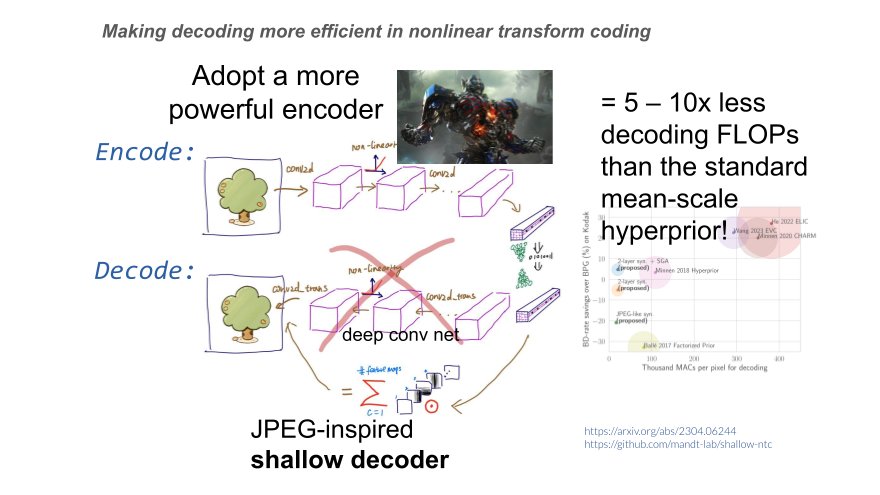

I had the pleasure of giving a talk and sharing some recent work on diffusion + compression (together with @justuswill and @StephanMandt) at the Learn to Compress workshop at #isit2025. Here are my slides: Thanks again for the invitation!

0

3

20

RT @StephanMandt: Thrilled to share that my student Justus Will and former student @YiboYang had their work selected as an ICLR 2025 Oral (…

0

4

0

RT @lazar_atan: 🚀 Introducing Meta Flow Matching (MFM) 🚀 Imagine predicting patient-specific treatment responses for unseen cases or buil…

0

54

0

📢 Reminder to submit your cool results to our @NeurIPS2024 workshop on ML & Compression!!
Deadline: Sept 30 (AoE).
Submission link:
Fill out this form if you'd like to become a reviewer:

docs.google.com

Please fill out this form if you are interested in being a reviewer for the NeurIPS 2024 Workshop on Machine Learning and Compression. To accommodate as many potential submissions as possible, the...

2

8

34

RT @Official_CLIC: We’re happy to announce that CLIC 2025 will be co-located with Picture Coding Symposium in December 2025. More details t…

0

6

0

Excited to co-organize another workshop on machine learning and compression, at #NeurIPS2024!
Topics:
📦 ML + data/model compression
⚡ Resource-efficient representations
🧠 Info-theoretic aspects of learning & intelligence
More details to come at

3

7

34

RT @AliMakhzani: I'm excited to be in New Orleans for #NeurIPS2023! Looking forward to catching up with old friends and meeting new folks.…

0

7

0

RT @LinYorker: For the first time, we (with @f_dangel, @runame_, @k_neklyudov @akristiadi7, Richard E. Turner, @AliMakhzani) propose a spar…

0

8

0

Excited to be at @NeurIPSConf next week and chat about compression, steering LLMs, diffusion and more! Also looking for internship & full-time jobs in 2024. And stop by poster #1905 on Wed Dec 13, 5-7 pm to learn about lossy compression + optimal transport!

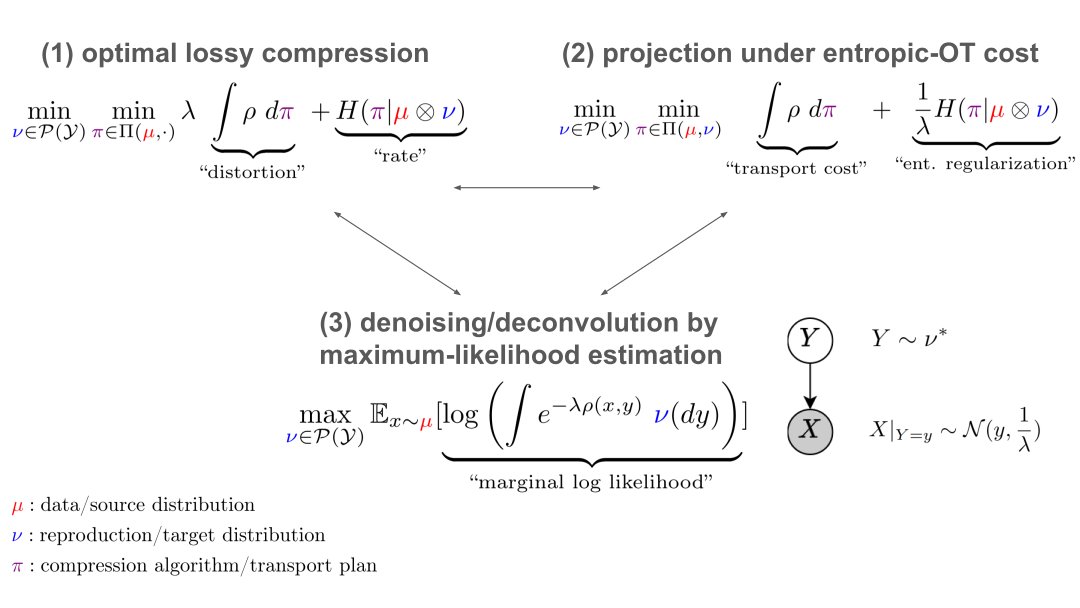

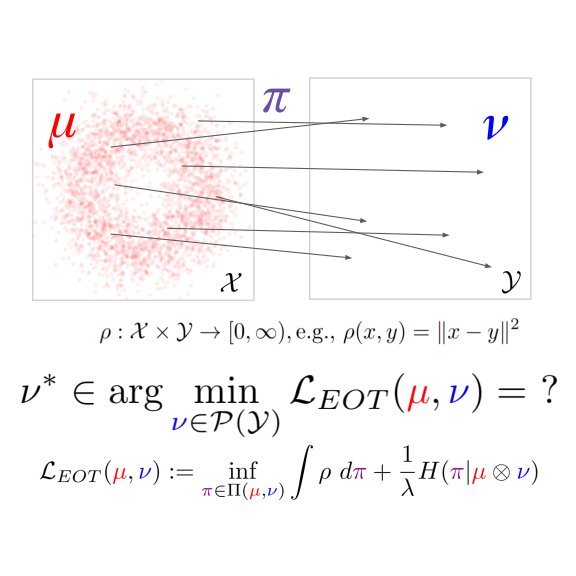

What do optimal lossy compression and projection under an entropic-OT cost have in common? Both are equivalent to a denoising/maximum-likelihood estimation problem! We show this in our NeurIPS23 paper and study the fundamental limit of lossy compression using optimal transport 🧵

0

0

10

Here's the paper:
5-minute talk:
Code:
Poster:
Joint work with the wonderful Stephan Eckstein (ETH Zürich/Tübingen), Marcel Nutz (Columbia), and @s_mandt (UCI) 🎷🎶 (5/5)

github.com

Official project page for Estimating the Rate-Distortion Function by Wasserstein Gradient Descent - yiboyang/wgd

0

1

9

RT @zicokolter: New work with @andyzou_jiaming on analyzing internal representations of LLMs, to find phenomena that correlate quite well w…

0

5

0

RT @neural_compress: Please join our social at Maui Brewing Co. Waikiki at 6pm after the workshop. Everyone, especially compression and inf…

0

4

0