Tao Huang

@TaouHuang

Followers: 319
Following: 220
Media: 5
Statuses: 43

PhD @ SJTU | MPhil @ CUHK | B.Eng. @ ShanghaiTech

Shanghai

Joined August 2022

HoST has been selected as a Best Systems Paper Finalist at #RSS2025!
Presentations today:
Spotlight Talks: 4:30pm-5:30pm
Poster: 6:30pm-8:00pm, Board No. 22
You're welcome to visit our poster and have a Zoom chat with us!

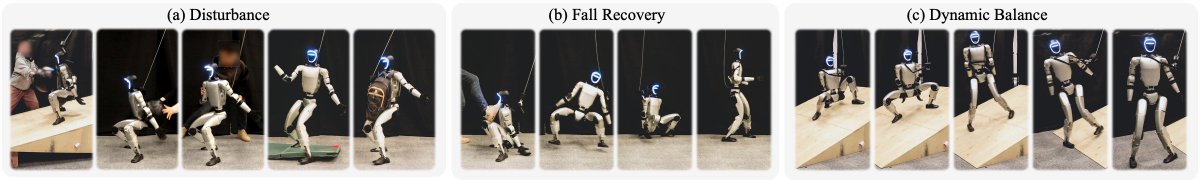

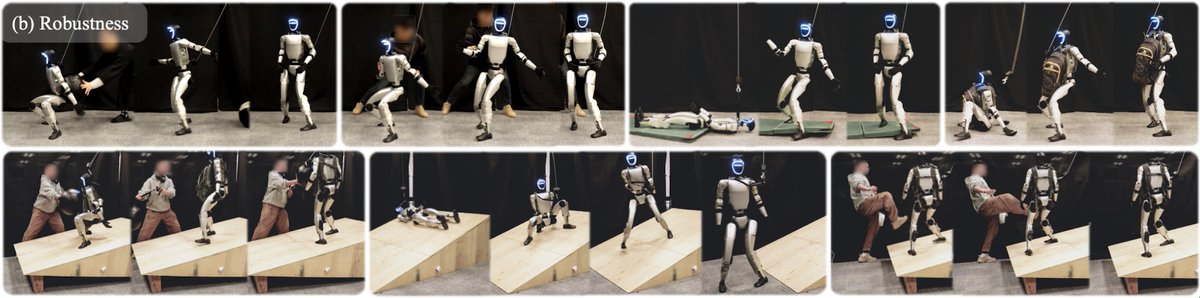

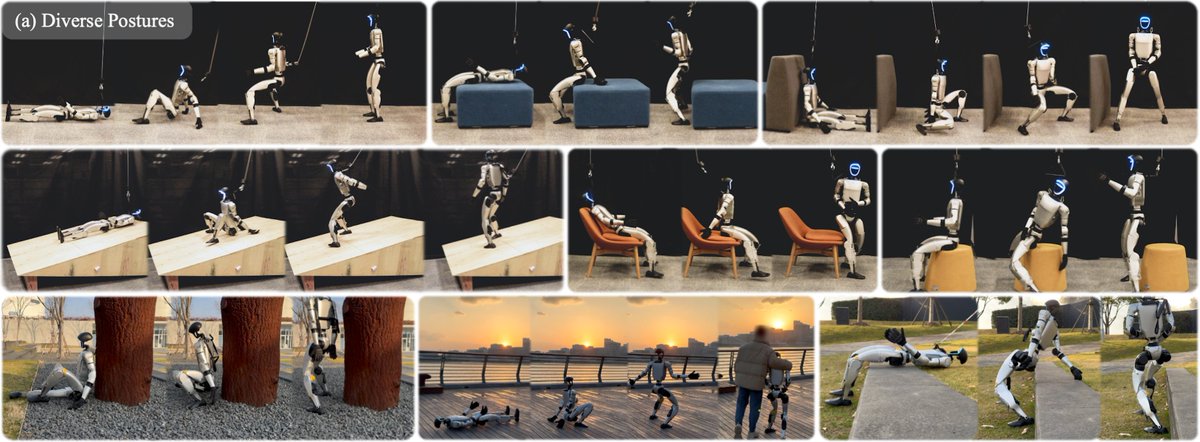

💡 Can a humanoid robot learn to stand up across diverse real-world scenarios from scratch?
🤖 Introducing HoST: Learning Humanoid Standing-up Control across Diverse Postures.
Website:


RT @XiaoChen_Twi: #ICCV2025
🤔 How to scan and reconstruct an unseen collision-rich indoor scene with 10+ rooms?
💡 Our answer is "Launch the…


The standing-up motion is very similar to ours on the Unitree G1 😃 Our code is available: Happy to see humanoid standing-up control getting better!

github.com

[RSS 2025 Best Systems Paper Finalist] 💐Official implementation of "Learning Humanoid Standing-up Control across Diverse Postures" - InternRobotics/HoST


Our work on manipulation-centric representations learned from large-scale robot datasets will be presented at ICLR. Catch Huazhe (@HarryXu12) on site for more details!

Our #ICLR2025 paper MCR will be presented at Hall 3 + Hall 2B #42 on Apr 24th from 7:00 to 9:30 PM PDT. I won't be able to attend the conference since I'm working on a CoRL submission. Please check it out and drop me an email if you're interested!


RT @SizheYang111: 💡 With a single manually collected demonstration, can the learned policy achieve robust performance?
🤖 #RSS2025 Introduc…


RT @ju_yuanchen: 🧩 #CVPR2025 🌷 Introducing Two By Two ✌️: The First Large-Scale Daily Pairwise Assembly Dataset with SE(3)-Equivariant Pose Est…


HoST has been accepted to #RSS2025!
The code is open-sourced:
💡 Training code for Unitree G1 and H1
💡 Tips for extending to other humanoid robots
💡 Hardware deployment tips (G1 & H1-2)
- Diverse terrains | supine | prone postures
- Evaluation and visualization scripts

github.com

[RSS 2025 Best Systems Paper Finalist] 💐Official implementation of "Learning Humanoid Standing-up Control across Diverse Postures" - InternRobotics/HoST

💡 Can a humanoid robot learn to stand up across diverse real-world scenarios from scratch?
🤖 Introducing HoST: Learning Humanoid Standing-up Control across Diverse Postures.
Website:


RT @xu_sirui: Interested in:
✅ Humanoids mastering scalable motor skills for everyday interactions
✅ Whole-body loco-manipulation w/ diverse…


RT @ToruO_O: Sim2Real RL for Vision-Based Dexterous Manipulation on Humanoids. TLDR - we train a humanoid robot wi…


RT @ericliuof97: Thrilled to introduce 🦏 RHINO: Learning Real-Time Humanoid-Human-Object Interaction from Human Demonstrations! Project: ht…


Really impressive work led by @HuayiWang04! Check out more details on the website:

💡 Can a humanoid robot learn to traverse sparse footholds like stepping stones and balance beams with agility?
🤖 Introducing BeamDojo: Learning Agile Humanoid Locomotion on Sparse Footholds.
Paper:
Website:


(5/n)
Great thanks to my wonderful collaborators: Junli Ren, Huayi Wang, Zirui Wang, @BenQingwei, Muning Wen, @XiaoChen_Twi, Jianan Li, and @pangjiangmiao! Check out more details on our website:


Congrats to Guangqi on his first first-author paper! This work is well motivated and inspiring for learning from large-scale robot datasets. Please check it out 🤩

Thrilled to share that MCR (Manipulation-centric representation) has been accepted by @iclr_conf #ICLR2025 🎉 Huge thanks to all my amazing coauthors!
Please check it out here 👉
Website:
Paper:
Code:


RT @LuccaChiang: Thrilled to share that MCR (Manipulation-centric representation) has been accepted by @iclr_conf #ICLR2025 🎉 Huge thanks t…

github.com

[ICLR 2025🎉] This is the official implementation of paper "Robots Pre-Train Robots: Manipulation-Centric Robotic Representation from Large-Scale Robot Datasets". - luccachiang/rob...
