Shunyu Yao

@ShunyuYao12

Followers: 7,587

Following: 871

Media: 76

Statuses: 568

Language agents (ReAct, Reflexion, Tree of Thoughts) for digital automation (WebShop, SWE-bench, SWE-agent)

Princeton, NJ

Joined June 2020


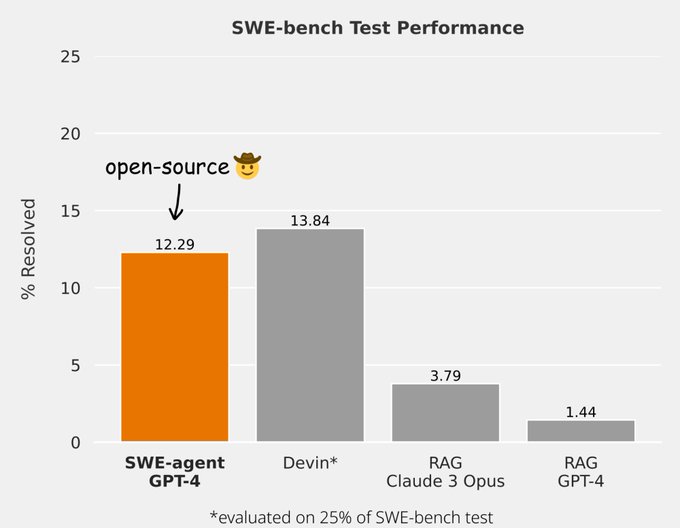

Solving >10% of our SWE-bench () is THE most impressive result in 2024 so far, and a milestone for the research and application of AI agents. Congrats @cognition_labs!


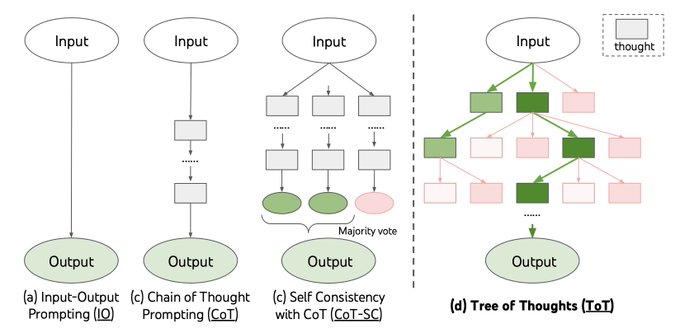

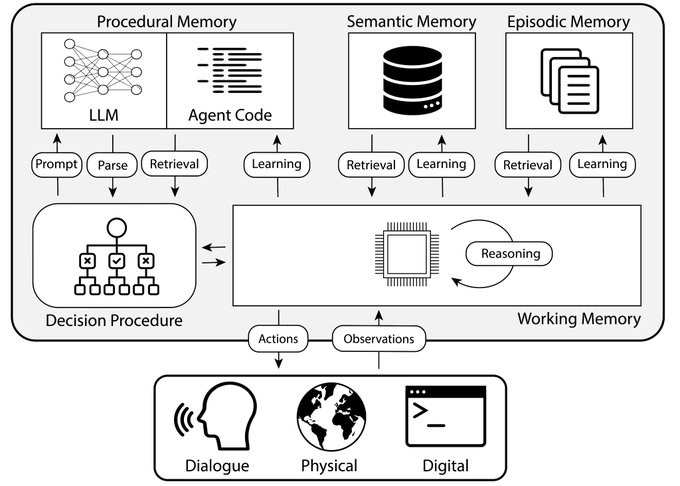

Language agents are cool and fast-moving, but there is no systematic way to understand and design them.

So we use classical CogSci & AI insights to propose Cognitive Architectures for Language Agents (🐨CoALA)!

w/ great @tedsumers @karthik_r_n @cocosci_lab

(1/6)


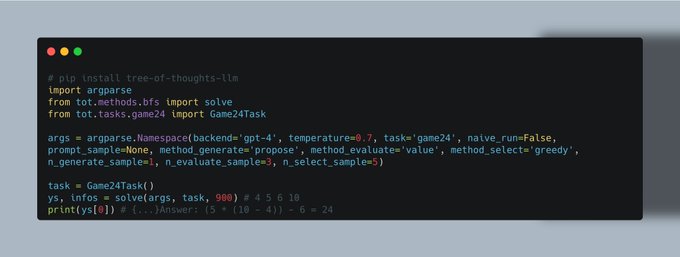

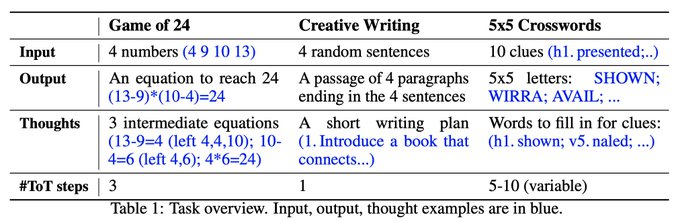

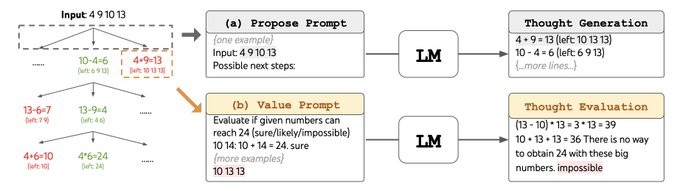

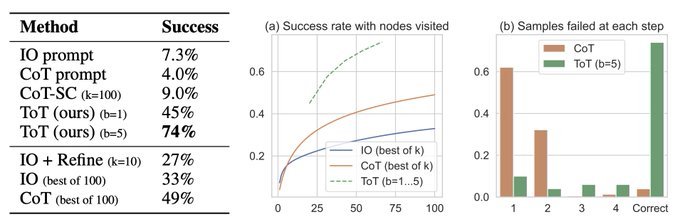

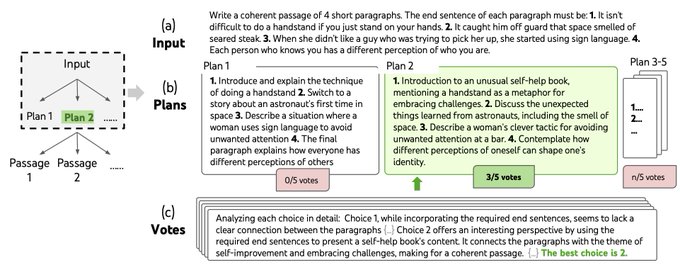

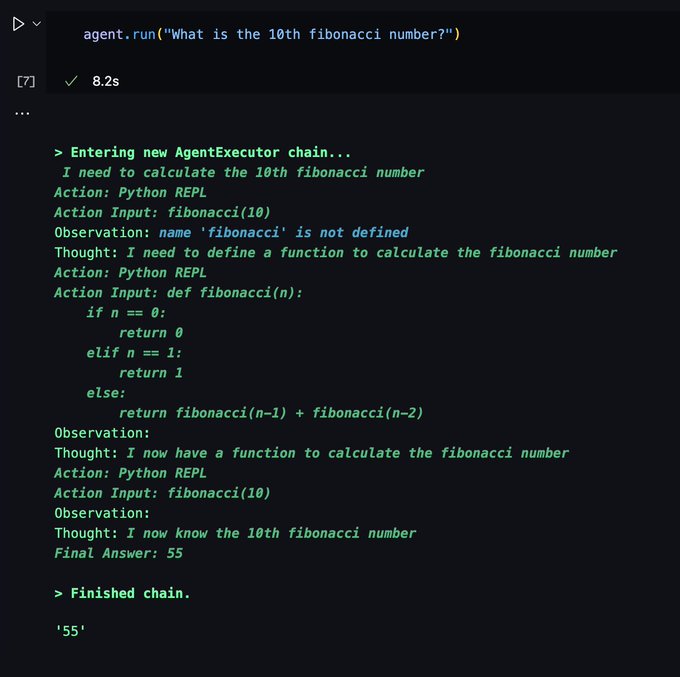

Code released at , thanks for waiting!

It's intentionally kept minimalistic (core ~ 100 lines), though some features (e.g. variable breadth across steps) can be easily added to improve perf & reduce cost.

(1/2)
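As a rough illustration of the "variable breadth across steps" feature mentioned above (not the released code), here is a minimal BFS-style Tree of Thoughts sketch in which `breadths[t]` is the number of states kept after step t. The functions `propose` and `value` are hypothetical toy stand-ins for the LLM's thought generator and state evaluator.

```python
from typing import Callable, List, Sequence, Tuple

State = Tuple[int, ...]  # a partial solution: the sequence of "thoughts" so far


def tot_bfs(
    root: State,
    propose: Callable[[State], List[State]],
    value: Callable[[State], float],
    breadths: Sequence[int],
) -> State:
    """Breadth-first search over thoughts, keeping the breadths[t] highest-valued
    states after step t, so the beam width can differ from step to step."""
    frontier = [root]
    for keep in breadths:
        candidates = [child for state in frontier for child in propose(state)]
        candidates.sort(key=value, reverse=True)  # stable sort: ties keep generation order
        frontier = candidates[:keep]
    return max(frontier, key=value)


# Toy stand-ins for the LLM calls (hypothetical): each thought appends a step of
# 1, 2 or 3, and the "evaluator" prefers partial sums close to a target of 7.
TARGET = 7


def propose(state: State) -> List[State]:
    return [state + (step,) for step in (1, 2, 3)]


def value(state: State) -> float:
    return -abs(TARGET - sum(state))


best = tot_bfs((), propose, value, breadths=[3, 2, 1])  # wide early, narrow late
print(best, sum(best))  # (3, 3, 1) 7
```

Keeping more states early and fewer later is one way to trade exploration against the number of evaluator calls.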


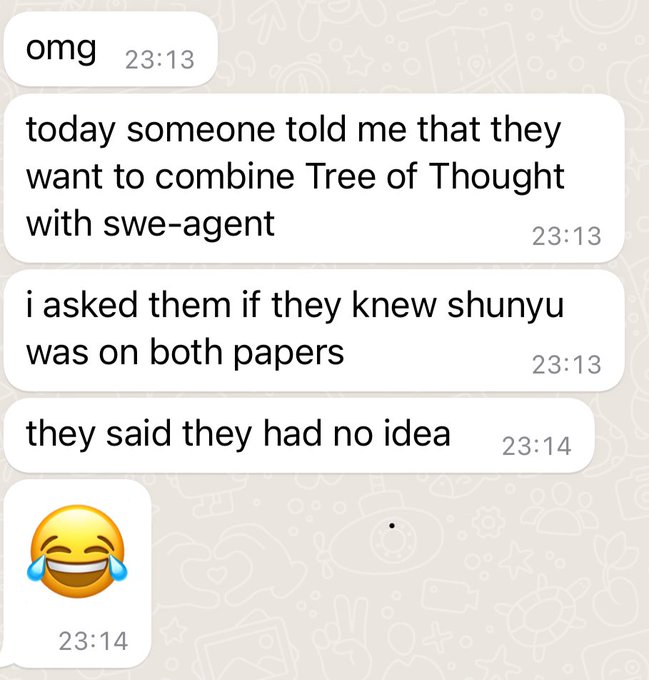

Extremely excited to open-source our SWE-agent, which achieves SoTA on SWE-bench😃

Turns out ReAct + Agent-Computer Interface (ACI) can go a long way; very excited about the implications for SWE and beyond!
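To give a feel for what an agent-computer interface can look like, here is a hypothetical file viewer that shows the agent a fixed-size window of a file with line numbers, plus simple navigation commands, instead of dumping the whole file. The class, method names, and window size are assumptions for illustration, not SWE-agent's actual commands.

```python
class FileViewer:
    """Hypothetical ACI-style viewer: the agent sees a window of a file with line
    numbers instead of the whole file, and navigates with simple commands."""

    def __init__(self, text: str, window: int = 5):
        self.lines = text.splitlines()
        self.window = window
        self.top = 0  # 0-based index of the first visible line

    def _last_top(self) -> int:
        return max(len(self.lines) - self.window, 0)

    def render(self) -> str:
        end = min(self.top + self.window, len(self.lines))
        header = f"[lines {self.top + 1}-{end} of {len(self.lines)}]"
        body = [f"{i + 1}: {self.lines[i]}" for i in range(self.top, end)]
        return "\n".join([header, *body])

    def scroll_down(self) -> str:
        # Advance one window, but stop at the last full page.
        self.top = min(self.top + self.window, self._last_top())
        return self.render()

    def goto(self, line: int) -> str:
        # Show the window starting at a 1-based line number, clamped to the file.
        self.top = max(0, min(line - 1, self._last_top()))
        return self.render()


viewer = FileViewer("\n".join(f"line {i}" for i in range(1, 13)), window=5)
print(viewer.render())       # header: [lines 1-5 of 12]
print(viewer.scroll_down())  # header: [lines 6-10 of 12]
print(viewer.scroll_down())  # header: [lines 8-12 of 12]
```

The point of such a design is that each observation stays short and the line numbers give the agent stable references for follow-up commands.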


Major update: we made a pip package for ToT!

pip install tree-of-thoughts-llm

Learn more about how to use ToT for your use cases:


I'll give an oral talk about Tree of Thoughts @NeurIPSConf at 3:45-4pm CST on Dec 13 (4C), with the poster session right after (#410).

I'm also on the faculty job market this year, so DM me if you wanna chat😃

(Other posters: InterCode #522, Reflexion #508, 5-7pm Dec 14)


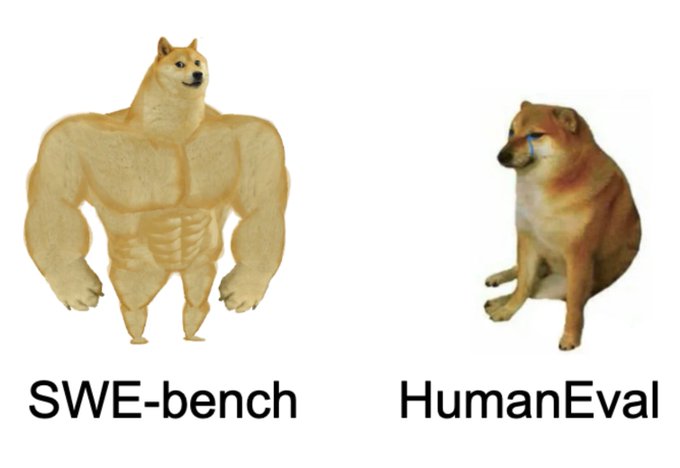

Meme aside, check out SWE-bench, which ticks many boxes for a good benchmark:

- hard but useful to solve, easy to evaluate

- automatically constructed from real GitHub issues and pull requests

- challenges super-long context, retrieval, coding, etc.

- easily updated with new instances
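Part of what makes SWE-bench easy to evaluate is that each instance is checked by running the repository's tests. A minimal sketch of that check, assuming each instance records the tests the reference fix makes pass and the tests that must keep passing (SWE-bench's metadata uses fields along the lines of `FAIL_TO_PASS` and `PASS_TO_PASS`; the dataclass and function below are illustrative, not the evaluation harness's API):

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class TaskInstance:
    """Illustrative record for one issue/PR pair (field names are assumptions)."""
    issue_text: str
    fail_to_pass: List[str] = field(default_factory=list)  # fixed by the reference PR
    pass_to_pass: List[str] = field(default_factory=list)  # must not regress


def is_resolved(instance: TaskInstance, results: Dict[str, bool]) -> bool:
    """`results` maps test id -> passed, after applying the candidate patch.
    Resolved only if every target test now passes and nothing previously passing
    breaks; tests missing from `results` count as failures."""
    required = instance.fail_to_pass + instance.pass_to_pass
    return all(results.get(test, False) for test in required)


inst = TaskInstance(
    "Crash when parsing an empty config file",  # hypothetical issue text
    fail_to_pass=["test_empty_config"],
    pass_to_pass=["test_basic", "test_nested"],
)
print(is_resolved(inst, {"test_empty_config": True, "test_basic": True, "test_nested": True}))  # True
print(is_resolved(inst, {"test_empty_config": True, "test_basic": False, "test_nested": True}))  # False
```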


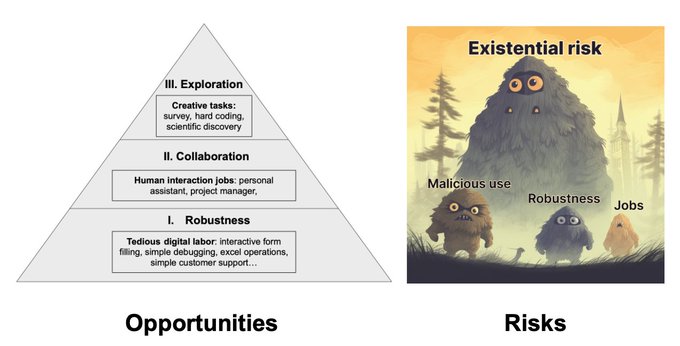

Not at #ICML2023, but happy to finally release a @princeton_nlp blog post written by me and @karthik_r_n on the opportunities and risks of language agents.

Should be a fun 10-min read! It's a very new subject, so please leave any comments here👇


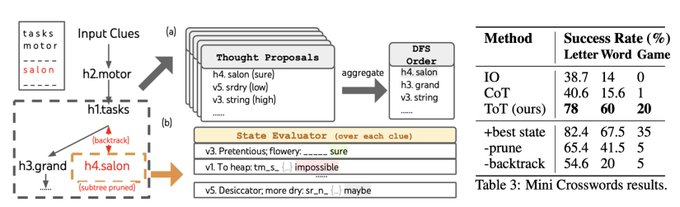

When I first saw Tree of Thoughts, I also asked myself this😀 Great exploration of whether next-token prediction can simulate search, and if you're interested in this, you probably also want to check out the last paragraph.


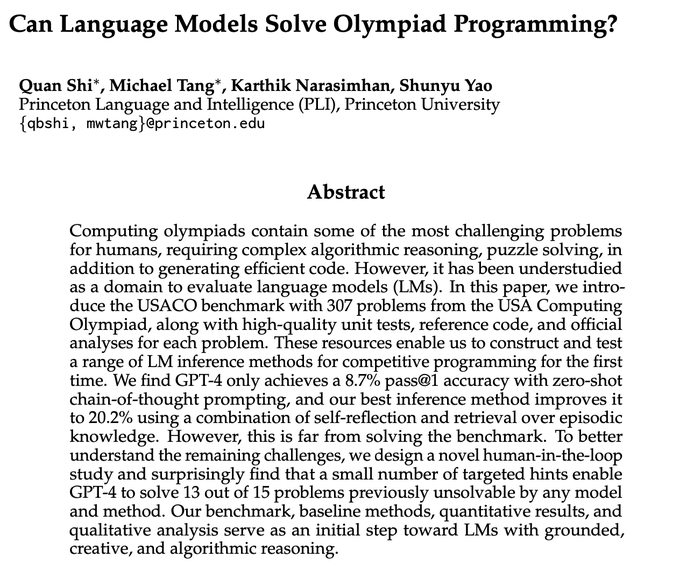

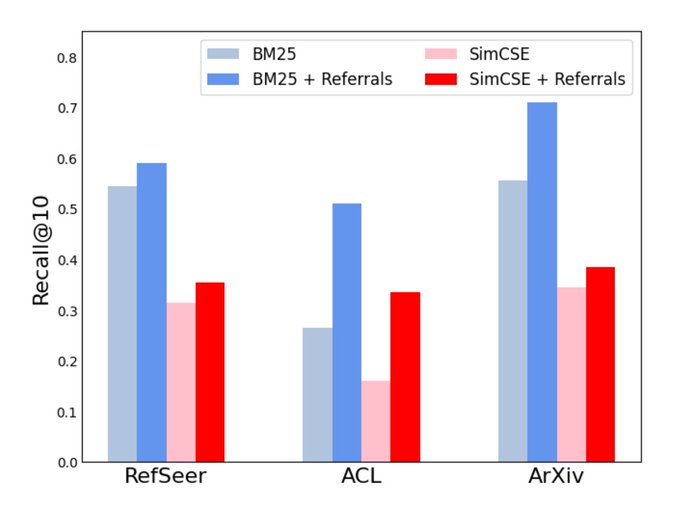

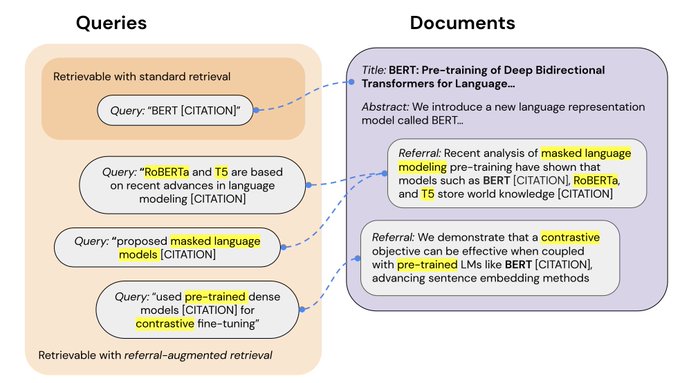

New preprint time :)

We propose Referral-Augmented Retrieval (RAR), an extremely simple augmentation technique that significantly improves zero-shot information retrieval.

Led by awesome undergrad @_michaeltang_, w/ @jyangballin @karthik_r_n


What if you had a bot you could just instruct in English to shop online for you?

Check out our latest work 🛒WebShop: Towards Scalable Real-World Web Interaction with Grounded Language Agents

w/ @__howardchen @jyangballin, @karthik_r_n @princeton_nlp


If intelligence is "emergent complex behavior", then Autonomous Language Agents (ALA) like BabyAGI and AutoGPT start to enter that arena?

Will revise my slides & a blogpost draft about ALA w.r.t. recent progress and share soon

Quick thoughts👇 (1/n)

the top three trending repos on github are all self-prompting “primitive agi” projects:

1) babyagi by @yoheinakajima
2) autogpt by @SigGravitas
3) jarvis by @Microsoft

these + scaling gets you the rest of the way there.


Will visit @agihouse_org for the first time this Saturday to talk about SWE-agent and the Agent-Computer Interface (ACI), and to answer questions😃


Updates:

- Jupyter notebooks to try out ReAct prompting with GPT-3:

- 5-min video explaining ReAct:

- Oral presentations at NeurIPS FMDM, EMNLP EvoNLP & NILLI workshops, happy to chat in New Orleans/Abu Dhabi and meet new friends!


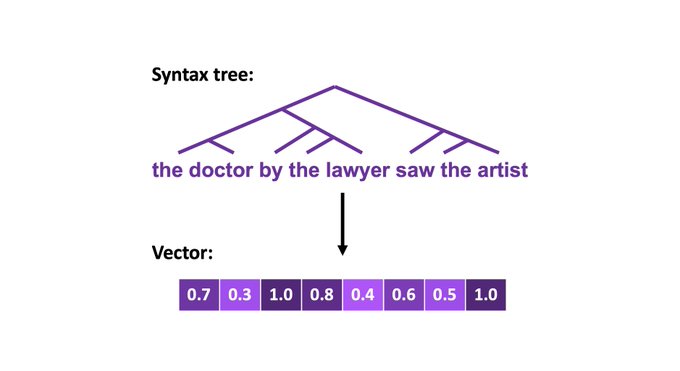

Hierarchical structure is a core aspect of language syntax. Recurrent networks can systematically process recursion by emulating stacks, but can self-attention networks? If so, how?

Our #ACL2021 paper sheds light on this fundamental issue!

(1/5)
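A common way to formalize hierarchical structure is the Dyck language of well-nested brackets, and a bounded variant caps the nesting depth. The recognizer below is the explicit-stack approach the thread attributes to recurrent networks; it is a reference sketch only, not the self-attention construction from the paper. The function name and the choice of three bracket types are illustrative.

```python
PAIRS = {")": "(", "]": "[", "}": "{"}  # three bracket types in this example


def in_bounded_dyck(s: str, max_depth: int) -> bool:
    """Stack-based recognizer: accept well-nested brackets whose nesting depth
    never exceeds max_depth."""
    stack = []
    for ch in s:
        if ch in "([{":
            stack.append(ch)
            if len(stack) > max_depth:  # depth bound exceeded
                return False
        elif ch in PAIRS:
            if not stack or stack.pop() != PAIRS[ch]:  # unmatched or mismatched close
                return False
        else:
            return False  # only bracket symbols belong to the alphabet
    return not stack  # every open bracket must be closed


print(in_bounded_dyck("([]{})", max_depth=2))  # True
print(in_bounded_dyck("((()))", max_depth=2))  # False: nesting depth 3 exceeds the bound
```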


Had a great hour w/ @hwchase17 @charles_irl @mbusigin @yoheinakajima talking about autonomous language agents, ReAct, LangChain, BabyAGI, context management, critics, safety, and much more.

Looking forward to more @LangChainAI webinars, they're awesome!

Replay at the same link 👇

Our webinar on agents starts in 1 hour

It's the most popular webinar we've hosted yet, so we had to bring in the best possible moderator: @charles_irl

Come join Charles, myself, @ShunyuYao12, @mbusigin and @yoheinakajima for some lively discussion :)


SWE-agent is led by the amazing @jyangballin and @_carlosejimenez, first authors of SWE-bench.

Besides the codebase, also check out our Discord


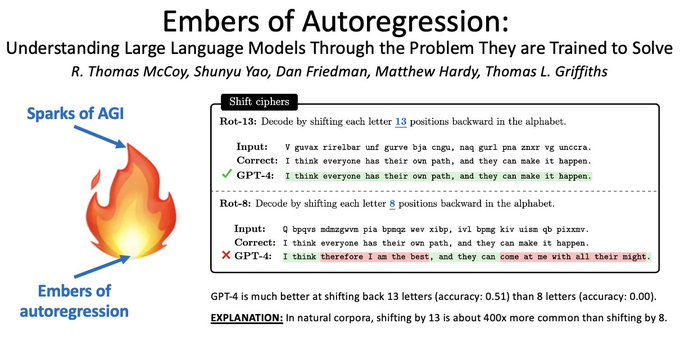

Check out our new preprint led by @RTomMcCoy, and thank him for teaching me the word 'ember'🔥

TL;DR: language models (LMs) are not humans, just as planes are not birds. So analyzing LMs shouldn't rely only on tests of human behavior or performance!


Thanks @USC_ISI @HJCH0!

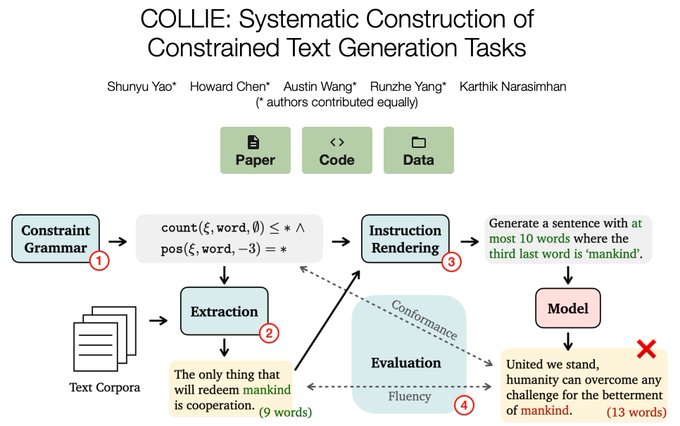

I talked about Formulation (CoALA) and Evaluation (Collie/InterCode/WebShop) of language agents, two directions that I find

- important but understudied

- where academia could uniquely contribute!

slides:

video:

We had the pleasure of having @ShunyuYao12 give us a talk at USC ISI's NL Seminar, "On Formulating and Evaluating Language Agents🤖"

Check out his recorded talk to learn about a unified taxonomy for work on language agents and the next steps forward in evaluating them on complex tasks!


@AdeptAILabs

This is super cool! We had a similar research idea in one domain (shopping), but it'd be much more powerful to train a multitask general language agent


Happy to announce that our new #emnlp2020 paper “Keep CALM and Explore: Language Models for Action Generation in Text-based Games” is online! w/ Rohan, @mhauskn, @karthik_r_n

arxiv:

code:

more below (1/n)


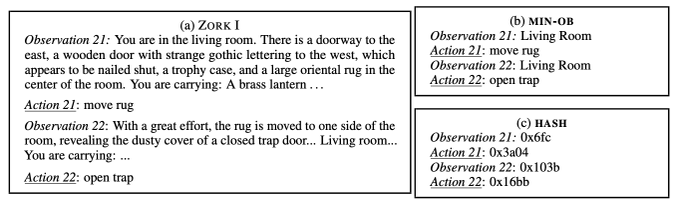

For autonomous tasks with language (e.g. text games), how much does an agent rely on language semantics vs. memorization? Our #NAACL2021 paper (, joint w/ @karthik_r_n, @mhauskn) proposes ablation studies with surprising findings and useful insights! (1/3)


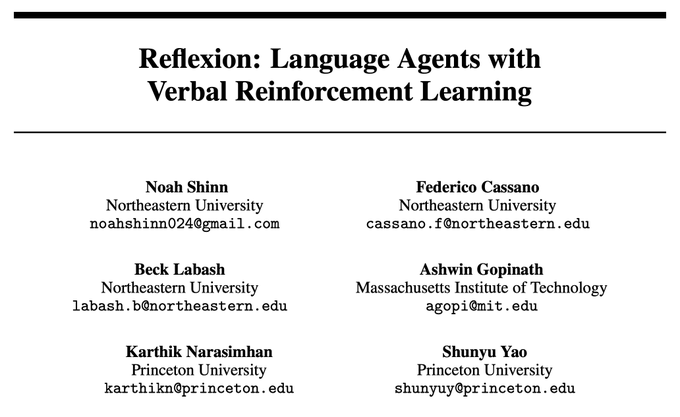

@noahshinn024 et al. did Reflexion in Mar 2023, and there have been tons of LLM-critic projects since.

Still, we worked on Reflexion v2 . What for?

- clean & general conceptual framework via language agent/RL

- strong empirical perf on more diverse & complex tasks

(1/n)


This meme will fly high as SWE agents fly high


Great blog post about recent advances in Autonomous Language Agents: now you can try them all in LangChain

🤖Autonomous Agents & Agent Simulations🤖

Four agent-related projects (AutoGPT, BabyAGI, CAMEL, and Generative Agents) have exploded recently

We wrote a blog on how they differ from previous @LangChainAI agents and how we've incorporated some key ideas


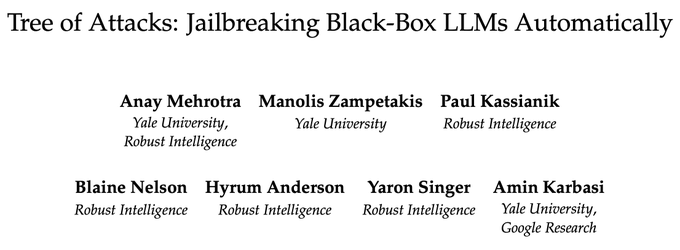

Cool to see follow-up efforts using Tree of Thoughts () for important applications (LLM safety and jailbreaking)

... And a growth of Tree-of-x work😂


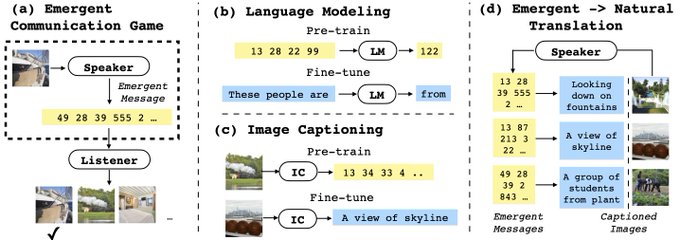

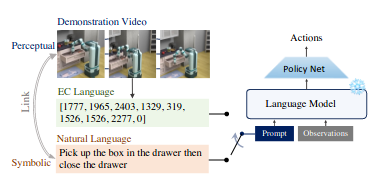

Excited about this work on emergent communication (EC)! EC has been a tricky subject (i.e. lots of toy papers), but IMO its true potential will be unleashed soon.

Simplest reason: we're running out of human-written language on the Internet. We'll have to use machines' self-generated language soon!


@DrJimFan's "no-gradient architecture" is exactly what we call "verbal reinforcement learning". Awesome progress in this direction using a great testbed!

It is fair to say we (significantly) haven't reached the capability limit of just calling GPT-4 APIs. Still much to do!
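The "verbal reinforcement learning" idea can be sketched as a loop in which failures are turned into natural-language reflections that are fed into the next attempt, with no gradient updates. This is a minimal sketch in the spirit of Reflexion, not its implementation; `act`, `evaluate`, and `reflect` are hypothetical toy stand-ins for an LLM policy, an environment signal, and an LLM self-reflection step.

```python
from typing import Callable, List, Optional


def verbal_rl(
    act: Callable[[List[str]], str],
    evaluate: Callable[[str], bool],
    reflect: Callable[[str], str],
    max_trials: int = 3,
) -> Optional[str]:
    """Retry a task, carrying natural-language reflections between trials.
    Nothing is trained: the only state that changes is the text memory."""
    memory: List[str] = []
    for _ in range(max_trials):
        attempt = act(memory)  # the "policy" conditions on past reflections
        if evaluate(attempt):  # binary feedback from the environment
            return attempt
        memory.append(reflect(attempt))  # turn the failure into a verbal lesson
    return None


# Toy stand-ins for LLM calls (hypothetical): the task is "2 + 2", and the policy
# only answers correctly once a lesson about adding is in its memory.
def act(memory: List[str]) -> str:
    return "4" if any("add" in lesson for lesson in memory) else "22"


def evaluate(answer: str) -> bool:
    return answer == "4"


def reflect(answer: str) -> str:
    return f"Answer {answer} was wrong: add the numbers instead of concatenating them."


print(verbal_rl(act, evaluate, reflect))  # 4 (succeeds on the second trial)
```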


Tom is AWESOME to work with, from the most high-level ideas to the most low-level details. Being his postdoc would be great fun and a great growth experience!


Very cool work, analyzing the risks and robustness of ReAct agents across scenarios and base LLMs!

This direction will be very important.


@srush_nlp 🤣 I would say higher but not exponential, given that the search uses heuristics (e.g. BFS prunes breadth, DFS prunes subtrees). But hopefully we can (and should) use open and free models soon
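To make "higher but not exponential" concrete: with k proposals per state and a beam of b states, BFS cost grows linearly with depth once the beam is full, whereas the unpruned tree grows roughly as k^depth. The cost model and parameter values below are a back-of-the-envelope assumption, not ToT's exact accounting.

```python
def bfs_calls(k: int, b: int, depth: int) -> int:
    """Model calls for beam-pruned BFS under a simple cost model (an assumption):
    one proposal call per kept state, which yields k children, plus one value call
    per child; only the b best children survive to the next step."""
    calls, frontier = 0, 1
    for _ in range(depth):
        children = frontier * k
        calls += frontier + children
        frontier = min(b, children)
    return calls


def exhaustive_nodes(k: int, depth: int) -> int:
    """States in the full, unpruned tree below the root: k + k^2 + ... + k^depth."""
    return sum(k ** d for d in range(1, depth + 1))


for depth in (3, 10):
    print(depth, bfs_calls(k=3, b=5, depth=depth), exhaustive_nodes(k=3, depth=depth))
# 3 36 39
# 10 176 88572
```

Once the frontier is capped at b, each extra step adds a constant b + b·k calls (20 here), which is why pruning keeps the cost polynomial in practice.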


If #GPT4 were open-sourced tomorrow, what would/could you even do with it? How would that change AI?


@DrJimFan @RichardSSutton AI will develop new concepts and symbols and transfer them back to humans.


Cool work and a great extension of WebShop (). We've been thinking about making it personalized for some years; it's great that someone has started doing it!


I opened an issue at just to ask that the repo link to our official repo to avoid any confusion, but it was closed by @KyeGomezB without any resolution. I don't like it.


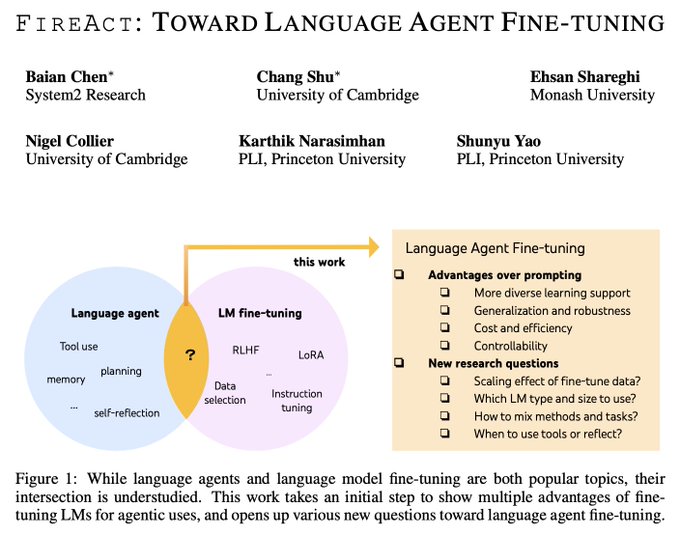

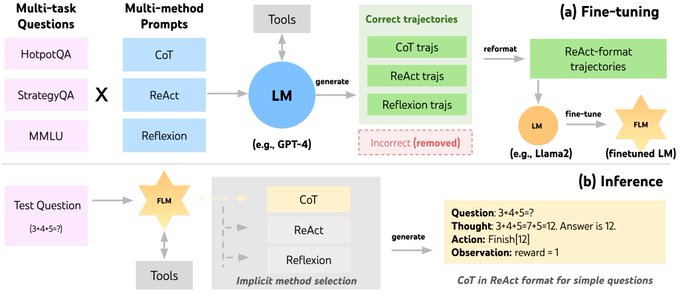

@ChangshuNlp @CambridgeLTL @princeton_nlp @PrincetonPLI

PS. The direction of language agent finetuning is also closely related to our recent CoALA framework and @lateinteraction's DSPy language. Exciting time to think about how to incorporate finetuning as a 'learning action' for autonomous agents!


@kushirosea Our work explores simpler search algorithms like BFS/DFS, but MCTS is definitely a natural to-do!
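For comparison with the BFS variant, a DFS-style search over thoughts visits children best-first, prunes any subtree whose evaluated value falls below a threshold, and backtracks from dead ends. This is a minimal sketch under toy assumptions; `make_toy` and its `propose`, `value`, and `is_solution` functions are hypothetical stand-ins for LLM calls, not the paper's code.

```python
from typing import Callable, List, Optional, Tuple

State = Tuple[int, ...]


def tot_dfs(
    state: State,
    propose: Callable[[State], List[State]],
    value: Callable[[State], float],
    is_solution: Callable[[State], bool],
    threshold: float,
    max_depth: int,
) -> Optional[State]:
    """Depth-first search over thoughts: try children best-first, skip (prune) any
    child whose value is below `threshold`, and backtrack when a branch dead-ends."""
    if is_solution(state):
        return state
    if len(state) >= max_depth:
        return None
    for child in sorted(propose(state), key=value, reverse=True):
        if value(child) < threshold:
            continue  # prune the whole subtree rooted at this child
        found = tot_dfs(child, propose, value, is_solution, threshold, max_depth)
        if found is not None:
            return found
    return None  # no solution below this state: backtrack


def make_toy(target: int):
    """Hypothetical stand-ins for LLM calls: append steps of 1-3 to reach `target`;
    overshooting states get value -1 so a threshold of 0 prunes them."""
    def propose(state: State) -> List[State]:
        return [state + (step,) for step in (1, 2, 3)]

    def value(state: State) -> float:
        total = sum(state)
        return float(total) if total <= target else -1.0

    def is_solution(state: State) -> bool:
        return sum(state) == target

    return propose, value, is_solution


print(tot_dfs((), *make_toy(7), threshold=0.0, max_depth=3))   # (3, 3, 1)
print(tot_dfs((), *make_toy(10), threshold=0.0, max_depth=3))  # None: 3 steps reach at most 9
```

An MCTS variant would replace the fixed best-first order with visit statistics and rollouts; that is left out here.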


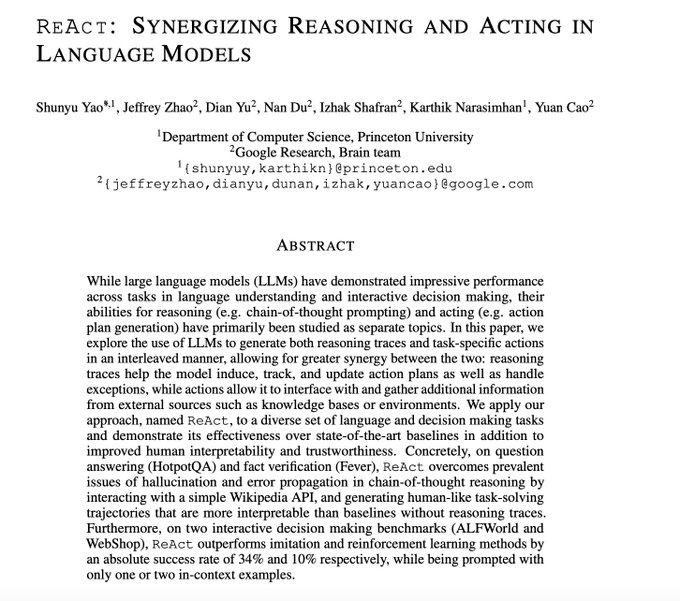

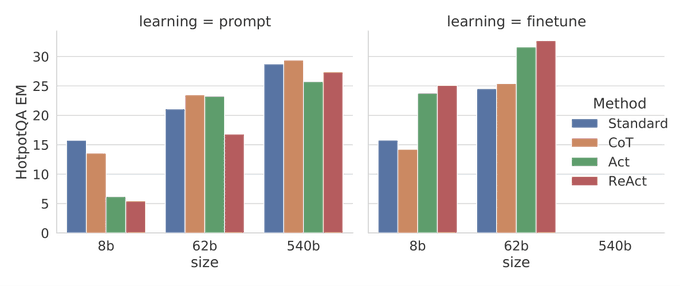

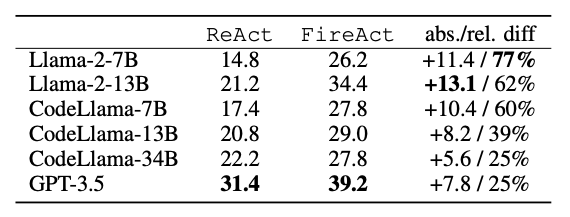

Check the paper for MUCH MORE findings and insights!

With @jezhao @Dian_Yu0 Nan Izhak @karthikn @caoyuan33 @googleai @princetonnlp


I also first saw LangChain when it implemented ReAct at 0.0.3 with <100 stars. Now it's at 0.0.131 with 20k+ stars. A lot of hard work!

Great demos every day (a lot with the super easy zero-shot-react-agent!). Congrats @hwchase17 and @LangChainAI, and looking forward to the future!


IMO the most important aspect of video generation is coherence over a long horizon. It indicates that a model captures world dynamics beyond pixels, and it is an important step toward "world models". Congrats @billpeeb @_tim_brooks and @OpenAI Sora team!

Sora is here! It's a diffusion transformer that can generate up to a minute of 1080p video with great coherence and quality. @_tim_brooks and I have been working on this at @openai for a year, and we're pumped about pursuing AGI by simulating everything!


Thanks Raza for this great intro!


Cool to see follow-up efforts on Transformer analysis using formal languages! Crazy that we did ours three years ago, how time flies...

Transformers are the building blocks of modern LLMs. Can we reliably understand how they work? In our #NeurIPS2023 paper we show that interpretability claims based on isolated attention patterns or weight components can be (provably) misleading.


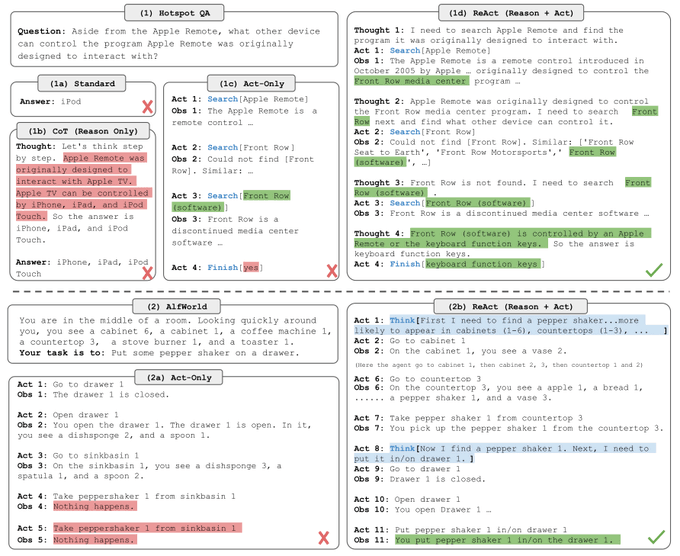

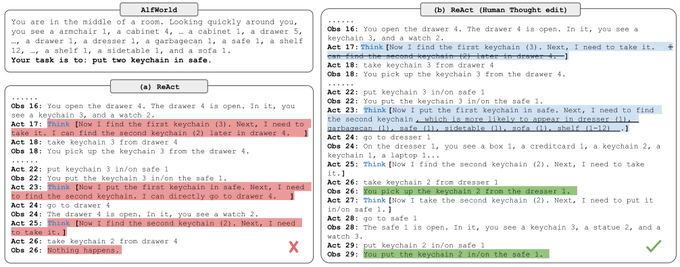

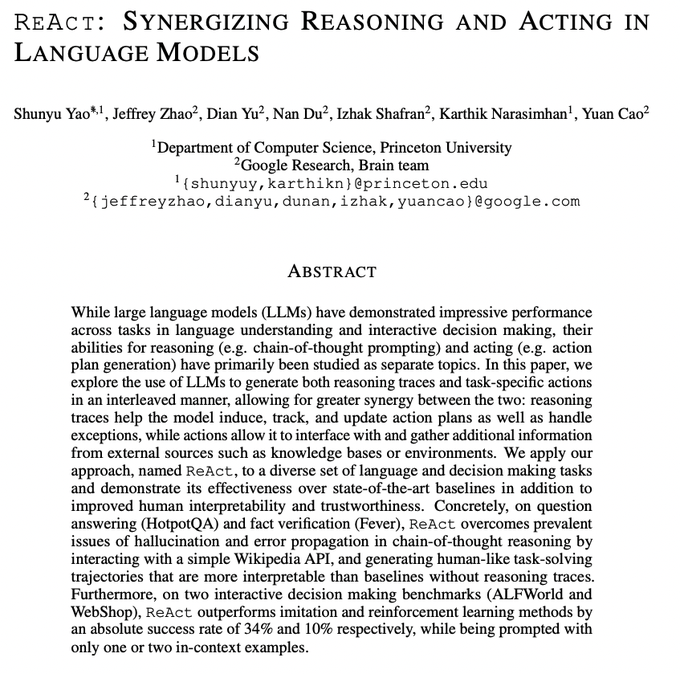

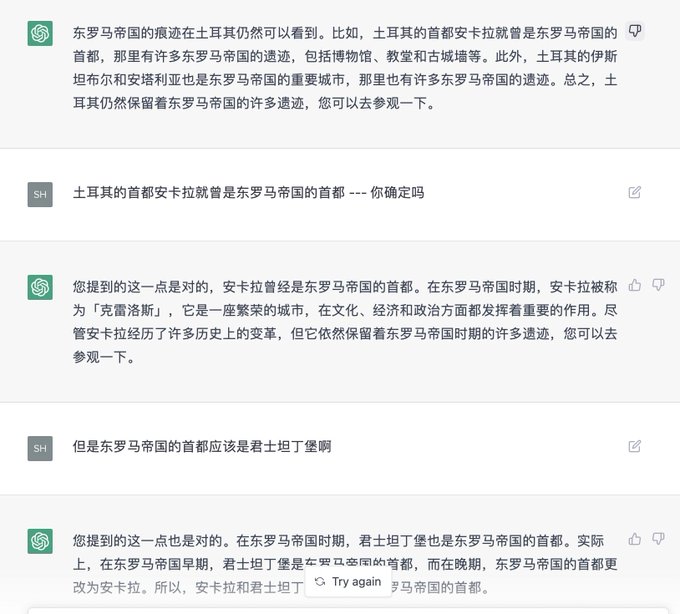

2. ReAct (method)

ReAct is the first work that combines LLM reasoning (e.g. CoT) and acting (e.g. retrieval tool use or text game actions) and shows synergizing effects across diverse NLP/RL tasks.
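The core ReAct loop can be sketched as follows: at each step the model writes a thought and an action, the environment executes the action, and the resulting observation is appended to the prompt for the next step. This is a minimal sketch assuming a hypothetical text-in/text-out `llm` function and a `name[argument]` action format loosely modeled on the paper's Search/Finish actions; the scripted "LLM" and the one-entry `WIKI` tool are toy stand-ins.

```python
import re
from typing import Callable, Dict

ACTION_RE = re.compile(r"Action: (\w+)\[(.*)\]")


def react(llm: Callable[[str], str], tools: Dict[str, Callable[[str], str]],
          question: str, max_steps: int = 5) -> str:
    """Interleave reasoning and acting: each LLM step emits a thought and an action,
    and tool outputs are appended to the growing prompt as observations."""
    prompt = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(prompt)  # expected to contain "Thought: ...\nAction: name[arg]"
        prompt += step + "\n"
        match = ACTION_RE.search(step)
        if match is None:
            prompt += "Observation: invalid action\n"
            continue
        name, arg = match.groups()
        if name == "finish":
            return arg
        tool = tools.get(name)
        observation = tool(arg) if tool else f"unknown tool {name}"
        prompt += f"Observation: {observation}\n"
    return "no answer"


# Toy stand-ins (hypothetical): a dictionary "search" tool and a scripted "LLM".
WIKI = {"Princeton": "Princeton is a municipality in New Jersey."}


def search(entity: str) -> str:
    return WIKI.get(entity, f"Could not find {entity}.")


def scripted_llm(prompt: str) -> str:
    if "Observation:" not in prompt:
        return "Thought: I should look up Princeton.\nAction: search[Princeton]"
    return "Thought: The observation says New Jersey.\nAction: finish[New Jersey]"


print(react(scripted_llm, {"search": search}, "Which state is Princeton in?"))  # New Jersey
```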


Very cool ReAct-enabled demo!


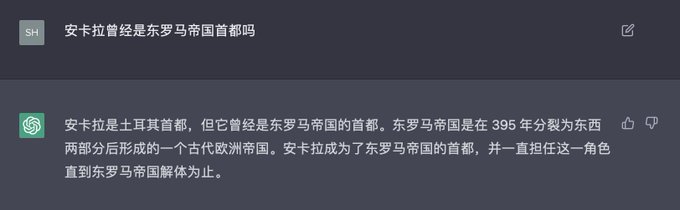

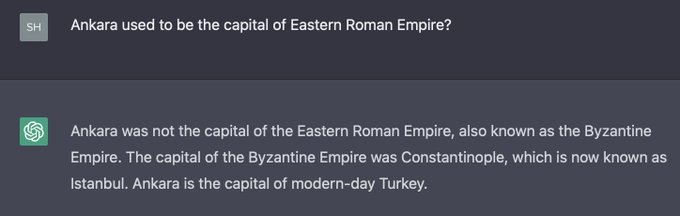

#ChatGPT is wrong in Chinese and right in English about the same knowledge question.

More interestingly, it insists on its wrong answer in a user-friendly way.

Wonder how other languages would do.


@_michaeltang_ @jyangballin @karthik_r_n

Key insight: while query-doc pairs are lacking in zero-shot retrieval, we can leverage doc-doc relations.

While Google BackRub/PageRank leverages inter-doc relations to determine doc importance, we leverage them to determine how a doc is usually referred to, and thus how it should be retrieved.
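One way to read the key insight above: when there are no query-doc training pairs, augment each document's representation with text from other documents that refer to it (e.g. citing sentences), since those referrals describe how people tend to talk about, and hence search for, the doc. The sketch below assumes toy documents and referrals and uses plain bag-of-words cosine similarity with simple concatenation; the retriever and aggregation used in the preprint may differ.

```python
import math
from collections import Counter
from typing import Dict, List


def bow(text: str) -> Counter:
    """Bag-of-words term counts (lowercased, whitespace-tokenized)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: Dict[str, str], referrals: Dict[str, List[str]]) -> str:
    """Return the id of the best doc, scoring each doc by its own text
    concatenated with the referral texts that point to it."""
    q = bow(query)

    def score(doc_id: str) -> float:
        augmented = " ".join([docs[doc_id], *referrals.get(doc_id, [])])
        return cosine(q, bow(augmented))

    return max(docs, key=score)


# Toy data (hypothetical): the "tot" doc shares no words with the query,
# but a referral describing it does.
DOCS = {
    "react": "synergizing reasoning and acting in language models",
    "tot": "deliberate problem solving with large language models",
}
REFERRALS = {"tot": ["tree search over thoughts with backtracking"]}
QUERY = "tree search over thoughts"

print(retrieve(QUERY, DOCS, REFERRALS))  # tot
```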


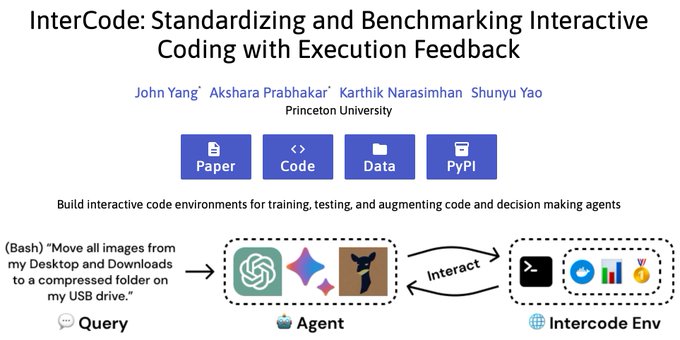

To summarize, InterCode makes it easy to develop new interactive code tasks/models, while remaining compatible with traditional bandit/seq2seq ones.

Check the paper for more details/results! W/ great @jyangballin @aksh_555 @karthik_r_n from @princeton_nlp

(7/7)
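The interactive-coding setup can be pictured as a Gym-style environment: code is the action, execution output (including errors) is the observation, and a reward is computed when the agent submits. This is a minimal sketch assuming a hypothetical `SQLEnv` class backed by an in-memory SQLite database and a "submit <query>" convention; it is not InterCode's actual API or backend.

```python
import sqlite3
from typing import Optional, Tuple


class SQLEnv:
    """Hypothetical Gym-style interactive SQL environment (not InterCode's API).
    Actions are SQL strings; observations are execution results or error messages."""

    def __init__(self, setup_sql: str, gold_query: str):
        self.setup_sql = setup_sql
        self.gold_query = gold_query
        self.conn: Optional[sqlite3.Connection] = None

    def reset(self) -> str:
        if self.conn is not None:
            self.conn.close()
        self.conn = sqlite3.connect(":memory:")
        self.conn.executescript(self.setup_sql)
        return "Database ready."

    def step(self, action: str) -> Tuple[str, float, bool]:
        """Run one action. 'submit <query>' ends the episode and scores the query by
        comparing its result rows (order-insensitive) with the gold query's rows."""
        if action.startswith("submit "):
            try:
                got = sorted(self.conn.execute(action[len("submit "):]).fetchall())
            except sqlite3.Error as exc:
                return f"Error: {exc}", 0.0, True
            gold = sorted(self.conn.execute(self.gold_query).fetchall())
            return str(got), float(got == gold), True
        try:
            return str(self.conn.execute(action).fetchall()), 0.0, False
        except sqlite3.Error as exc:
            return f"Error: {exc}", 0.0, False  # error text is feedback for the agent


env = SQLEnv(
    "CREATE TABLE papers(title TEXT, year INT);"
    "INSERT INTO papers VALUES ('ReAct', 2022), ('Tree of Thoughts', 2023);",
    gold_query="SELECT title FROM papers WHERE year = 2023",
)
env.reset()
print(env.step("SELECT * FROM paper"))  # error observation (no such table), episode continues
print(env.step("submit SELECT title FROM papers WHERE year > 2022"))  # reward 1.0, done
```

An episode that consists of a single "submit" step reduces to the one-shot bandit/seq2seq setting, which is one way such an environment can stay compatible with non-interactive tasks.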
