Rylan Schaeffer

@RylanSchaeffer

Followers: 6K
Following: 8K
Media: 368
Statuses: 1K

CS PhD Student at Stanford Trustworthy AI Research with @sanmikoyejo. Prev interned/worked @ Meta, Google, MIT, Harvard, Uber, UCL, UC Davis

Mountain View, CA

Joined October 2011

The hardest scaling law prediction problems are no match for @YingXiao armed only with a spreadsheet and his measuring stick.

RT @koraykv: Advanced version of Gemini Deep Think (announced at #GoogleIO) using parallel inference time computation achieved gold-medal p….

deepmind.google

Our advanced model officially achieved a gold-medal level performance on problems from the International Mathematical Olympiad (IMO), the world’s most prestigious competition for young...

RT @BrandoHablando: Come to Convention Center West room 208-209 2nd floor to learn about optimal data selection using compression like gzip….

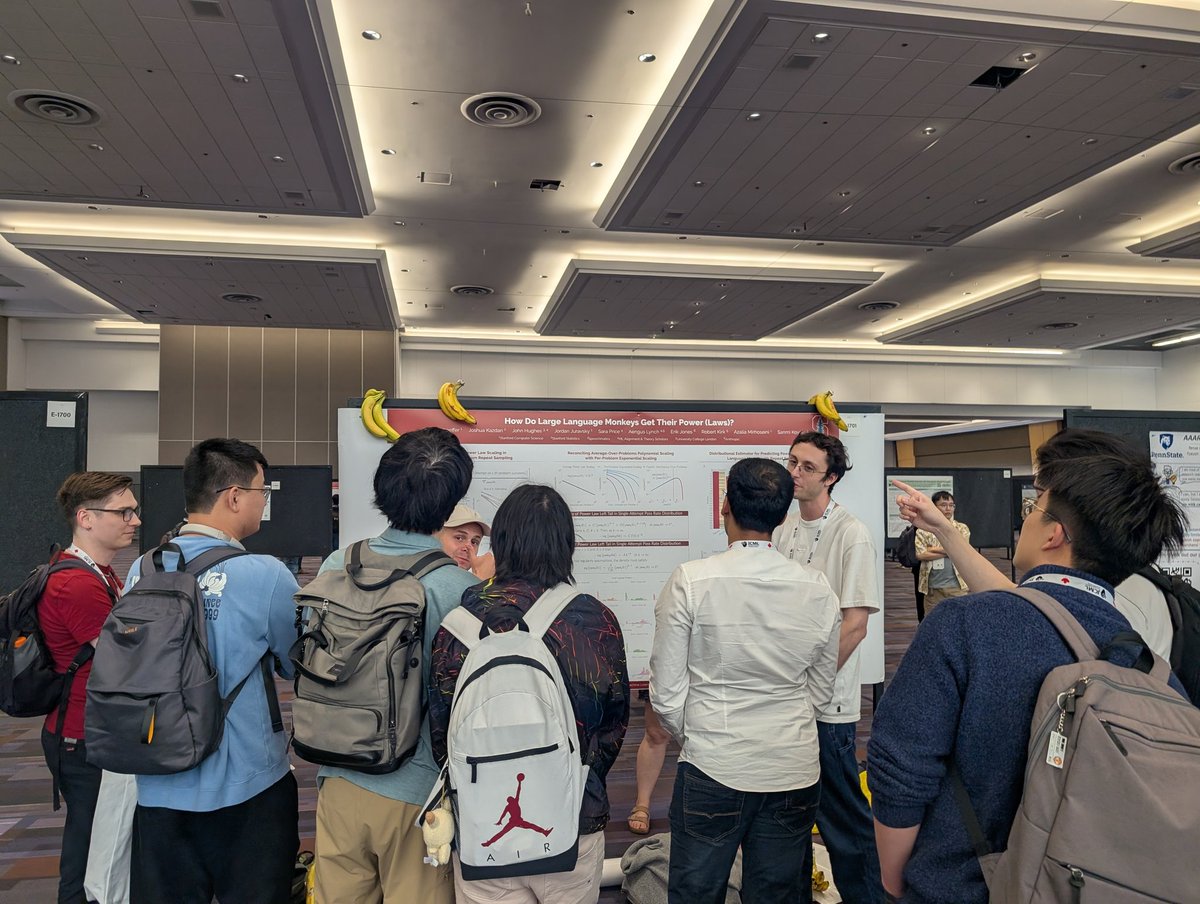

If you want to learn about the power (laws) of large language monkeys (and get a free banana 🍌), come to our poster at #ICML2025 !!

One of two claims is true: 1) we fully automated AI/ML research, published one paper and then did nothing else with the technology, or 2) people lie on Twitter. Come to our poster E2809 at #ICML2025 now to find out which!!!

We refused to cite the paper due to severe misconduct of the authors of that paper: plagiarism of our own prior work, predominantly AI-generated content (ya, the authors plugged our paper into an LLM and generated another paper), IRB violations, etc. Revealed during a long.

Post-AGI employment opportunity: AI Chain of Thought Inspector?

If you don't train your CoTs to look nice, you could get some safety from monitoring them. This seems good to do! But I'm skeptical this will work reliably enough to be load-bearing in a safety case. Plus, as RL is scaled up, I expect CoTs to become less and less legible.

#ICML2025 hot take: A famous researcher (redacted) said they feel like AI safety / existential risk from AI is the most important challenge of our time, and despite many researchers being well intentioned, this person feels like the field has produced no deliverables, has no idea.

An #ICML2025 story from yesterday: @BrandoHablando and I made a new friend who told us that she saw me give a talk at NeurIPS 2023 and then messaged Brando to chat, without realizing that Brando and I are different people 😂

An #ICML2025 story from yesterday: @BrandoHablando and I made a new friend who told us that she saw me give a talk at NeurIPS 2023 and then messaged Brando to chat, without realizing that Brando and I are different people 😂

I love this work! Over a year ago, we pointed out that Modern Continuous Hopfield Networks can be straightforwardly linked to Gaussian KDEs and urged the field to build new bridges between associative memory & probabilistic models. 1/2

We introduce a new energy function: Log-Sum-ReLU (LSR) — inspired by optimal kernel density estimation. Unlike the standard Log-Sum-Exp energy, LSR enables exact memory retrieval with exponential capacity — no exponential separation needed. 🔍🧠
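One way to see the Hopfield/KDE link mentioned above is a quick numerical check. This is a minimal sketch, not the authors' derivation: it assumes the usual modern continuous Hopfield retrieval step ξ_new = Xᵀ softmax(β X ξ), stored patterns (rows of X) of equal norm, and a Gaussian KDE with bandwidth σ² = 1/β centred on those patterns. All function names are hypothetical. Under these assumptions, one Hopfield retrieval step equals one mean-shift step on the KDE, and the Hopfield energy equals the KDE's scaled negative log-density up to an additive constant.

```python
import numpy as np

def hopfield_update(X, xi, beta):
    """Modern continuous Hopfield retrieval step: xi_new = X^T softmax(beta * X xi)."""
    scores = beta * X @ xi
    weights = np.exp(scores - scores.max())  # numerically stable softmax
    weights /= weights.sum()
    return X.T @ weights

def gaussian_mean_shift_step(X, xi, beta):
    """Mean-shift step on a Gaussian KDE (bandwidth sigma^2 = 1/beta) centred on rows of X."""
    log_w = -0.5 * beta * ((X - xi) ** 2).sum(axis=1)
    weights = np.exp(log_w - log_w.max())
    weights /= weights.sum()
    return X.T @ weights

def hopfield_energy(X, xi, beta):
    """E(xi) = -lse(beta, X xi) + 0.5 * ||xi||^2, with lse(beta, z) = log(sum exp(beta z)) / beta."""
    s = beta * X @ xi
    lse = (s.max() + np.log(np.exp(s - s.max()).sum())) / beta
    return -lse + 0.5 * xi @ xi

def neg_log_kde(X, xi, beta):
    """-(1/beta) * log sum_i exp(-beta/2 * ||xi - x_i||^2), normalising constant dropped."""
    a = -0.5 * beta * ((X - xi) ** 2).sum(axis=1)
    return -(a.max() + np.log(np.exp(a - a.max()).sum())) / beta

rng = np.random.default_rng(0)
X = rng.normal(size=(5, 8))
X /= np.linalg.norm(X, axis=1, keepdims=True)  # equal-norm (unit) stored patterns
xi = X[2] + 0.3 * rng.normal(size=8)           # noisy query near pattern 2
beta = 4.0

# Same weights, because ||xi - x_i||^2 = ||xi||^2 - 2 x_i.xi + 1 for unit-norm x_i
print(np.allclose(hopfield_update(X, xi, beta), gaussian_mean_shift_step(X, xi, beta)))  # True
# The energies differ only by the constant 0.5 * ||x_i||^2 = 0.5
print(np.isclose(neg_log_kde(X, xi, beta) - hopfield_energy(X, xi, beta), 0.5))  # True
```

The equal-norm assumption is what makes the match exact: with unequal pattern norms, the KDE weights pick up an extra per-pattern factor exp(-β‖x_i‖²/2) that the plain Hopfield softmax does not have.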

#Vancouver is beautiful! Excited for #ICML2025. Share food recommendations!

On my way to #ICML2025! What food recommendations do people have for #Vancouver? A friend jokingly said Oshinori changed his life.

On my way to #ICML2025! What food recommendations do people have for #Vancouver? A friend jokingly said Oshinori changed his life.

RT @savvyRL: We are raising $20k (which amounts to $800 per person), to cover their travel and lodging to Kigali, Rwanda in August, from ei….

donorbox.org

Watch our fundraiser video to meet us, hear our stories, and learn what your support makes possible. We're raising $20,000 to send 25 early-career AI researchers from Nigeria and Ghana to...

That PNAS paper and its sister are the reasons why I first fell in love with ML & NeuroAI research. Beautiful, elegant research.

arxiv.org

Despite the widespread practical success of deep learning methods, our theoretical understanding of the dynamics of learning in deep neural networks remains quite sparse. We attempt to bridge the...

btw, 1) we proved conditions for a simple version of the Platonic Representation Hypothesis for two-layer linear networks back in 2019 in our @PNASNews paper, "A mathematical theory of semantic development in deep nets" (Fig. 11); 2) we also showed evidence.

RT @jackyk02: ✨ Test-Time Scaling for Robotics ✨. Excited to release 🤖 RoboMonkey, which characterizes test-time scaling laws for Vision-La….
